
Resident Evil 7 - PC Performance Thread

- Thread starter Peterthumpa

- Start date

Self_Destructive

Member

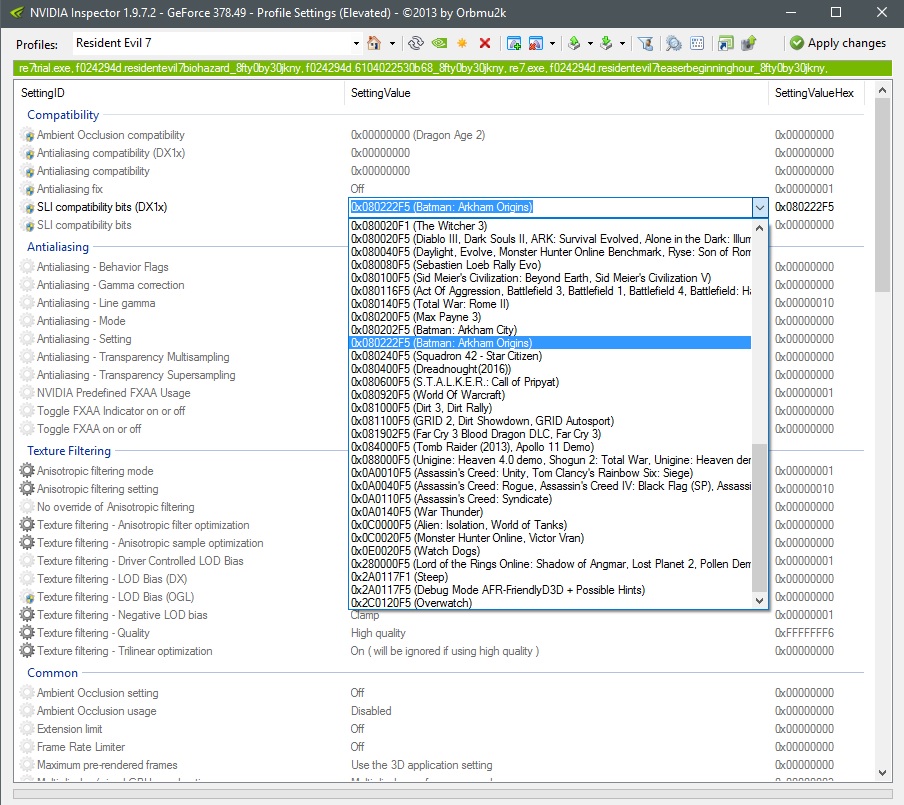

Those with SLI can get better performance by applying these SLI bits with Nvidia Inspector.

Jesb

Member

I suck at this, might need to restart on Easy. I can't down Mia in the first area after she cuts your hand off and attacks with a chainsaw in the attic.

I had trouble too, until I went into the next room. You'll have more distance to play with. Fire away as much as you can in this room, then run back to the attic area and she's ready to go down.

GTX 960, i5-6600 on Windows 10, and the game is running like absolute shit; it keeps freezing, sometimes for more than 10 seconds.

I downloaded the latest drivers from GeForce Experience and it's still terrible. Is there any specific graphics option that's causing these issues? I haven't tried reducing everything to low yet.

CGriffiths86

Member

Anyone here having issues with the game streaming to another PC or Steam Link? Every other game in my library works, but RE7 seems to go all black when launching.

No, I've only played it via my Steam Link so my wife could watch. It does get stuttery every once in a while and I can't say for sure if the game is stuttering or lag between the steam link and my PC.

I played the game from beginning to end on my Steam Link with no issues. I'm wired, though.

I'm also wired, but it seems the game launches in windowed mode and then switches to full screen a few seconds later. Could that be messing with the stream itself? Does yours also do this?

Very weird.

Yeah mine seems to open a black window before it goes full screen also. For me it's not causing any issues with my Steam Link when it does that luckily.

Lord Phol

Member

I played the game from beginning to end on my steam link with no issues. I'm wired though.

Could you share your host and client settings? Most games work flawlessly for me on the Steam Link, but this one stutters like crazy on the TV, even though it's fine on the host PC's screen.

These might just be the default settings; I'm not sure if I ever bothered to change anything.

The following are checked under advanced host options, everything else is unchecked:

-Adjust resolution to improve performance

-Enable hardware encoding

-Enable hardware encoding on Nvidia GPU

Number of software encoding threads set to automatic.

Client options: Beautiful

Advanced client options

-Limit bandwidth set to automatic

-Limit resolution set to display

-Speaker config set to automatic

-Hardware encoding checked

Coreda

Member

Why did they chart the 970 performance on the 1080p benchmarks yet neglect it for the 1440p+ scores? Even the 960 2GB gets a spot in all of them.

Aztechnology

Member

Why did they chart the 970 performance on the 1080p benchmarks yet neglect it for the 1440p+ scores? Even the 960 2GB gets a spot in all of them.

Guru 3D still doesn't even have the scores up for updated drivers on their main benchmark page. I have no idea what they are doing.

Those with SLI can get better performance by applying these SLI bits with Nvidia Inspector.

I've been posting my SLI findings in here, curious if you guys have done any testing.

In particular I'd like to find a solution for the banding/flickering problem that occasionally showed up, and better options for AA. SMAA wasn't cutting it but I couldn't get any kind of forced AA to work. TAA goes all bonkers with SLI.

Coreda

Member

Guru 3D still doesn't even have the scores up for updated drivers on their main benchmark page. I have no idea what they are doing.

It's also puzzling because, according to Steam's own hardware surveys, the card has been the most-used GPU for a while, so it's hardly irrelevant.

ThePeoplesBureau

Member

Patch #1 Release Notes (Jan. 27th)

Community Announcements - WBacon [capcom]

The following issues have been addressed in today's update:

- Fixed an issue where saving game progress was no longer possible if the player deleted the local save file while Steam Cloud has been turned off.

- Fixed an issue where HDR mode is turned back to ON upon app launch if the player quits the prior game session in full screen mode and with HDR set to OFF.

Lord Phol

Member

Thanks! I'll do some tinkering and see what happens.

Running at 4K HDR on a Samsung KS8000

Specs

i7 7700K

GTX 1080

16GB

Evo 960 SSD

Getting as high as 60 FPS with these settings, but seeing dips as low as 30 FPS in some areas. Is there anything in these settings I can change to improve performance? If I enable Motion Blur or Depth of Field, I see a massive performance hit.

Screen Resolution - 4K

Refresh Rate - 59

Display Mode - Full screen

Field of View - Default

Frame Rate - Variable

V-Sync - ON

Rendering - Normal

Resolution Scaling - 1.0

Texture Quality - Very High

Texture Filtering - Very High

Mesh Quality - Very High

Anti-Aliasing - FXAA+TAA

Motion Blur - OFF

Effects Rendering - High

Depth of Field - OFF

Shadow Quality - High

Dynamic Shadow - ON

Shadow Cache - OFF

Ambient Occlusion - SSAO

Mister Wolf

Member

Wish there was an option to turn off anti-aliasing completely.

Running At 4K HDR on a Samsung KS8000

What's your reflections setting? Dropping it to Variable made a difference for me. Plus, it looks better, as full reflections cause all kinds of horrible artefacts.

Kuranghi

Member

Assuming that you are not hitting a performance target (e.g. 60 FPS frame limiter / V-Sync), having your GPU being under-utilized - especially to the point where the GPU is downclocking - is typically a sign of a big CPU bottleneck.

With a 2500K at 4.5GHz I had issues with that happening in nearly every big game released in 2016.

I upgraded from a 960 to a 1070 and the only thing which changed in those sections of games is that the GPU usage dropped further while performance remained the same.

I can't wait for Ryzen to be released so that I can either upgrade to it or a 7700K, depending on the price/performance offered.

I'm on a 3770K at 4.2GHz, so I think you're right that I'm bottlenecked in a number of recent games. But how does that explain this: the GPU is running at 900MHz and I'm getting 40-ish FPS in a game; I then force Prefer Maximum Performance, watch the core clock go to the boost value of 1329MHz in Afterburner, go back in-game and get a locked 60 FPS? (I know Prefer Maximum Performance doesn't always force the core/memory clocks to the EVGA boost values, 1329MHz in my case, but that's my experience here.)

My CPU is still at 4.2GHz, so what's changed?

Patch #1 Release Notes (Jan. 27th)

Sweet. That HDR bug was very annoying.

What's your reflections setting? Dropping it to Variable made a difference for me. Plus, it looks better, as full reflections cause all kinds of horrible artefacts.

I'm not seeing any artifacts; it just drops occasionally. It's set to High, I believe.

If that's what is happening on your system, where forcing it into a higher power state improves performance that significantly, it sounds like something has gone wrong and is preventing the GPU from boosting properly.

I'm not really sure what might fix that.

What typically happens is that, if the CPU isn't fast enough to prep data for the GPU, the GPU is just sitting idle unable to do any more work.

When the GPU is idle or has a low workload, it reduces its clock speed.

Forcing the GPU to run at its max boost clock shouldn't affect performance at all in that situation because the issue is that the CPU doesn't have any more work prepared for it to do, not that the GPU is too slow at completing that work.
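A toy model of that explanation, as a Python sketch. It assumes CPU preparation and GPU rendering overlap so the slower side sets the frame time, and that GPU frame cost scales inversely with core clock. The 25 ms CPU cost and 15 ms GPU cost are made-up numbers chosen to land near 40 FPS, not measurements from RE7; the function names are illustrative.

```python
def frame_stats(cpu_ms, gpu_ms):
    """Return (fps, gpu_utilization) for a pipelined CPU -> GPU frame loop.

    Toy model (an assumption): CPU prep and GPU rendering overlap, so the
    slower of the two sets the frame time and the faster one sits idle.
    """
    frame_ms = max(cpu_ms, gpu_ms)        # the bottleneck dictates pacing
    fps = 1000.0 / frame_ms
    gpu_utilization = gpu_ms / frame_ms   # share of each frame the GPU is busy
    return fps, gpu_utilization


def gpu_ms_at_clock(base_gpu_ms, base_mhz, new_mhz):
    """Scale GPU frame cost inversely with core clock (a simplification)."""
    return base_gpu_ms * base_mhz / new_mhz


if __name__ == "__main__":
    cpu_ms = 25.0  # hypothetical CPU cost per frame: caps the loop at 40 FPS
    for mhz in (900, 1329):
        gpu_ms = gpu_ms_at_clock(15.0, 900, mhz)  # hypothetical 15 ms at 900 MHz
        fps, util = frame_stats(cpu_ms, gpu_ms)
        print(f"{mhz} MHz: {fps:.1f} FPS, GPU busy {util:.0%}")
```

In the CPU-bound case the FPS stays at 40 while GPU utilization drops as the clock rises, which matches the 960-to-1070 upgrade experience described above. If the GPU side were the slower one, for example because it is stuck at a low clock, the same `frame_stats` call would show FPS rising with the clock, which is one way to read the Prefer Maximum Performance result.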

The way the reflections are sampled is very "noisy". If you look at the floorboards in the lower-right of this video, it's pretty noticeable.

Sanctuary

Member

Looks like it still happens in the full game. (gfy link)

I should have recorded this in 720p because their compression hides a lot of the problems with SMAA.

Is no-one else seeing this?

As I said before, it gets worse the higher the framerate is. (120 FPS in the video again)

I can't believe they didn't include a sharpening filter for the TAA option in the full game either.

---

For the people complaining about black crush and banding in HDR: it's not just HDR which is affected.

The black crush almost seems to be an artistic decision to intentionally reduce what you can see.

Here's the game running in SDR at the default brightness in sRGB:

This isn't the issue I'm talking about. The banding in the white image is extremely subtle compared to what I see. On my screen, the banding is more like this when using HDR (it's less pronounced in SD, but still not quite as good as your shot):

The point is, in HDR it should be even more subtle than in your shot, not more pronounced.

In terms of black crush, that scene isn't really a good example, because the darkness there seems clearly intended. When black crush is truly happening, it's easy to tell. There's also a difference in black levels between sRGB and BT.709 when playing in SD.

Patch #1 Release Notes (Jan. 27th)

Well that's good news. Now I can actually pick my poison. Although I wonder if it also resets to your DEFAULT settings for SD instead of the whateverthefuck it was doing to the brightness and potentially gamma when switching. That was really annoying.

edit: Well, the selection works, but the game still fucks with your display settings. Having it set to non-HDR will screw up the brightness, and the gamma seems out of whack. Changing the color space doesn't really fix it, and lowering the brightness to the lowest possible setting in the game menu, and then even lowering it on your TV, still doesn't look right. You get a washed-out look coupled with shadow detail that looks blotchy and "painted on". Exiting the game and then restarting it with HDR off doesn't correct the issue.

I didn't mean to suggest that it was the same, just that banding is still a problem for the game in SDR too, so it's a general problem with the game/engine and not limited to HDR.

Here's a more obvious example from when I brought up the pause menu.

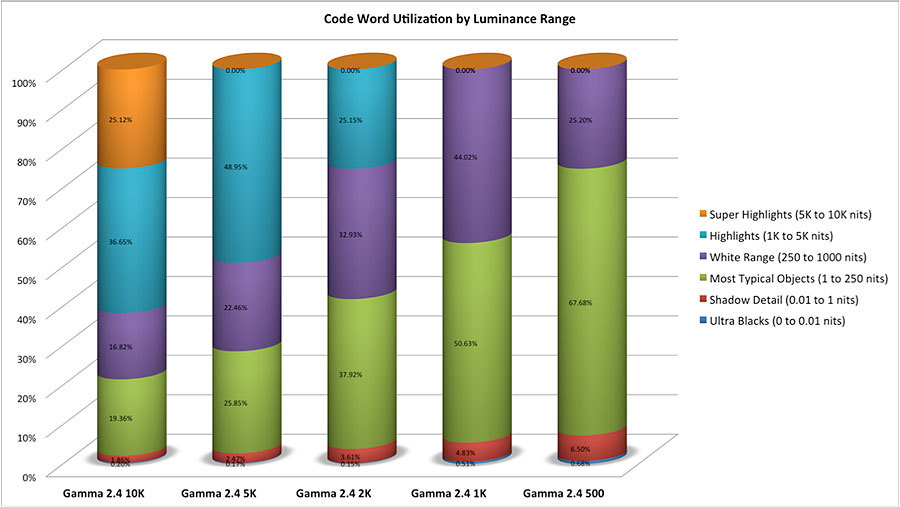

However, HDR is not going to have less banding in bright areas. If anything, HDR is going to have more banding.

With gamma-encoded content (SDR) all of your bits are "wasted" on the bright tones, and you have fewer and fewer steps available as you near black.

With PQ-encoded content (HDR) it shifts gradation away from the highlights so that you have more bits available for shadows and mid-tones.
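To put rough numbers on the PQ point, here is a Python sketch of the SMPTE ST 2084 (PQ) transfer function, assuming full-range 10-bit code values (HDR10 video typically uses limited range, which shifts the counts a little). It counts how many of the 1024 codes decode to 100 nits or less; the answer comes out at roughly half, so the remaining codes have to stretch from 100 up to 10,000 nits. The helper names are illustrative.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_encode(nits):
    """Absolute luminance (cd/m^2) -> normalized PQ signal in [0, 1]."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1 + C3 * ym1)) ** M2


def pq_decode(signal):
    """Normalized PQ signal in [0, 1] -> absolute luminance (cd/m^2)."""
    ep = signal ** (1 / M2)
    return PEAK_NITS * (max(ep - C1, 0.0) / (C2 - C3 * ep)) ** (1 / M1)


def codes_at_or_below(nits, bits=10):
    """Count full-range integer code values that decode to <= nits (illustrative)."""
    max_code = (1 << bits) - 1
    return sum(1 for code in range(max_code + 1) if pq_decode(code / max_code) <= nits)


if __name__ == "__main__":
    total = 1 << 10
    below_100 = codes_at_or_below(100.0)
    print(f"100 nits encodes to a PQ signal of {pq_encode(100.0):.3f}")
    print(f"{below_100} of {total} 10-bit codes cover 0-100 nits; "
          f"{total - below_100} cover 100-10,000 nits")
```

How a particular game uses that range, and how a particular TV tone-maps it, is outside what this sketch models.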

And LG's OLEDs are known to have issues with banding in highlight regions - even the $20,000 77" model.

I assume that the game only allows you to enable HDR in Fullscreen mode, but if it still gives you the option to use it in Borderless Windowed Mode, you should avoid that.

Unless HDR is treated differently, Windowed Mode is limited to 8-bit color while Fullscreen Mode is capable of outputting > 8-bit color.

Sanctuary

Member

It only allows it in Fullscreen. I still don't believe that it's actually outputting in 10-bit, though. I can't even select 10-bit in the Nvidia Control Panel unless I'm using 4:2:2 or 4:2:0, both of which screw with the colors and are incorrect. RGB can't use more than 8-bit either, and I have to have that selected. Unless the game, when it switches to HDR, ignores the bit depth the video card is set to and overrides it, it's only outputting 8-bit for me. Although that override shouldn't even be possible, simply due to bandwidth limitations.

I think maybe the issue I'm having wrapping my head around banding with HDR is that I'm not just thinking of the highlights in brighter and darker areas, but also the wider color gamut that should be along for the ride. But I guess since it's possible to use HDR without the wider color gamut, I shouldn't assume that it is. With the wider color gamut, there should be less banding.

flipswitch

Member

I decided to try out the ReShade mod to counter the blur caused by TAA. It's very easy to set up: I just added LumaSharpen, set it to around 2.00, and it made a big difference to image quality.

There's a new patch out as well. It fixes a few things:

Resident Evil 7 - Patch #1 Changelog:

Fixed an issue where saving game progress was no longer possible if the player deleted the local save file while Steam Cloud has been turned off.

Fixed an issue where HDR mode is turned back to ON upon app launch if the player quits the prior game session in full screen mode and with HDR set to OFF.

Edit: I see it's been posted already.

I've been posting my SLI findings in here, curious if you guys have done any testing.

In particular I'd like to find a solution for the banding/flickering problem that occasionally showed up, and better options for AA. SMAA wasn't cutting it but I couldn't get any kind of forced AA to work. TAA goes all bonkers with SLI.

Yeah, TAA seems broken in SLI. I'll just have to roll with SMAA at 4K.

Switching between RGB and YCC shouldn't have a noticeable effect on color reproduction.

The only change I might expect would be the levels switching from full to limited.

Dropping from 4:4:4 to 4:2:2 or 4:2:0 shouldn't affect color reproduction either, only chroma resolution.
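A small Python sketch of what 4:2:0 does, assuming BT.709 luma coefficients, normalized floats and a plain 2x2 box average (real GPUs and TVs may filter and position chroma samples differently). Luma stays at full resolution; only the two chroma channels are shared across each 2x2 block, so a flat color comes through unchanged while a sharp edge between two colors bleeds. The function names are illustrative.

```python
KR, KB = 0.2126, 0.0722  # BT.709 luma coefficients
KG = 1.0 - KR - KB


def rgb_to_ycbcr(r, g, b):
    """Normalized RGB -> (Y, Cb, Cr) with BT.709 coefficients, no range scaling."""
    y = KR * r + KG * g + KB * b
    return y, (b - y) / (2 * (1 - KB)), (r - y) / (2 * (1 - KR))


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr; results are not clamped, so they can leave [0, 1]."""
    r = y + 2 * (1 - KR) * cr
    b = y + 2 * (1 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


def subsample_420(image):
    """Keep full-resolution luma, share one averaged chroma pair per 2x2 block.

    `image` is a list of rows of (r, g, b) tuples; width and height are assumed
    even to keep the sketch short.
    """
    h, w = len(image), len(image[0])
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    out = [[None] * w for _ in range(h)]
    for by in range(0, h, 2):
        for bx in range(0, w, 2):
            block = [ycc[by + dy][bx + dx] for dy in (0, 1) for dx in (0, 1)]
            cb = sum(p[1] for p in block) / 4
            cr = sum(p[2] for p in block) / 4
            for dy in (0, 1):
                for dx in (0, 1):
                    luma = ycc[by + dy][bx + dx][0]  # luma is untouched
                    out[by + dy][bx + dx] = ycbcr_to_rgb(luma, cb, cr)
    return out


if __name__ == "__main__":
    WHITE, RED = (1.0, 1.0, 1.0), (1.0, 0.0, 0.0)
    # A 4-wide, 2-high image with a white/red edge inside the left 2x2 block.
    edge = [[WHITE, RED, RED, RED] for _ in range(2)]
    for px in subsample_420(edge)[0]:
        print(tuple(round(c, 2) for c in px))
```

Running it, the fully red right-hand block comes back untouched, while in the left block the white pixel picks up a red tint and the red pixel darkens. That is why fine colored detail such as text is where subsampling tends to show first.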

If your video card is in a mode which doesn't support >8-bit color, then I would expect that the game outputs 10-bit or whatever it uses and the GPU converts that down to 8-bit.

I wouldn't recommend that.

You should be using a mode that supports at least 10-bit color for HDR.
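The observation above that 10-bit is only selectable with 4:2:2 or 4:2:0 fits HDMI 2.0 bandwidth at 4K60. A back-of-the-envelope Python sketch, assuming an HDMI 2.0 link (600 MHz maximum TMDS character rate, i.e. 18 Gbps), the standard CTA-861 4K60 timing of 4400 x 2250 total pixels (594 MHz pixel clock), deep-color RGB raising the TMDS clock by bits-per-component / 8, 4:2:2 carried in a fixed 24-bit container, and 4:2:0 halving the clock. None of these figures were read from the poster's hardware.

```python
# All figures below are assumptions for a sketch, not values read from any device.
H_TOTAL, V_TOTAL, REFRESH_HZ = 4400, 2250, 60   # CTA-861 4K60 timing incl. blanking
HDMI20_MAX_TMDS_MHZ = 600.0                     # HDMI 2.0 limit (18 Gbps over 3 lanes)


def tmds_mhz(bpc, sampling):
    """TMDS character rate needed for 4K60 at a given bits-per-component and sampling."""
    pixel_mhz = H_TOTAL * V_TOTAL * REFRESH_HZ / 1e6   # 594 MHz for 4K60
    if sampling == "RGB/4:4:4":
        return pixel_mhz * bpc / 8      # deep color raises the clock by bpc / 8
    if sampling == "4:2:2":
        return pixel_mhz                # fixed 24-bit container, up to 12 bpc
    if sampling == "4:2:0":
        return pixel_mhz / 2 * bpc / 8  # 1.5 samples per pixel instead of 3
    raise ValueError(f"unknown sampling {sampling!r}")


def fits_hdmi20(bpc, sampling):
    return tmds_mhz(bpc, sampling) <= HDMI20_MAX_TMDS_MHZ


if __name__ == "__main__":
    for sampling in ("RGB/4:4:4", "4:2:2", "4:2:0"):
        for bpc in (8, 10, 12):
            gbps = tmds_mhz(bpc, sampling) * 3 * 10 / 1000  # 3 lanes, 10 bits/character
            print(f"{sampling:9} {bpc:2}-bit: {gbps:5.2f} Gbps, "
                  f"fits HDMI 2.0: {fits_hdmi20(bpc, sampling)}")
```

Under those assumptions, 8-bit RGB at 4K60 just fits (17.82 Gbps), 10-bit RGB needs about 22.3 Gbps and does not, and every 4:2:2 and 4:2:0 combination fits. It only describes what can cross the cable, not what the game renders internally.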

Wider color gamuts can also make banding more noticeable, as the difference between two adjacent color steps is larger. Bit depth also needs to increase with gamut.

Let's say that color gamut is represented by a ruler. Bit-depth would be the markings on that ruler.

The standard gamut is a 12" ruler, and 8-bit color gives it one marking every inch, dividing it into twelve steps.

Now let's say that we double the width of that gamut.

It stretches the ruler out to be twice that length, so it's now a 24" ruler.

If bit-depth remains at 8-bit there are still only twelve markings on the ruler, so each step is now 2" apart.

Increasing the gamut without changing the bit-depth has halved the precision inside it - so banding is going to become more noticeable.

What we need to do is divide the ruler into more sections.

Increasing the bit-depth from 8-bit to 10-bit results in 4x as many steps, so there are now 48 markings on the ruler.

But since the ruler is twice as long now, it means that every step is 0.5" apart.

This ends up better than the standard gamut's 1" steps, but only 2x better, not 4x. That may still not be precise enough to avoid banding entirely.
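The same arithmetic with real level counts, as a Python sketch: the 12-marking ruler is a simplification, since an 8-bit channel has 256 levels (255 steps) and a 10-bit channel has 1024 (1023 steps). Gamut width is reduced to one normalized number here, which is an assumption for illustration; real gamuts are regions in a color space, not lengths, and `step_size` is a hypothetical helper.

```python
def step_size(gamut_width, bits):
    """Distance between adjacent code values when 2**bits levels span gamut_width."""
    return gamut_width / ((1 << bits) - 1)


if __name__ == "__main__":
    standard = step_size(1.0, 8)   # standard gamut, normalized to width 1
    wide_8 = step_size(2.0, 8)     # hypothetical gamut twice as wide, still 8-bit
    wide_10 = step_size(2.0, 10)   # same wide gamut at 10-bit
    print(f"wide gamut, 8-bit:  {wide_8 / standard:.2f}x the standard step")
    print(f"wide gamut, 10-bit: {wide_10 / standard:.2f}x the standard step")
```

The wide-gamut 8-bit step comes out at 2.00x the standard one, and the wide-gamut 10-bit step at about 0.50x (510/1023): twice as fine rather than four times, as in the ruler example.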

PRBoricua

Member

Does anybody know what option can get rid of the ... for lack of a better word ... "artifacting" that I'm seeing? It looks like white dots. Almost like confetti or something, it seems to show up in areas that are supposed to be wet, like blood, or any water. Although I see them to a lesser extent in other places too. It's very distracting.

Man, vsync, double buffering, triple buffering, adaptive vsync. So confusing.

Can someone explain this to me: why will the framerate be halved with double buffering and vsync if the FPS drops below 60, but not when triple buffering? I'm trying to picture it in my mind, but I don't get it. Should I always use triple buffering? What type of buffering is the vsync in the RE7 options using? Should I cap at 30 with vsync on instead of letting it hover at around 40 when setting framerate to "Variable"?

Is anyone getting slower transitions alt-tabbing in and out of games after installing the newest Nvidia driver?

I have a 980Ti.

borderless windowed?

The Janitor

Member

Can someone explain this to me: why will the framerate be halved with double buffering and vsync if the FPS drops below 60, but not when triple buffering? I'm trying to picture it in my mind, but I don't get it. Should I always use triple buffering?

Yep, always use triple buffering.

Compsiox

Banned

borderless windowed?

It wasn't like this before I installed the newest driver.

I prefer fullscreen.

Double buffering always syncs the framerate to divisors of your refresh rate.

So if you have a 60Hz screen that is 60/30/20/15 etc.

If you have a 144Hz screen that is 144/72/48/36 etc.

With triple buffering, the framerate isn't synced with the refresh rate when it's anything less than it.

Frame presentation is synced to the refresh rate so that there isn't any tearing though.

If you have an NVIDIA GPU and you want to lock a game to 30 FPS, I would suggest setting V-Sync to Adaptive (Half-Refresh Rate) in the NVIDIA Control Panel.

That uses double buffered V-Sync (1 frame less lag than triple buffering) and allows it to tear if the framerate drops below 30.

Setting the maximum pre-rendered frames to 1 further reduces lag with V-Sync on.

Never set these on the global profile, set them on the game profile.

This assumes that the game is at 30 FPS the majority of the time though. If it's constantly dropping below 30 I guess triple buffering might be the best option.
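A simplified Python model of the two behaviours described above, assuming a constant render time and ignoring the CPU's pre-rendered frame queue. With double buffering each frame occupies a whole number of refresh intervals; with triple buffering the GPU never waits on the swap, so the number of unique frames shown per second is just the render rate capped at the refresh rate (frame pacing can still be uneven). The function names are illustrative.

```python
import math


def double_buffered_fps(render_fps, refresh_hz):
    """Steady-state FPS with double-buffered V-Sync and a constant render time.

    The GPU has no spare buffer, so after finishing a frame it waits for the
    next refresh to swap; each frame therefore occupies a whole number of
    refresh intervals (refresh / 1, refresh / 2, refresh / 3, ...).
    """
    intervals = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals


def triple_buffered_fps(render_fps, refresh_hz):
    """With a spare back buffer the GPU keeps rendering while it waits, so the
    unique frames shown per second is the render rate, capped at the refresh rate."""
    return min(render_fps, refresh_hz)


if __name__ == "__main__":
    for refresh in (60, 144):
        print(f"{refresh}Hz double-buffered steps:", [refresh / n for n in range(1, 5)])
    for render in (59, 45, 40):
        print(f"render {render} FPS on 60Hz: "
              f"double {double_buffered_fps(render, 60):.0f}, "
              f"triple {triple_buffered_fps(render, 60):.0f}")
```

On a 60Hz display, a game that can render 40 FPS shows 30 FPS double-buffered and 40 FPS triple-buffered, which is the halving asked about earlier in the thread.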

As expected, my 6700k/1080 is tearing through this.

Max settings except AA at TAA only, at 1.7 resolution scaling. HBAO+ enabled. No drops under 60fps so far, mostly hovering in the high 60s to 80s. Even 1.8x is good for >60fps 99% of the time.

This game seems to run particularly hot though, and my GPU fan profile struggles to keep temps on target. My boost suffers as a result, flitting between 2000-2025 MHz rather than the stable 2038 I see in other games. Whacking the fans up isn't an option in a game like this.

Only encountered one loading hitch so far, going between a gated area outside near the caravan.

Sanctuary

Member

Switching between RGB and YCC shouldn't have a noticeable effect on color reproduction.

The only change I might expect would be the levels switching from full to limited.

Dropping from 4:4:4 to 4:2:2 or 4:2:0 shouldn't affect color reproduction either, only chroma resolution.

With it set to 4:2:2, when I start the game and the Steam overlay pops up, the white lettering and the Steam symbol become a bright reddish orange. In-game text is all screwed up too, with every few letters, or parts of letters, showing the same discoloration. In 4:2:0 the issue isn't quite as pronounced, but I still see what looks like chroma issues in white, where you can see bits of red, green and blue in some solid white letters, or every few letters are a solid orange. That's not right at all. Setting it to 8/10/12-bit doesn't change this.

If your video card is in a mode which doesn't support >8-bit color, then I would expect that the game outputs 10-bit or whatever it uses and the GPU converts that down to 8-bit. I wouldn't recommend that.

You should be using a mode that supports at least 10-bit color for HDR.

See above. There's also no appreciable change in highlights or shadow detail. Everything looks the same as in RGB Full/8-bit.

Wider color gamuts can also make banding more noticeable, as the difference between two steps in color is larger. Bit-depth also needs to increase with gamut.

This is exactly what I've been assuming all this time, and I've even said that it's as if the TV is expecting 10-bit but only getting 8-bit, which is why it looks the way it does. I've been saying "HDR" as a generic catch-all, but as I said before, that was assuming the wider color gamut was also being used. As for your explanation, I knew that, which is why I thought the full, uncompressed color information wasn't being sent to whatever was expecting to display it.

Also, I think the overall implementation of HDR in this game (on PC at least) is not quite right. At times it comes off like the old software based HDR in games like Half-Life 2 and Oblivion where sometimes objects would for no reason become blazingly, unnaturally bright as though they are emitting a light source instead of reflecting one. Either that, or the LG OLED just isn't doing it correctly at all.

In the same area at the start in your second screenshot where the lamp is...

1. There's no light source below the lamp. There's a very dark shadow, which makes zero sense.

2. As you near the door, it looks about how it should, with the light from the lamp illuminating it. However, when you get between the lamp and the door and slowly turn towards the door, its luminance skyrockets for absolutely no reason. Similarly, if you look at the lamp from most angles, it looks fine, but if you hit a certain angle, it also goes supernova for no reason. After this happens, if you quickly turn around and look at the area outside the porch, it has suddenly gotten brighter too.

You could argue that this one area is just an outlier, but do we know that? If it's happening here, how do we know it's not being screwy elsewhere? I can honestly say that the only area in the game where any supposed HDR implementation "wowed" me is at the kitchen table when the lights are still out. In fact, the way the game looks there without HDR doesn't even make sense, because the room is bright enough that candles wouldn't even be needed. With HDR enabled, it looks very natural and the area with the refrigerator and sink looks fantastic. Elsewhere, though? Not really noticeable, aside from the game being darker overall and a bit more atmospheric. That, however, could have been done in non-HDR mode, because it looks that way in the demo on PC.

Hmm, I see. Thanks for the info! Should I then still cap it to 30 in-game, or leave it on "Variable", when I've set V-Sync to Adaptive (Half-Refresh Rate) in the Nvidia settings?

Spooky Scary Skeleton

Member

The game is borderline unplayable on my buddy's GTX 970 PC.

Turn off Shadow Cache

Set textures to medium or high

Set reflections to variable

Dynamic Lighting is also a culprit, but when turned off you lose some of the atmosphere

Set AO to SSAO variable

That kinda fixed it for me!

nkarafo

Member

Game should run like a dream on max settings on a 970. He shouldn't have to lower anything to medium.

lordfuzzybutt

Member

Game should run like a dream on max settings on a 970. He shouldn't have to lower anything to medium.

Max settings? How so? The 970 is not a magic card, having this line of thought is absurd.

Game should run like a dream on max settings on a 970. He shouldn't have to lower anything to medium.

Yeah, well... shoulda, coulda, woulda, unfortunately. Most likely a VRAM issue.

Intro and demo run like a dream but once you reach a certain point...