Aceofspades

Banned

Do Sony or MS have to opt for APU designs to maintain BC? Can't they use separate AMD CPU and GPU combos?

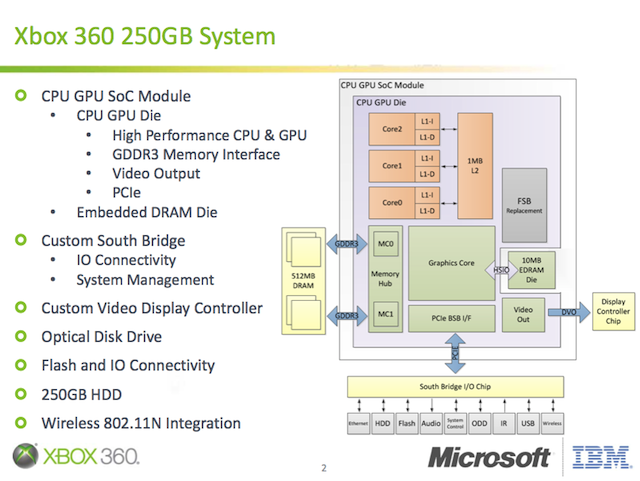

Unclear... Remember when Microsoft made the 360 Slim as a single chip, they actually had to artificially nerf the latency between the two to ensure 100% compatibility? Even with a high-bandwidth bridge between the two, it's possible the latency would cause some edge-case issues.

Though this also depends on how low-level vs. portable GNMX and DX for Xbox are.

Wasn't there an inherent performance advantage of having CPU and GPU on the same die?

But the 360 Slim wasn't even on the same die, afaik.

Again I have no insight into how portable GNMX

GNMX is the potato higher-level API though; GNM is where it's at for most AAA, I would think.

They called it the CGPU before APU was the hawt term.

Damn, I just googled it, I'm having the biggest case of Mandela effect.

I remember the forum discussions and articles saying that it combined everything into a single package but the CPU and GPU retained individual dies.

There is no mandatory need for pure hardware BC. We are talking x86 to x86, so the hardware can easily be virtualized, whether by APU or CPU+GPU (assuming some sort of shared memory pool for the latter, otherwise things get more complex).

Unclear... Remember when Microsoft made the 360 Slim as a single chip, they actually had to artificially increase the latency between the two to ensure 100% compatibility?

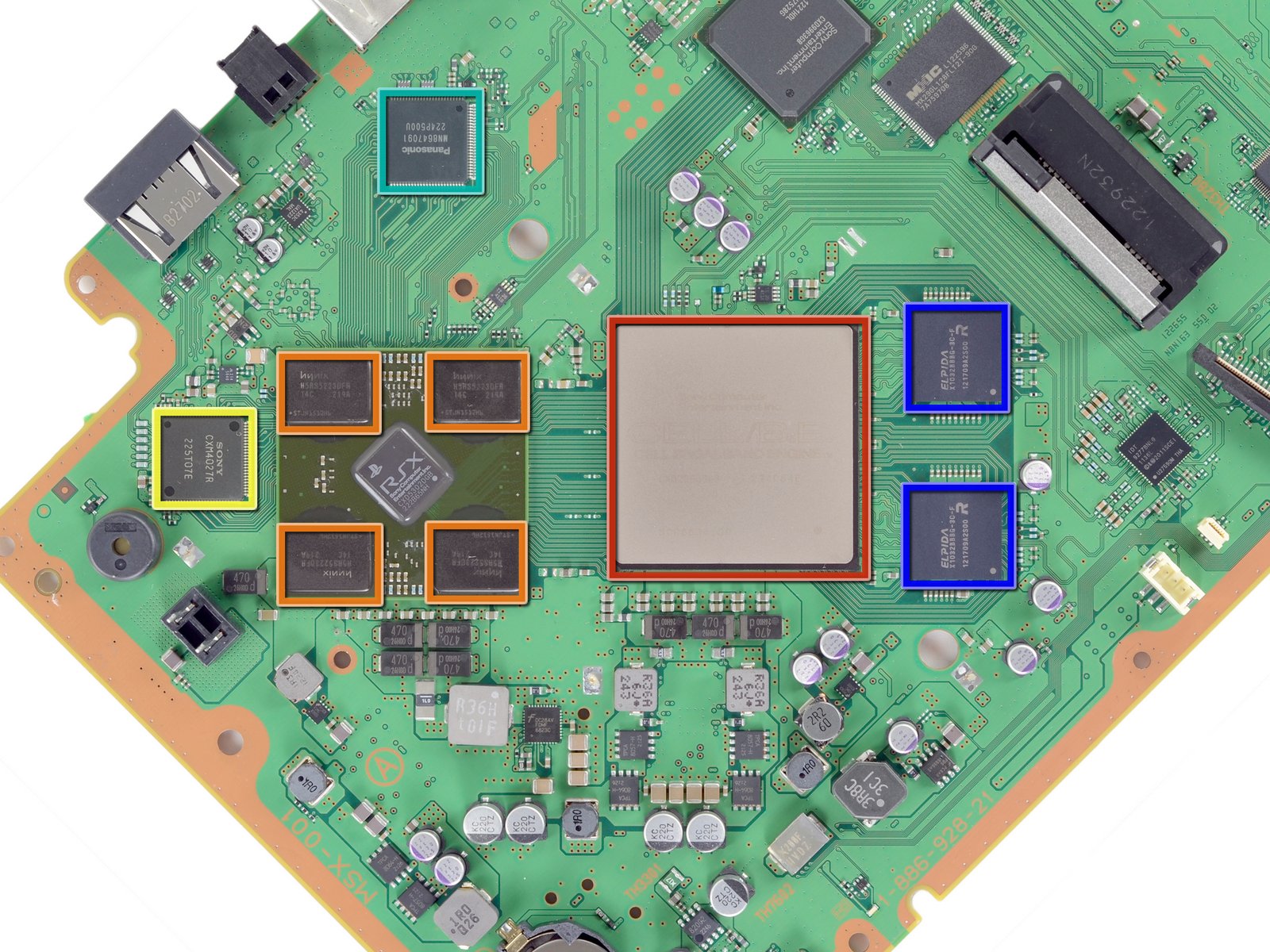

Sony had also combined Cell and RSX into one chip in the latest PS3 models to cut costs and improve thermals. It's one hell of an engineering achievement given the complexity of Cell and the memory system of the PS3.

An ultra-high-speed SSD is the key to our next generation. Our vision is to make loading screens a thing of the past, enabling creators to build new and unique gameplay experiences.

Playing Days Gone I welcome this.

Sony: An Ultra High-Speed SSD Is The Key to Our Next-Gen, Making Loading Screens a Thing of the Past

A Sony spokesperson shared a new statement on the PS5, stating that the ultra high-speed SSD is the key to the next-generation hardware.

wccftech.com

They did? This was the Super Slim; also, I remember the CPU and GPU ended up on offset fab generations.

I remember reading it somewhere in 2012, not sure if it's combining Cell and RSX or shrinking them to a smaller node.

PlayStation 3 technical specifications - Wikipedia

en.wikipedia.org

Damn! They are hyping this SSD thing really hard. Maybe the rumors about Sony getting a *very* good HBM deal were actually referring to NAND storage? Zen 2 + PCIe 4.0 will bring insane speeds... if Sony somehow managed to get SSD goodness for a great price...

The amount they are pushing "making load screens a thing of the past" is making the hybrid solution seem less likely imo. If they had a rust drive and a flash storage part that just pulled your most used games in, loading a game from the hard drive part would be even more painfully slow than already with a generation designed for flash from the ground up. But then I still have to wonder about external drive support if that was the case.

Then again, again, we spent most of the 8th gen's life without external drive support on the PS4.

I'm curious how Sony is planning on "making loading screens a thing of the past"?

I know they are talking about some very fast SSD and that will definitely help, but faster loading and no loading are different, and there's also a point where faster SSDs don't seem to help anymore (for game loading speeds).

On PC, moving from HDD to SSD makes a huge difference in loading times. But for SOME REASON moving from a SATA SSD to NVMe makes almost no difference at all. You basically save ONE SECOND despite the NVMe drive being 5x faster than the fastest SATA-based SSD.

There's another bottleneck in the system somewhere, but I don't know where it is. Maybe the move from PCIe 3.0 to 4.0 will improve this situation?
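One possible explanation for the plateau described above, sketched under loudly stated assumptions: if part of a load is CPU work (decompression, parsing, shader setup) that does not shrink with drive speed, only the read portion benefits from faster storage. Every number below (asset size, CPU seconds, drive throughputs) is hypothetical and chosen only to show the shape of the effect, not measured from any game.

```python
# Toy model: load time = time to read the data + fixed CPU-side work.
# All figures are hypothetical illustrations, not benchmarks.

def load_time_s(data_mb: float, drive_mb_per_s: float, cpu_s: float) -> float:
    """Seconds to load: sequential read time plus CPU work the drive cannot speed up."""
    return data_mb / drive_mb_per_s + cpu_s

DATA_MB = 1500.0  # assumed amount of data read during one load
CPU_S = 8.0       # assumed CPU-bound work (decompression, setup)

# Assumed sequential throughputs in MB/s; the NVMe entry is simply 5x the SATA one.
DRIVES_MB_PER_S = {"HDD": 100.0, "SATA SSD": 550.0, "NVMe (5x SATA)": 2750.0}

times = {
    name: load_time_s(DATA_MB, speed, CPU_S)
    for name, speed in DRIVES_MB_PER_S.items()
}

for name, seconds in times.items():
    print(f"{name:>15}: {seconds:5.1f} s")
```

In this toy setup the HDD-to-SATA step saves far more time than the SATA-to-NVMe step, because the fixed CPU term starts to dominate once the read is fast.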

Another interesting part is the example Cerny gave with being able to move faster in games as the world can build out quicker which to me hints at frame rates also getting a decent boost.

Maybe. I thought he was talking about asset streaming speed in open worlds. This can be a problem on an old HDD. If anything hints at faster framerates it's the HUGE CPU upgrade. Sure not all games are CPU bound, but if there is such a thing as an average framerate for this gen I think it's definitely going to be higher next gen.

Well, God of War didn't have a single loading screen during the game. And it was linear, but to have something remotely similar in an open-world game is amazing.

Wouldn't frame rate be a potential bottleneck to your movement?

Everyone always mentions needing 60fps for twitch shooters, and both examples require fast (smooth) movement, putting them in the same ballpark.

The loading is masked.

When you're travelling between realms everything slows down, so that when you exit the door the new area exists.

Also GoW has loading when you boot up the game. Sony is talking about removing all of that.

I'm talking loading screens. Yes, it was masked and there might have been a minor slowdown, but there wasn't a single loading screen during the game.

On PC, games that stream a lot of assets are helped a ton by SSDs.

That's great. I hope it isn't proprietary though, because then replacing it would mean Sony selling us expensive SSDs. Or maybe, on the other hand, they sell like hotcakes and become widespread, a success like Blu-ray that everyone wants.

But that's buying a single graphics card from a retailer. There are so many points in that chain where entities are taking a cut and thereby elevating the price.

How much would those Radeon VIIs cost if you bought 20 million (or more) of them, directly from AMD, and paid for them upfront? I can tell you right now it's going to be a lot less than $700. Probably even less if there's no sale-or-return clause in the contract.

Just to illustrate, a teardown of the iPhone XS Max (~$1250) revealed that the components cost ~$450. What's the cost if you bought a few million of those components in bulk? You could maybe halve the total bill if you include things like tax deductions for businesses.

Because Sony needs millions of GPUs, they probably get a very good price from AMD, and on top of that they only need to buy the chip from AMD, without the PCB and memory.

1. If the consoles actually have ray tracing hardware, it will be pretty limited. Mid gen refreshes could increase the ray tracing power.

2. The things I have mentioned aren't that creative because I am a stupid person, but devs are smart and talented and they will find ways to make things possible that weren't before, or only in limited form. If you break it down, yeah, it is just loading assets faster, but by an order of magnitude never before seen. Your comparison to PC doesn't work because basically every game is designed around the constraints of the 5400rpm hard drives that spin in the consoles, but if ALL next-gen consoles have PCIe 4.0 NVMe drives, the engines will adapt to that and allow for new kinds of magic. What PCs with SSDs do is just load games that are designed around HDDs faster, and that's it; they aren't really utilizing it. No game is built around calling 5 gigs of assets in a second; next gen, they will.

Look at it this way, there is a reason why ALL games must be installed to the HDD of modern consoles even though this means that it takes much longer to get into the game when you start it the first time. Having faster access speeds gives devs more freedoms to create cool stuff. Otherwise games would still draw assets from the disc itself.

The PlayStation 4's Blu-ray drive is rated at 6x, with a maximum read speed of about 27 MB/s. 5400 RPM drives offer an average of 100 MB/s read. Some users here suggested that the read speed of a PCIe 4.0 NVMe drive might be up to 8 GB/s. That is pretty insane.

https://www.pcgamesn.com/ssd-pcie-4-amd-3rd-gen-ryzen

So if my math is correct we might be looking at a read speed increase in next-gen consoles of up to 80, read E-I-G-H-T-Y, times faster. According to Cerny it's faster than anything on PC. The fastest on PC are 4 GB/s, so we will at least get 5 GB/s, which is still 50 fucking times faster than the HDD in the PS4.
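The ratios above can be checked with a few lines of arithmetic. This is only a sketch using the figures quoted in the post; it assumes the "gb/s" numbers mean gigabytes per second and takes 1 GB as 1000 MB. The 8 GB/s and 5 GB/s values are the thread's rumor and guess, not confirmed specs.

```python
# Sequential read speeds quoted in the post, in MB/s (1 GB taken as 1000 MB).
BLU_RAY_6X = 27       # PS4 Blu-ray drive, max read
HDD_5400RPM = 100     # average 5400 RPM hard drive read
NVME_RUMORED = 8000   # "up to 8 GB/s" suggested by other users (rumor)
NVME_GUESS = 5000     # the post's "at least 5 GB/s" guess

def speedup(new_mb_s: float, old_mb_s: float) -> float:
    """How many times faster the new drive reads than the old one."""
    return new_mb_s / old_mb_s

def seconds_to_read(size_mb: float, speed_mb_s: float) -> float:
    """Time to read size_mb sequentially at speed_mb_s."""
    return size_mb / speed_mb_s

print(speedup(NVME_RUMORED, HDD_5400RPM))  # rumored NVMe vs PS4 HDD
print(speedup(NVME_GUESS, HDD_5400RPM))    # guessed NVMe vs PS4 HDD
print(speedup(HDD_5400RPM, BLU_RAY_6X))    # why games install to the HDD
print(seconds_to_read(5000, HDD_5400RPM))  # "5 gigs of assets" from the HDD
print(seconds_to_read(5000, NVME_GUESS))   # the same 5 GB from the guessed SSD
```

These are raw sequential-read ratios only; actual load times also depend on seek patterns and on whatever work the CPU has to do with the data.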

Post copied from Reset:

Isn't PCIe 5.0 finalized at this point?

Yes, but it is for servers, and won't be on desktop for many years.

Would the PS5 have something like this in it?

Radeon Instinct™ MI25 Accelerator

Superior FP16 and FP32 performance for machine intelligence and deep learning.

Compute Units: 64

Stream Processors: 4096

Peak Half Precision (FP16) Performance: 24.6 TFLOPs

Peak Single Precision (FP32) Performance: 12.29 TFLOPs

Peak Double Precision (FP64) Performance: 768 GFLOPs

Memory Size: 16 GB

Memory Type (GPU): HBM2

Memory Bandwidth: 484 GB/s
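As a rough sanity check on the spec list above, the TFLOPS figures follow from the usual peak-throughput estimate of two FP32 operations (one fused multiply-add) per stream processor per clock. The 1.5 GHz clock is an assumption that is not in the list; the 2x FP16 and 1/16 FP64 rates are likewise assumed here because they reproduce the quoted numbers.

```python
# Peak-throughput estimate: 2 FLOPs (one FMA) per stream processor per clock.
STREAM_PROCESSORS = 4096  # from the spec list
CLOCK_HZ = 1.5e9          # ASSUMED peak engine clock; not given in the list

def peak_tflops(stream_processors: int, clock_hz: float, flops_per_clock: float = 2.0) -> float:
    """Theoretical peak in TFLOPS for a given per-SP rate."""
    return stream_processors * clock_hz * flops_per_clock / 1e12

fp32 = peak_tflops(STREAM_PROCESSORS, CLOCK_HZ)              # one FMA per clock
fp16 = peak_tflops(STREAM_PROCESSORS, CLOCK_HZ, 4.0)         # assumed 2x packed rate
fp64_gflops = peak_tflops(STREAM_PROCESSORS, CLOCK_HZ, 2.0 / 16) * 1000  # assumed 1/16 rate

print(f"FP32: {fp32:.2f} TFLOPS")
print(f"FP16: {fp16:.1f} TFLOPS")
print(f"FP64: {fp64_gflops:.0f} GFLOPS")
```

Under those assumptions the three results line up with the 12.29 / 24.6 / 768 figures listed, which is where the "~12TF" comes from.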

Edit: nevermind, that's ~12TF.

Yea. These Instinct cards are made for servers, but I was curious because the specs match the rumors we see. It's on 14nm though.

800GB/s

Damn, imagine a Navi version of this for PS5

56 CU (4 disabled)

12.5TF

24GB GDDR6 384bit bus

800GB/s

Believe
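For a sense of what that wishlist would demand, here is a small sketch. It assumes 64 stream processors per CU (the same ratio as the 64 CU / 4096 SP Instinct card listed earlier) and two FP32 operations per SP per clock; the 14 Gbps comparison figure is an assumed GDDR6 speed grade, not something from the thread.

```python
# Back-of-the-envelope numbers for the wished-for 56 CU / 12.5 TF / 384-bit / 800 GB/s part.
SP_PER_CU = 64  # ASSUMED, matching 4096 SPs / 64 CUs on the MI25 list

def required_clock_ghz(tflops: float, compute_units: int) -> float:
    """GPU clock needed to hit a TFLOPS target at 2 FLOPs per SP per clock."""
    return tflops * 1e12 / (2 * compute_units * SP_PER_CU) / 1e9

def bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Memory bandwidth in GB/s for a bus width and per-pin data rate."""
    return bus_width_bits * gbps_per_pin / 8

def required_pin_rate_gbps(target_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate needed to reach a bandwidth target."""
    return target_gb_s * 8 / bus_width_bits

clock = required_clock_ghz(12.5, 56)
pin_rate = required_pin_rate_gbps(800, 384)

print(f"Clock for 12.5 TF on 56 CUs: {clock:.2f} GHz")
print(f"Pin rate for 800 GB/s on 384-bit: {pin_rate:.1f} Gbps")
print(f"384-bit at an assumed 14 Gbps: {bandwidth_gb_s(384, 14):.0f} GB/s")
```

So the 800 GB/s figure would need memory running well above the assumed 14 Gbps grade on that bus width.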

My hopes

and dreams

HBM tech would mean less memory and less bandwidth

The opposite of what you want lol

People just think HBM is hip and cool, yo.

What's GDDR6 bandwidth?

Isn't that what the H stands for?

Hip Badass Memory

No you dope.

Bandwidth is surprisingly low considering it's using 16GB HBM2. Any ideas about the big drop-off compared to Radeon VII?
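One likely factor is simply the number of HBM2 stacks, since each stack adds its own 1024-bit interface. The sketch below assumes the MI25 uses two stacks and the Radeon VII four stacks at about 2.0 Gbps per pin; those counts and the pin rate are assumptions taken from memory of public spec sheets, not from this thread.

```python
# HBM2 bandwidth scales with the number of stacks: each stack is 1024 bits wide.
BITS_PER_STACK = 1024

def hbm2_bandwidth_gb_s(stacks: int, gbps_per_pin: float) -> float:
    """Total bandwidth in GB/s for a number of HBM2 stacks at a per-pin rate."""
    return stacks * BITS_PER_STACK * gbps_per_pin / 8

def implied_pin_rate_gbps(bandwidth_gb_s: float, stacks: int) -> float:
    """Per-pin rate implied by a quoted bandwidth and stack count."""
    return bandwidth_gb_s * 8 / (stacks * BITS_PER_STACK)

MI25_STACKS = 2        # ASSUMED: 2 x 8 GB stacks
RADEON_VII_STACKS = 4  # ASSUMED: 4 x 4 GB stacks

mi25_pin_rate = implied_pin_rate_gbps(484, MI25_STACKS)
radeon_vii_bw = hbm2_bandwidth_gb_s(RADEON_VII_STACKS, 2.0)

print(f"MI25 implied pin rate: {mi25_pin_rate:.2f} Gbps")
print(f"Radeon VII at 4 stacks, 2.0 Gbps: {radeon_vii_bw:.0f} GB/s")
```

Under these assumptions the same 16 GB capacity gives roughly half the bandwidth when it is spread over two stacks instead of four.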