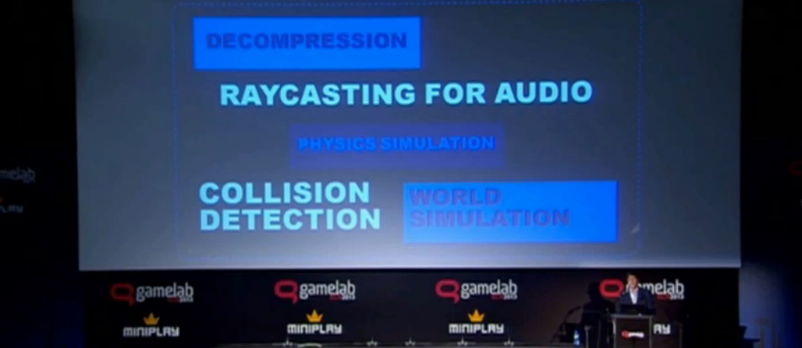

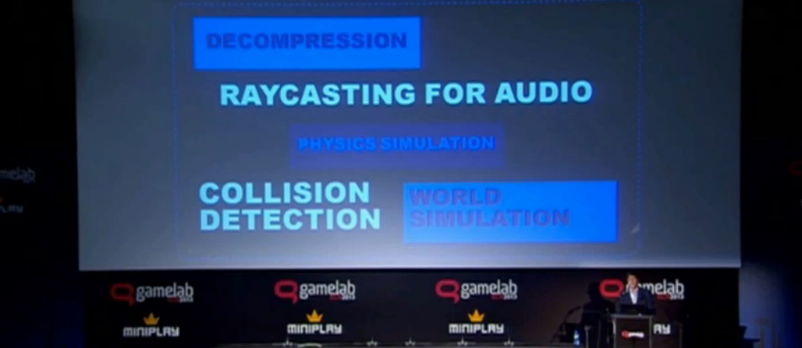

Mark Cerny speaking on stage about the PS4

- Thread starter Roxas

- Start date

I agree with a lot Sony is doing but software for the launch lineup is not one. I think they blew too much on PS3 this year and should have saved some games for PS4. The Last of Us or GT6 would have been great at launch. Thought for sure we would get at least a cross platform announcement.

But the entire launch lineup hasn't even been revealed yet.

Yes, I did listen to what he said, and yeah, when he was describing the two methods they considered for bandwidth he was speaking to graphics capabilities. Were you listening? Also, no, I was not at E3, but in terms of what I saw I was not overly impressed. Not so much visually, but from a performance side many of the games were lacking, dropping below 30fps frequently.

Then I guess it's a good thing most of those games are damn near alpha, and most devs were shocked in February to discover the PS4 would be getting 8GB GDDR5 RAM, since they had been coding for much less.

Superior no hiss version is up I see.

Please link?

LiquidMetal14

hide your water-based mammals

I agree with a lot Sony is doing but software for the launch lineup is not one. I think they blew too much on PS3 this year and should have saved some games for PS4. The Last of Us or GT6 would have been great at launch. Thought for sure we would get at least a cross platform announcement.

I agree with you here that their launch lineup for the PS4 isn't the greatest in terms of exclusives either, but I guess there is still Gamescom and TGS for them to reveal a couple more games for that time period.

Holy god, it's never wise to judge a game's technical performance based on E3 demos. I was at E3 in 2001 and Halo was practically a slideshow. And Kameo looked smooth and beautiful on the Gamecube! And two years ago Prey 2 was my Game of the Show!

E3 demos are great for giving you a rough indication of how the game will play and what the developer is going for, but that's really about it.

But the entire launch lineup hasn't even been revealed yet.

Why the fuck can't people understand this... Sony didn't reveal all their games because they knew they only needed the DRM and price card to win E3. Wait for Gamescom and TGS... Jesus...

Yes, I did listen to what he said, and yeah, when he was describing the two methods they considered for bandwidth he was speaking to graphics capabilities. Were you listening? Also, no, I was not at E3, but in terms of what I saw I was not overly impressed. Not so much visually, but from a performance side many of the games were lacking, dropping below 30fps frequently.

Your dissatisfaction with the design choices, priorities and progress made by developers has little to do with the philosophies Cerny discusses in the creation of the PS4. So GG are going with 30fps and Drive Club ain't performing well 4-5 months out from launch. Don't pin that on Cerny. There are already other threads for voicing premature concerns.

Gaming Nostalgia

Banned

Then I guess it's a good thing most of those games are damn near alpha, and most devs were shocked in February to discover the PS4 would be getting 8GB GDDR5 RAM, since they had been coding for much less.

This is my hope. I just keep going back to Knack and scratching my head though.

Can Crusher

Banned

I wonder what kind of embedded ram Sony was thinking about using. The esram in XboxOne has a 10th of the speed.

Gaming Nostalgia

Banned

Your dissatisfaction with the design choices, priorities and progress made by developers has little to do with the philosophies Cerny discusses in the creation of the PS4. So GG are going with 30fps and Drive Club ain't performing well 4-5 months out from launch. Don't pin that on Cerny. There are already other threads for voicing premature concerns.

Definitely not trying to pin anything on Cerny. I'm just more confused than anything considering this amazing "tool" they've been given and the progress and design choices that have been made.

How long has shu been in charge of scej?

He's not head of SCEJ, he's head of Worldwide Studios which encompasses studios from Japan, Europe and America.

Almost done with the video. It really is an interesting presentation.

So far the most interesting tidbit, if I understand correctly, is that Sony engineers used Cerny, a quantum mechanics student at Berkeley by age 18, to benchmark broad programmer competence (in the States?) during the very early stages of PS3 development.

GribbleGrunger

Dreams in Digital

Definitely not trying to pin anything on Cerny. I'm just more confused than anything considering this amazing "tool" they've been given and the progress and design choices that have been made.

I think you are taking for granted the fact that Knack can grow bigger and that every single piece that makes him bigger has its own physics. And don't forget, all those pieces have to work together perfectly to create a realistic animation. That's for the tech heads though. As Cerny said himself, he wanted to make sure that the family had something to play too, and let's face it, Nintendo prove that the family don't really care about impressive tech, just FUN.

PapaJustify

Member

Legendary.

GANGSTERKILLER

Member

BOW BEFORE ME http://youtu.be/ns21_nMzVFM?t=2m35s

Thanks! I've watched this whole video and my respect for Mark Cerny has only grown. One of the best gaming related interviews I've ever seen.

What a brilliant passionate human being he is! It really gives me a good feeling knowing Mark Cerny is THE man behind the PS4.

I trust him completely. I hope he never leaves Sony.

Awesome. Thanks!

babyghost853

Member

eDRAM wouldn't have meant a more expensive APU at all, nor would it have implied a weaker one. The point is that you cannot currently manufacture eDRAM on a 28nm process node, as nobody does it. The smallest node for eDRAM currently is 32nm, which would have implied a daughter die like the 360 setup, perhaps with the GPU ROPs on the daughter die also.

eDRAM at 32MB would have meant a much smaller die area than eSRAM at 32nm. It would have allowed Sony to go up to 64 or possibly even 128MB of eDRAM on the daughter die. It would have been far superior performance-wise to MS' current XB1 setup, provided they had double or more the embedded memory and internal bandwidth to the ROPs in the terabits-per-second range. It just would have been more expensive.

The thing with eDRAM is that it requires special manufacturing steps and thus cannot be fabbed at every major fab. This ends up making it more expensive in the long run, as you'd lose any ability to shop around and get the best deal at the major foundries. MS chose eSRAM because they wanted a solution that would be integrated on die from the outset. That solution makes your APU more expensive to start with, as it will invariably affect yields (i.e. on-die, high-transistor-density eSRAM adds another level of complexity, making your APU even more prone to defects), but it does allow you to cost-reduce your embedded memory alongside your main APU as you transition from one manufacturing process node to the next.

The main problem with a hypothetical PS4 that used eDRAM and a big main pool of DDR3 is that it would have meant a more complex system with a greater potential for performance bottlenecks. There would be no real way to get around the slow main memory bandwidth, which could tank performance for any application that cannot find its data in the finite pools of caches and the embedded memory.

I think the GDDR5 solution Sony chose was a good, solid and wise choice. And I think it is a system that will pay off for them in the long term, more so than any system Sony has designed to date.

Small correction, the hypothetical PS4 would have used GDDR5 + eDRAM. GDDR5 would have been 128bit bus and not 256bit, as seen here:

Lol at everyone trying to turn it into a dig at MS, it's not.

This setup would have been more complicated to program for. They just chose to go with the easier-to-program-for model, and added a few things that developers would need to explore as time moves on.

They won't. I've literally lost all faith in the American buying public, especially gamers. I'm calling it: Xbone wins NA by a wide margin.

But I'm doing my part. I just preordered Knack on Amazon.

I hope you are not right, but to be realistic I think you have your point.

Anyway I've already done my part

phosphor112

Banned

I wonder what kind of embedded ram Sony was thinking about using. The esram in XboxOne has a 10th of the speed.

They specifically said eDRAM, and it would have been cheaper too, apparently.

Hi there,

Have you accepted Cerny as your lord and savior? I have some literature I'd like to share with you...

Sincerely,

NeoGAF

Sony putting people like him in charge is one of the primary reasons I'm so incredibly pumped for the PS4. I had gone Xbox and then Xbox 360 over this past decade, but the general direction Microsoft has been heading was making me feel super depressed about gaming in general. What Sony is doing is bringing back that old feeling of "I can't wait to see what's coming next". Instead of "oh god, what now?".

Henry Jones Jr

Member

That was such a fantastic presentation. I couldn't stop listening.

Same here. Would love to get a recording of that 8 hour developer presentation he was talking about.

Ingueferroque

Banned

He's the best spokesperson Sony could have at this point.

Just a shame Knack doesn't seem to be all that.

God damn. Cerny almost comes off as arrogant at first, but it's clear he has a fairly high IQ and backs up his calm, yet ambitious, personality.

A lil bit, but then you look at what he's done and he can kinda go there as much as he wants.

DidntKnowJack

Member

Wow that was interesting as hell. I was riveted. And it's kind of refreshing to see a company be so transparent and admit past mistakes.

kittens

Unconfirmed Member

Wow that was interesting as hell. I was riveted. And it's kind of refreshing to see a company be so transparent and admit past mistakes.

Right? I don't think I've ever seen any of the Big 3 be so candid about hardware development.

FantasticMrFoxdie

Member

Whoa. That was a fascinating watch. Cerny is a BEAST.

dream431ca

Banned

Watching this now (15 mins in). Loving it. Cerny is a great speaker.

After watching this video, you know that the PS4 was made 4GamersByGamers

No it was made for Game Developers by Game Developers

Wishmaster92

Member

Small correction, the hypothetical PS4 would have used GDDR5 + eDRAM. GDDR5 would have been 128bit bus and not 256bit, as seen here:

Lol at everyone trying to turn it into a dig at MS, it's not.

This setup would have been more complicated to program for. They just chose to go with the easier-to-program-for model, and added a few things that developers would need to explore as time moves on.

I know it's stupid to say, but I would have preferred the eDRAM setup. It's a challenge, but so what? Not as challenging as the Cell, I bet, and it would have future-proofed the system that much more. But I can't deny that in the end the PS4 is a beautifully architected system.

He's not head of SCEJ, he's head of Worldwide Studios which encompasses studios from Japan, Europe and America.

oh

so Kawano is to blame for scej then?

Small correction, the hypothetical PS4 would have used GDDR5 + eDRAM. GDDR5 would have been 128bit bus and not 256bit, as seen here:

Lol at everyone trying to turn it into a dig at MS, it's not.

This setup would have been more complicated to program for. They just chose to go with the easier-to-program-for model, and added a few things that developers would need to explore as time moves on.

MS is using eSRAM, right? Is it the same as eDRAM? Should Sony have chosen the eSRAM setup for supercomputer power?

lol

SolidSnakex

Member

oh

so Kawano is to blame for scej then?

His LinkedIn shows that he became president in 2010. And since that time SCEJ has started to turn things around.

NullPointer

Member

What Sony is doing is bringing back that old feeling of "I can't wait to see what's coming next". Instead of "oh god, what now?".

Couldn't agree more.

Interesting stories. Perhaps in hindsight they chose the wrong guy to test out the Cell when building the PS3. The average programmer may have raised concerns about the complexity issue.

That's what I thought too. Interesting that Cerny didn't think of this at the time. Goes to show that sometimes even the brightest overlook the bigger picture.