Dictator93

Member

it's not just used for higher resolutions and AA @60fps

It could be quite useful for bandwidth-heavy alpha effects and such.

To be frank, I'm not too impressed by Tonga's compression algorithms. Either the card is really unbalanced or something else is up.

290 series cards don't have the bandwidth compression stuff from Tonga, right? Meanwhile, Maxwell 200 series cards have similar bandwidth compression tech. This leads to the situation where at lower resolutions, where bandwidth matters less, that 50% bandwidth advantage is less pronounced and negated by the compression tech advantage (something equating to 35% more "bandwidth", I believe).

Maxwell faring so well with less bandwidth has a lot to do with that compression tech. Even then, the 290 series fares better and scales better at higher resolutions in spite of the bandwidth compression on Maxwell.
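To put rough numbers on that, here is a quick sketch of the arithmetic. It uses only the normalised figures quoted above (a 50% raw bandwidth advantage for the 290 series, roughly 35% effective gain from Maxwell's compression). Treating compression as a flat multiplier is an assumption made for illustration; real gains depend on the workload, and the function name is hypothetical.

```python
def effective_bandwidth(raw: float, compression_gain: float = 0.0) -> float:
    """Scale raw bandwidth by a flat compression gain (0.35 means +35%).

    A flat multiplier is an illustrative assumption, not how the
    hardware actually behaves across workloads.
    """
    return raw * (1.0 + compression_gain)


# Normalised figures from the post above, not measured values.
maxwell_raw = 1.0     # baseline
hawaii_raw = 1.5      # the "50% bandwidth advantage"
maxwell_gain = 0.35   # the "35% more bandwidth" from compression

maxwell_eff = effective_bandwidth(maxwell_raw, maxwell_gain)
hawaii_eff = effective_bandwidth(hawaii_raw)  # no compression on the 290 series

# What is left of the 50% advantage once compression is counted.
remaining_advantage = hawaii_eff / maxwell_eff - 1.0
print(f"Remaining advantage: {remaining_advantage:.1%}")
```

Under those assumptions the 50% raw lead shrinks to roughly 11%, which fits with the gap only showing up at higher resolutions.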

Now imagine a card with much higher shading power, bandwidth compression tech from Tonga (i.e., Maxwell wouldn't be the only one to have this trump card for its bandwidth deficiencies any longer), as well as HBM with a huge 1024-bit interface. Maxwell could quite conceivably be left in the proverbial dust.

I never said anything about performing worse. Just that its advantage isn't exactly that big of an advantage if its best use cases are for situations where its limited VRAM becomes an issue anyways.

So you're really thinking about buying a 290X 8 gig then?

http://www.sapphiretech.com/presentation/product/?cid=1&gid=3&sgid=1227&pid=2394&psn=&lid=1&leg=0

Because your key theoretical point rests on having a much faster 4 gig card performing worse than your effectively 3.5gig card at the higher resolutions?

That reminds me, does AMD's Shadowplay alternative (I always forget what it's called) support desktop capture now?

I hope no one on GAF is running 4 GPUs of any kind. I wouldn't wish that kind of latency, compatibility issues and frame pacing on anyone.

It does, but in my experience it's not very good. The overlay causes some performance problems when recording.

The Gaming Evolved app is Raptr. It supports DVR functionality now, though I've never used it. But I'm sure it supports desktop capture.

In every single thread

At this point one has to wonder why those comments aren't a bannable offence when they're mostly used by Nvidia fanboys still hung up on how the drivers were 10 years ago.

Anyway, what's with the 4GB of RAM? With all the talk of "future proofing" around here, you'd think there would be a little more concern with the fact that these cards won't last any longer than the last generation of cards. Yeah, they'll be faster, right up until you hit the 4GB mark and they choke just like every other card. I didn't upgrade to a 980 because it's only 4GB, and I'm sure as hell not going to spend even more money to do it in the middle of 2015. Either this is a huge mistake on AMD's part or there will be more RAM on these cards and we just don't know it.

There is one issue the 390X will have to deal with from HBM though. First-gen HBM tech limits the card's potential (HBM) VRAM size to 4 GB. For something as theoretically powerful and well-equipped for high-resolution gaming as the 390X, that 4 GB limitation might be particularly painful in contrast to "affordable" GM200's likely 6 GB (or whatever portion of it cut-down GM200s will actually be able to access at full speed...).

I hope AMD implement secondary GDDR5 memory controllers for some additional VRAM or something, but it seems unlikely.

Because in many ways people are telling the truth. We have actual proof in the DX12 thread, you guys just don't like to see it. Why would you ban people for telling it like it is?

http://www.neogaf.com/forum/showthread.php?t=987116&highlight=dx12

TechPowerUp:

...1024-bit wide HBM memory interface, offering 640 GB/s of memory bandwidth...

Could you link to said proof? I browsed a few pages of that thread and all it's boiled down to is X1 talk at this point.

This is a timely article, especially since we were just discussing here on GAF a few days ago how much worse AMD DX11 drivers are in terms of CPU utilization compared to NV DX11 drivers. There was no hard data out there, but, at least in my opinion, lots of circumstantial evidence pointing towards "a lot worse". Now it's rather rigorously confirmed.

Because as Serandur said:

I do agree 4gb isn't enough.

Especially as the high end has such huge bandwidth and GPU power, it would be foolish not to downsample many games.

Where are these news sites getting this 640GB/s number? Hynix is currently only providing chips with a maximum of 128GB/s bandwidth, and four of those only add up to 512GB/s.
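The mismatch is easy to check. A minimal sketch, using only the figures quoted in this thread (128 GB/s per Hynix stack, four stacks, and the 640 GB/s reported by TechPowerUp); the four-stack count is the poster's assumption, not a confirmed spec:

```python
PER_STACK_GBPS = 128   # GB/s per stack, figure quoted in the post
STACKS = 4             # stack count assumed in the post, not confirmed
REPORTED_TOTAL = 640   # GB/s, as quoted from TechPowerUp

# Aggregate bandwidth if every stack runs at the quoted maximum.
total = PER_STACK_GBPS * STACKS

# Per-stack bandwidth that the reported total would require.
needed_per_stack = REPORTED_TOTAL / STACKS

print(f"Four stacks at {PER_STACK_GBPS} GB/s: {total} GB/s")
print(f"Per stack needed for {REPORTED_TOTAL} GB/s: {needed_per_stack:.0f} GB/s")
```

So the reported figure would need either 160 GB/s per stack or a fifth stack, neither of which matches what is described above.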

But yes, an 8GB 290X is a potential option, but maybe a bit too power hungry for my PSU.

Thanks for the answers. By "it does", do you mean it does support desktop shadow recording?

I wouldn't bother at all. Hunt down some benchmarks and you'll find a 970 performs to within 5% of an 8GB 290X at 3840x2160 and the 970 is on a par or faster at lower resolutions.

I wonder where the gaffer "artist" has gone. I remember him being a staunch supporter of AMD in my lurking days.

Such news would probably bring a smile to his face.

AMD seems to be back with a vengeance and the GPU landscape will only benefit from that.

Artist trolled too much for his own good. Pretty sure he's perma'd

Makes sense. At some point I put him on my ignore list. Whenever I do that I usually see a few hidden posts here and there from those people, and then at some point they just stop.

Hmmm, wachie is on my ignore list now...

Shame. Oh well, wachie will take his place.

Because people are never talking about "poor" CPU optimization when they discuss AMD drivers; they're talking about graphical performance, graphical glitches, "missing features" (supersampling, PhysX, you name it) or game crashes.

Those (outside of PhysX which Nvidia just bought out anyway) are mostly a myth.

Probably me but why does it say DDR when it's supposed to say GDDR?

anyone that values their time and patience knows AMD drivers are a joke

Only 4GB? That's so disappointing considering I recently bought a 4k monitor for 4k gaming.

This is the reason why. For now they're using the first generation of HBM, which allows 1GB stacks; generation two is rumored to be ready by next year for their 400 series. In theory they could add more stacks, but the problem would be the interposer (the thing connecting these RAM stacks to the GPU) being too big or too expensive to make. I assume more stacks also means a bigger and more complex GPU because it still needs to communicate with the RAM.

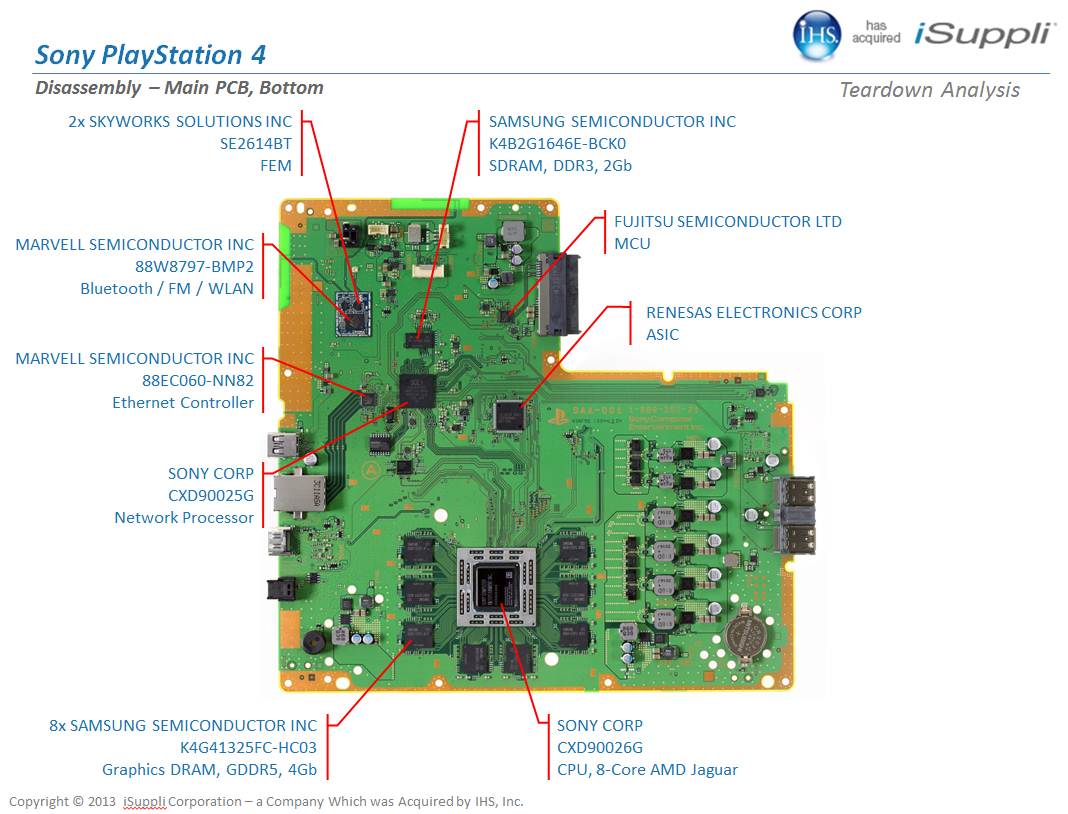

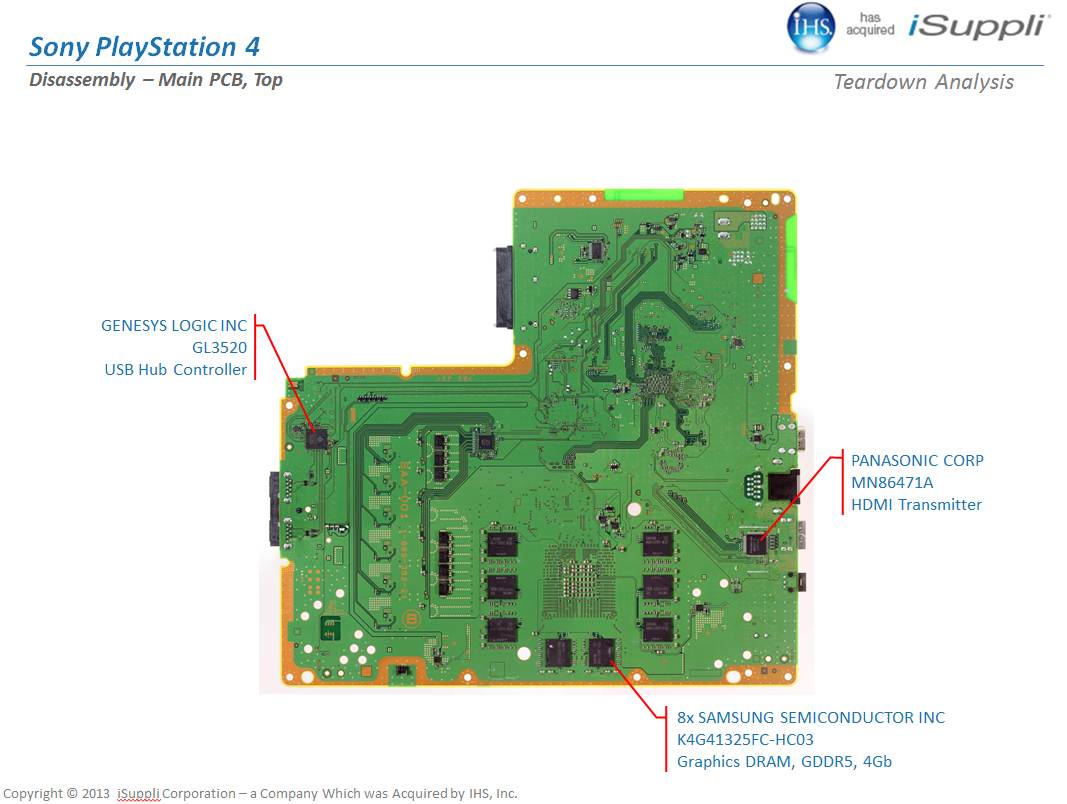
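As a rough illustration of why the capacity tops out where it does, here is a small sketch. The 1GB-per-stack figure is from the post above; the four-stack configuration is the one assumed elsewhere in this thread, and the helper name is made up for the example.

```python
import math

STACK_CAPACITY_GB = 1  # first-gen HBM stack size, per the post above
ASSUMED_STACKS = 4     # configuration assumed in this thread, not confirmed


def stacks_needed(target_gb: int, stack_gb: int = STACK_CAPACITY_GB) -> int:
    """Number of stacks required to reach a target capacity."""
    return math.ceil(target_gb / stack_gb)


capacity = ASSUMED_STACKS * STACK_CAPACITY_GB
print(f"{ASSUMED_STACKS} stacks give {capacity} GB")
print(f"8 GB would need {stacks_needed(8)} stacks")
```

Doubling the capacity with first-gen stacks means doubling the stack count, which is where the interposer size and cost problem comes in.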

If this is the case how come the PS4 got 8GB and why couldn't they use that technology for a graphics card? Also isn't there a 6GB graphics card already out by Nvidia?

Does Q2 2015 refer to the months of January, February, and March?

No, sorry. I'm an Nvidiot through and through; he is an AMD fan.

I thought you were Artist's second account. Same style on the avatars but with less trolling.

Last rumours were pointing to 290X or higher levels of TDP and water cooling. However, TDP is never a concern for the enthusiast segment.

I'd also expect these chips to not be that power efficient. Clock for clock, Nvidia GPUs currently use a lot less power. I doubt first-gen HBM will bring down the power usage that much considering it is AMD at the helm. Like Mantle, it will cover up AMD's deficiencies until its competitors have their answer.

WhatYearIsIt.jpg

I would like to upgrade to a 390, but I currently have an HD 7970 with 6 GB of VRAM. I am not sure whether or not losing 2 GB of VRAM would negatively impact my performance, or if it even matters because games don't seem to use that much VRAM at this point.

The LinkedIn profile mentioned a 300W TDP. But as we all know, TDP is not actual power consumption.

A 290X at stock didn't consume 290W, and neither did some overclocked models:

http://www.techpowerup.com/reviews/Sapphire/R9_290X_Tri-X_OC/22.html

No, it doesn't. And the Nvidia cards at peak consume more than their rated specs. Notably, some of the non-reference cards manage to keep power down when overclocked because they also cool the GPU better. Running silicon at lower temperatures reduces its leakage power consumption.

The issue here is that many benchmarks do full system power consumption measurements (such as AT). They're not entirely wrong for doing it as Nvidia cards do have lower overheads on the rest of the system even if they aren't as efficient as stated on paper.

I don't really know how Nvidia calculates its TDP, but it seems to vary from generation to generation. Maxwell cards still have impressive perf/watt, but their advertised TDPs are sometimes exceeded.