Buggy Loop


Kind of missed this, but I saw the annoying-voice dude from Two Minute Papers talking about it today.

If you can tolerate his voice

If you want Nvidia's video on it.

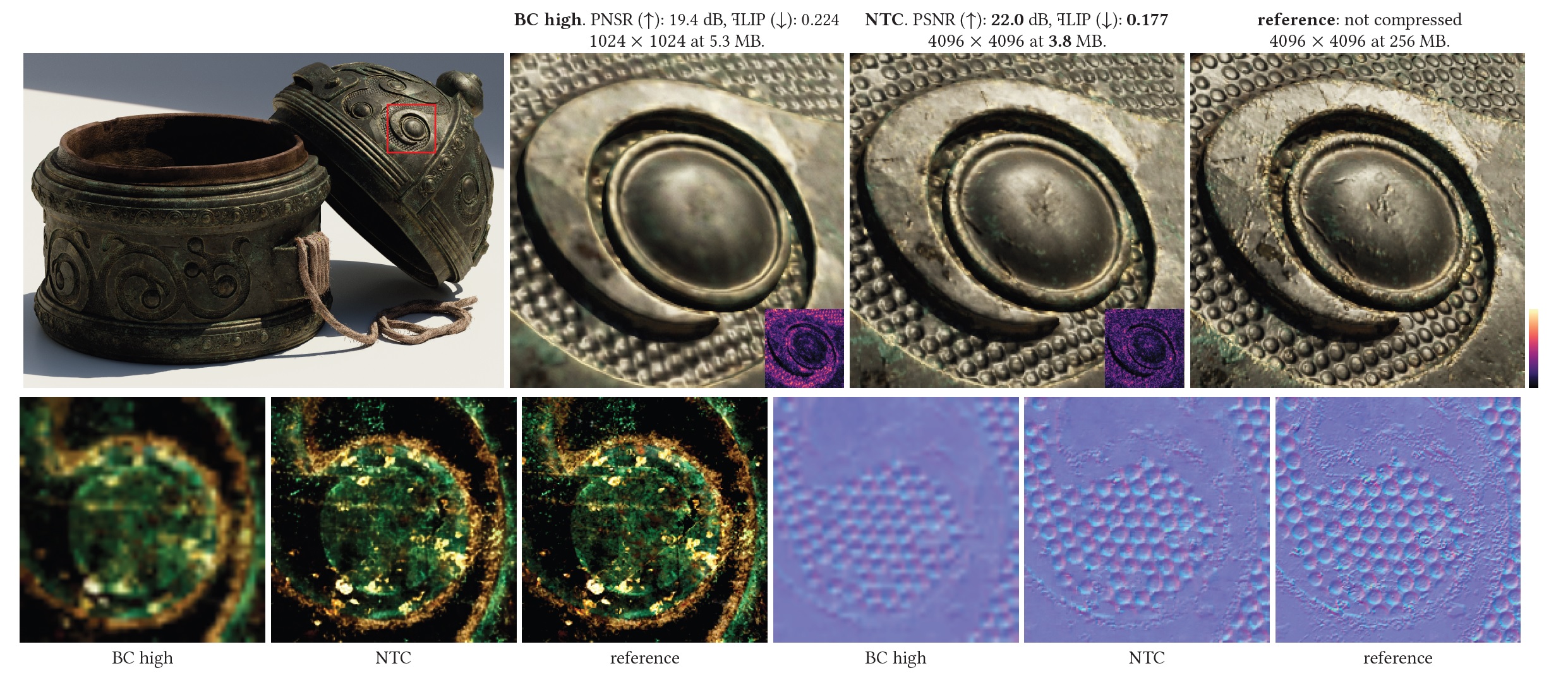

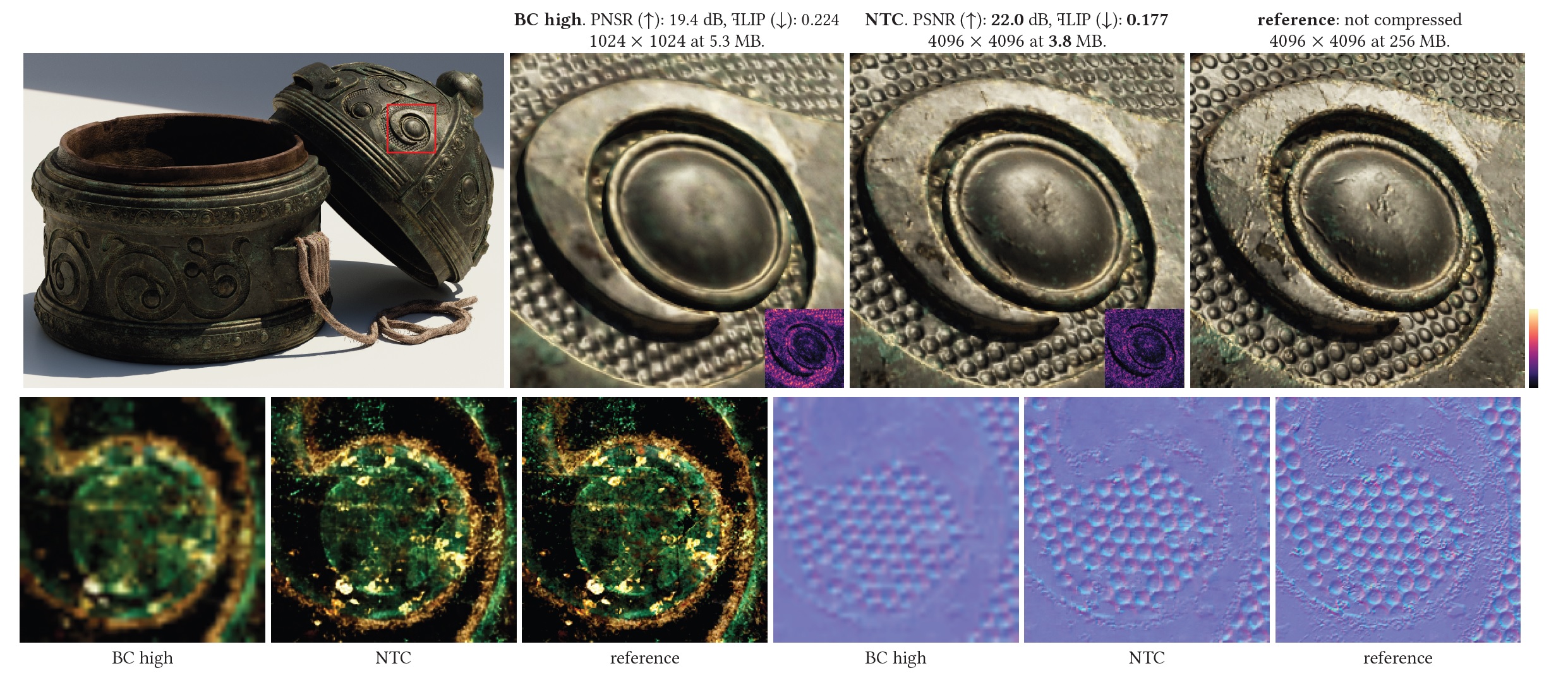

Texture compression techniques, even nowadays, are still based on S3 Graphics's S3TC scheme (remember that company? One of the many from the early days, alongside 3dfx, etc.), later renamed BC in DirectX and followed by many BCx iterations. Basically it's block truncation coding with color cell compression. The later versions were made to store alpha, normal maps, HDR, etc.
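For anyone curious what "block truncation coding" means in practice, here's a minimal Python sketch of the BC1 (DXT1) idea. Each 4x4 block of pixels is stored as two RGB565 endpoint colors plus a 2-bit palette index per pixel, so 64 bits cover 16 pixels (4 bits per pixel). The function names are hypothetical. The endpoint choice (just the two most distant pixels) and the integer rounding are simplifying assumptions. Real encoders fit a line through the block's colors, and hardware decoders round slightly differently.

```python
import struct

def to_565(rgb):
    """Quantize an 8-bit (r, g, b) color to a 16-bit RGB565 value."""
    r, g, b = rgb
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def from_565(c):
    """Expand RGB565 back to 8 bits per channel by replicating the high bits."""
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))

def palette(c0, c1):
    """Build the 4-entry block palette from the two stored endpoints."""
    a, b = from_565(c0), from_565(c1)
    if c0 > c1:
        # 4-color mode: two endpoints plus two interpolated colors at 1/3 and 2/3
        return [a, b,
                tuple((2 * x + y) // 3 for x, y in zip(a, b)),
                tuple((x + 2 * y) // 3 for x, y in zip(a, b))]
    # 3-color mode: midpoint, and index 3 means black/transparent in real BC1
    return [a, b, tuple((x + y) // 2 for x, y in zip(a, b)), (0, 0, 0)]

def dist2(p, q):
    """Squared Euclidean distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(p, q))

def encode_block(pixels):
    """Compress 16 RGB pixels (row-major 4x4 block) into 8 bytes."""
    assert len(pixels) == 16
    # Simplified heuristic: use the two most distant pixels as endpoints
    e0, e1 = max(((p, q) for p in pixels for q in pixels), key=lambda pq: dist2(*pq))
    c0, c1 = to_565(e0), to_565(e1)
    if c0 < c1:
        c0, c1 = c1, c0  # keep c0 > c1 so the block uses 4-color mode when possible
    pal = palette(c0, c1)
    usable = 4 if c0 > c1 else 3  # never pick the transparent entry for opaque input
    indices = 0
    for i, p in enumerate(pixels):
        best = min(range(usable), key=lambda k: dist2(p, pal[k]))
        indices |= best << (2 * i)  # pixel 0 goes in the lowest two bits
    # Little-endian: color0 (u16), color1 (u16), 32 bits of 2-bit indices
    return struct.pack("<HHI", c0, c1, indices)

def decode_block(data):
    """Decompress 8 bytes back into 16 RGB pixels."""
    c0, c1, indices = struct.unpack("<HHI", data)
    pal = palette(c0, c1)
    return [pal[(indices >> (2 * i)) & 0b11] for i in range(16)]

block = [(255, 0, 0)] * 8 + [(0, 0, 255)] * 8
packed = encode_block(block)
print(len(packed), decode_block(packed) == block)
```

That two-color block survives the round trip exactly, while a smooth gradient only gets four shades per block. The payoff is that 48 bytes of raw RGB become 8 bytes (64 with alpha, so 8:1 against RGBA8), and a GPU can decode any block independently. That fixed-rate, random-access property is why the BCx family has stuck around so long.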

Everything will be AI in the future.

Imagine if Switch 2 uses this, motherfucking game changer. At quality matching the previous technique, you would save so much space: smaller downloads, cartridge-friendly sizes, and a huge saving in hardware bandwidth.
