mckmas8808

Mckmaster uses MasterCard to buy Slave drives

10 days ago.

I'm so confused by this leaker. Why does it say 12 GB of DDR4 RAM usable for games and also 20 GB of RAM usable for games?

It's no different than optimizing for PC at this point: more toggles and switches for more effects and resolutions. Xbox One X games look phenomenal, but that doesn't mean the Xbox One held any games back. They just enable more settings and provide 4K asset packs.

I would rather devs have a top-tier baseline, pushing a Crysis (as in, something that would not even run on the 4TF machine even with the same CPU and resolution) and taking advantage of all that power, than scale something that looks cross-gen/mid-gen refresh from day zero (all gen long), with only a resolution boost and minor tweaks to shadows, etc., because of a handicap.

And what happens if there are mid-gen refreshes 3-4 years in again? Even more redundant tiers of power, or an assed-out 4TF system?

8 GB HBM2 + 12 GB DDR4 = 20 GB of RAM in total: a combination of "fast" and "slow" RAM that the SDK manages automatically. Developers may also manage how usage is distributed across both memory pools.
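To make the "fast + slow pool" idea concrete, here is a minimal Python sketch, assuming a simple fast-first policy: allocations go into the 8 GB HBM2 pool until it is full, then spill into the 12 GB DDR4 pool, and a developer can optionally pin an allocation to a specific pool. The `Pool` and `TieredAllocator` names and the placement policy are hypothetical; nothing in the leak describes the SDK's actual API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

GIB = 1024 ** 3  # bytes per GiB; the thread's "GB" is treated as GiB in this sketch


@dataclass
class Pool:
    name: str
    capacity: int  # bytes
    used: int = 0

    def free(self) -> int:
        return self.capacity - self.used


@dataclass
class TieredAllocator:
    """Hypothetical fast/slow allocator: fast pool first, spill to the slow pool."""
    fast: Pool
    slow: Pool
    placements: Dict[str, str] = field(default_factory=dict)

    def total_capacity(self) -> int:
        return self.fast.capacity + self.slow.capacity

    def alloc(self, name: str, size: int, prefer: Optional[str] = None) -> str:
        if prefer is not None:
            # Developer override: only consider the named pool.
            candidates = [p for p in (self.fast, self.slow) if p.name == prefer]
        else:
            # Automatic policy: try the fast pool, then fall back to the slow one.
            candidates = [self.fast, self.slow]
        for pool in candidates:
            if pool.free() >= size:
                pool.used += size
                self.placements[name] = pool.name
                return pool.name
        raise MemoryError(f"no room for {name!r} ({size} bytes)")
```

For example, with `TieredAllocator(Pool("HBM2", 8 * GIB), Pool("DDR4", 12 * GIB))`, a 6 GiB texture allocation lands in HBM2, and a following 3 GiB allocation spills to DDR4 because only 2 GiB of HBM2 is left. A hardware cache controller would also move data between pools after allocation, which this sketch does not model.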

So, why is Sony going for the split memory pool? Wouldn’t it be better to have just a unified GDDR6 memory pool? The split memory pool reminds me of XB1.

Yeah, it’s weird. I can’t wait until everything is released so we can get a better understanding.

And I thought bandwidth was key in a console. Why go with HBM2 if the bandwidth is half of what it needs to be?

But the benefit of a console is that it's "NOT" like developing for a PC. Who cares about scaling when you are making an exclusive game for one console? And yes, Xbox One X games were held back by the Xbox One S. Exclusive X1X titles would look miles better than they do now if they didn't have to support the "S".

The pool for games is 8 GB HBM2 + 12 GB DDR4.

There is no split... it is a single pool of memory.

Not the SDK... HBCC works at the hardware level... the APU sees it directly as a single memory pool.

Both memory pools are shared between the CPU and GPU.

This sounds interesting.

Can you explain to me what HBCC is? I asked before...

techgage.com

I did not see...

What is HBCC?

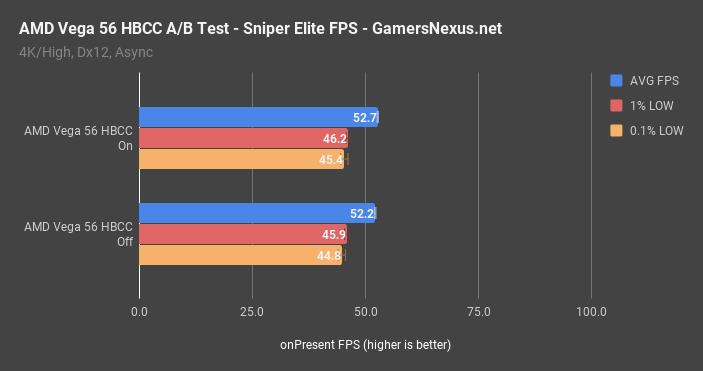

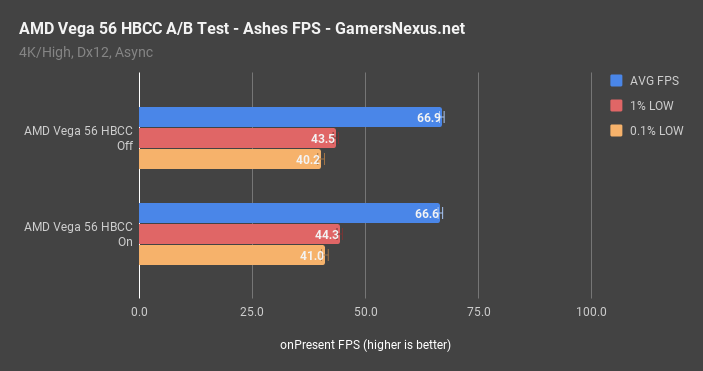

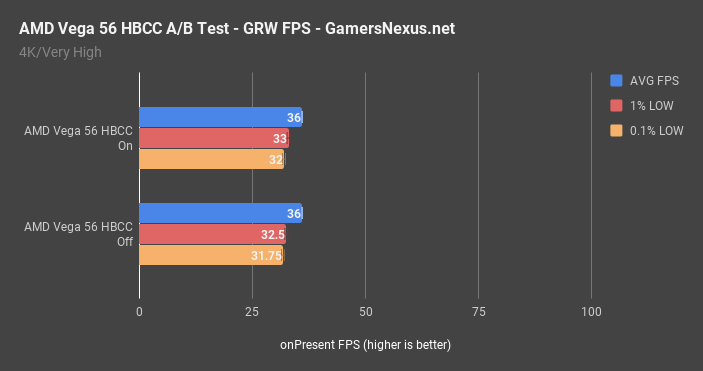

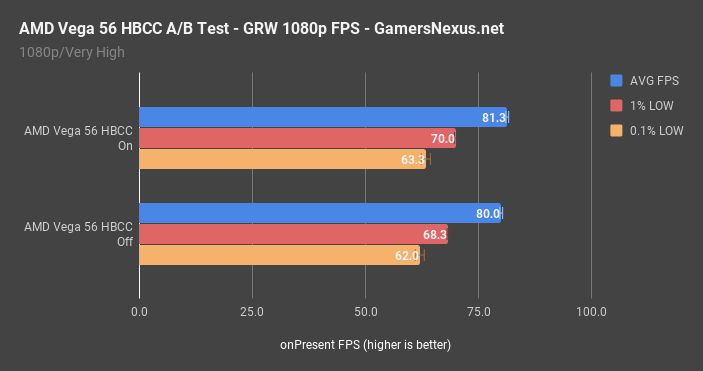

HBCC is the controller for AMD’s high-bandwidth cache, which is what the company has functionally renamed VRAM. There is no hard threshold as to what governs the naming designation of “high-bandwidth cache,” and should AMD produce a hypothetical GDDR5 Vega GPU, its framebuffer would also be named “high-bandwidth cache.” In other words, the card does not need HBM to have its framebuffer designated as HBC.

AMD’s High-Bandwidth Cache Controller is disabled by default. When enabled, the controller effectively converts VRAM into a last-level cache equivalent, then reserves a user-designated amount of system memory for allocation to the GPU. If the application pages out of the on-card 8GB of HBM2, a trade-off between latency and capacity occurs and the GPU taps into system memory to grab its needed pages. If you’re storing 4K textures in HBM2 and exceed that 8GB capacity, and maybe need another 1GB for other assets, those items can be pushed to system memory and pulled via the PCIe bus. This is less effective than increasing on-card memory, but is significantly cheaper than doing so, even in spite of DDR pricing. Latency is introduced by traveling across the PCIe interface, through the CPU, and down the memory bus, then back, but it’s still faster than having to dump memory and swap data locally.
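The paging behaviour described above can be modelled, very roughly, as an LRU cache of fixed-size pages: the on-card HBM2 holds a limited number of resident pages, and every miss pulls a page in from the reserved system-memory region (over PCIe on a discrete card). This Python sketch assumes least-recently-used eviction and counts each miss as one page transfer; AMD's actual page size and replacement policy are not given in the article, so treat both as assumptions.

```python
from collections import OrderedDict


class HighBandwidthCacheSketch:
    """Toy model: on-card memory acts as an LRU cache of fixed-size pages
    backed by a larger reserved region of system memory."""

    def __init__(self, cache_pages: int):
        self.cache_pages = cache_pages   # how many pages fit in on-card memory
        self.resident = OrderedDict()    # page id -> None, oldest access first
        self.hits = 0
        self.misses = 0                  # each miss = one page pulled from system memory
        self.evictions = 0

    def access(self, page: int) -> bool:
        """Touch a page; return True on a hit, False if it had to be paged in."""
        if page in self.resident:
            self.resident.move_to_end(page)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.resident) >= self.cache_pages:
            # Evict the least recently used page back to system memory
            # (the write-back itself is not modelled here).
            self.resident.popitem(last=False)
            self.evictions += 1
        self.resident[page] = None
        return False
```

With room for 3 pages, the access sequence 1, 2, 3, 1, 4, 2 gives one hit and five misses, since touching page 1 again keeps it resident while 2 and then 3 get evicted.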

Same thing that happens to the PS4, PS4 Slim, PS4 Pro, and the upcoming 7nm PS4 Slim. They receive cross-gen games for a year or 2 and then go on to xCloud life support afterwards.

Fine, but then what happens to the One X? Do they kill it, given you expect next-gen to be backwards compatible?

My fundamental point in all of this is that bringing 2 new consoles to the market, in addition to the existing One, One S, One X, SAD edition, etc., makes for potential confusion. Marketing that lot could be a real challenge.

Devs have been wrestling with nasty setups on console this whole gen, like the XO's weird memory setup, but it's hard for them to detect the system and select 1080p output instead of 4K?

Exactly! I don't think people honestly realize how making games for a console is different from making them for a PC. The mindset is different. If you are a dev and can design the game from the ground up to only work on a console that has 14 TF of power, it'll be designed differently than if you had to support a 4 TF console.

This thread is a locomotive that runs 24hrs a day 7 days a week. Choo Choo!

Maybe, but the Xbox One X and the Xbox One S are fairly different. The XOS is 1.4 TF with significantly slower RAM, while the XOX is 6 TF with significantly faster RAM. That's a very big difference, but that's also the difference needed to let current-generation games hit 4K. For next-gen, the difference will be graphics at 1080p or 4K, give or take a few effects and toggles. The games will most likely look prettier on the beefed-up system, kind of like how games scale on a PC.
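The 1.4 TF and 6 TF figures follow from the usual peak FP32 formula for AMD GCN GPUs: compute units × 64 shader lanes × 2 FLOPs per clock (one fused multiply-add) × clock speed. The CU counts and clocks below (12 CUs at 914 MHz for the One S, 40 CUs at 1172 MHz for the One X) are the publicly reported specs, not figures from this thread.

```python
def gcn_fp32_tflops(compute_units: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS for a GCN-style GPU: 64 lanes per CU, 2 FLOPs (one FMA) per lane per clock."""
    return compute_units * 64 * 2 * clock_mhz * 1e6 / 1e12


xbox_one_s = gcn_fp32_tflops(12, 914)   # about 1.40 TF
xbox_one_x = gcn_fp32_tflops(40, 1172)  # about 6.00 TF

pixels_1080p = 1920 * 1080
pixels_4k = 3840 * 2160

print(f"One S: {xbox_one_s:.2f} TF, One X: {xbox_one_x:.2f} TF")
print(f"compute ratio: {xbox_one_x / xbox_one_s:.2f}x, pixel ratio: {pixels_4k / pixels_1080p:.1f}x")
```

The roughly 4.3× compute gap sits close to the 4× pixel-count gap between 1080p and 4K, which fits the point that most of the One X's extra power goes into hitting 4K.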

These consoles are becoming more PC-like in architecture, but they are still focused on games, whereas the PC is a multi-tool. But to think they can't scale like they do now is a little naive, especially because all first-party games will end up on PC anyway and all third-party games will usually have PC ports.

EDITED HEAVILY.

The key point is that this pool is accessed by 2 memory controllers, so the issue on PS4 where CPU usage affects GPU access doesn't exist anymore.

I wonder if they had to create a smart emulator to allow the PS5 to do the bolded for PS4 games.

PS4 games will run natively without an emulator... the OS/game/app doesn't know there are 2 physical memory pools... it only sees one virtual memory pool, like on PS4.

PS4: 5.5GB available for games

PS5: 20GB available for games

What Sony will do is the same thing they did with PS4 Pro... they will make PS4 games run with limited CPU/GPU/RAM.

Of course, they will need to work in a Boost Mode like they did on PS4 Pro to fully use the PS5 hardware.

Edit: Just to avoid confusion lol, I don't know what the PS5 is... I'm just basing this on the rumor that it uses the HBCC feature.

Interesting. But still... that DDR4 would be limited to the (supposed) 102 GB/s, no? That's not great tbh.

But it is better to copy from other sites:

In simple terms, HBCC makes all of the system RAM + VRAM visible to the GPU (or APU) as a single memory pool.

So when the GPU has used all 8 GB of HBM2, it automatically starts to use the DDR4.

That happens at the hardware level.

It is basically the biggest new feature that Vega brought to the table.
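One way to picture "a single memory pool" is a flat virtual address range whose pages are backed by two physical pools. The Python sketch below uses a fixed split (the first pages map to HBM2, the rest to DDR4) purely for illustration; the 64 KiB page size and the `UnifiedAddressSpace` class are hypothetical, and a real controller like HBCC would remap pages dynamically instead of using a static boundary.

```python
from typing import Tuple

PAGE_SIZE = 64 * 1024  # hypothetical page size, for illustration only


class UnifiedAddressSpace:
    """Toy mapping: one contiguous virtual range backed by two physical pools.
    The program only ever sees virtual addresses in [0, size)."""

    def __init__(self, fast_pages: int, slow_pages: int):
        self.fast_pages = fast_pages
        self.slow_pages = slow_pages

    @property
    def size(self) -> int:
        return (self.fast_pages + self.slow_pages) * PAGE_SIZE

    def translate(self, vaddr: int) -> Tuple[str, int]:
        """Map a virtual address to (physical pool, physical byte offset)."""
        if not 0 <= vaddr < self.size:
            raise ValueError("address outside the unified range")
        vpage, offset = divmod(vaddr, PAGE_SIZE)
        if vpage < self.fast_pages:
            return ("HBM2", vpage * PAGE_SIZE + offset)
        # Pages past the fast region continue seamlessly into the slow pool.
        return ("DDR4", (vpage - self.fast_pages) * PAGE_SIZE + offset)
```

The game or app only deals in one address range; which physical pool a given address lands in is the mapping layer's business.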

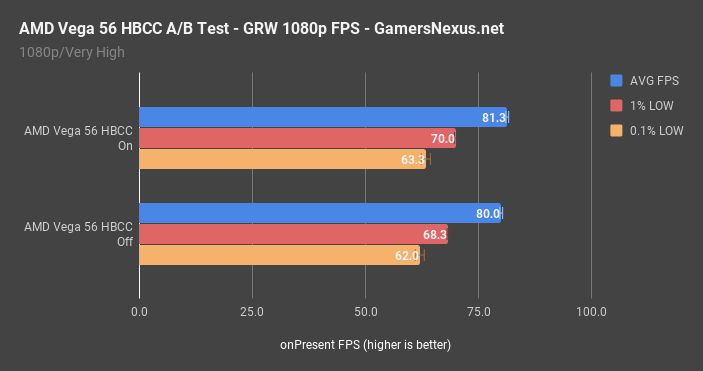

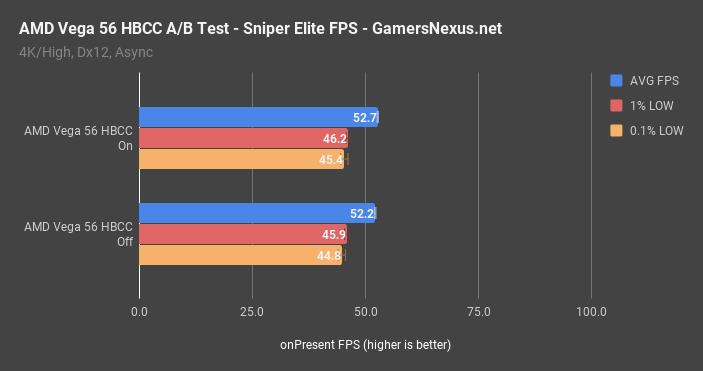

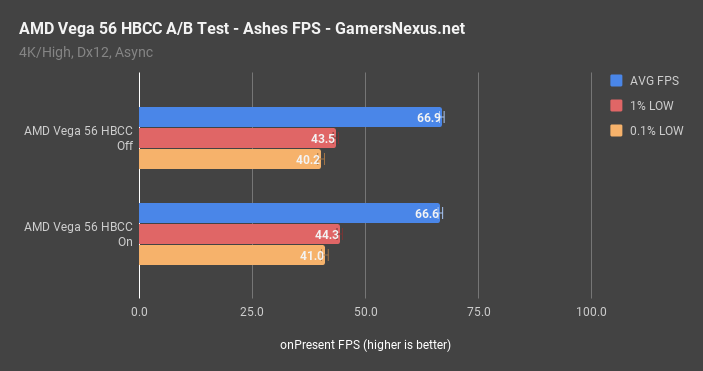

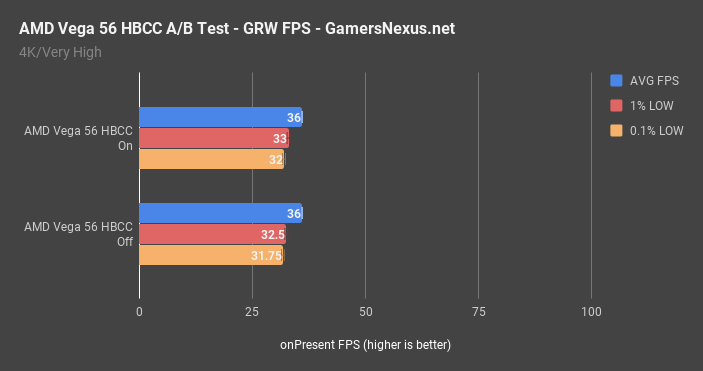

Well, benchmarks on PC show it did not lose performance when using more RAM from the DDR4.
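On the 102 GB/s question: peak DRAM bandwidth is just transfer rate × bus width ÷ 8. DDR4-3200 on a 256-bit bus works out to exactly 102.4 GB/s, so that is one configuration consistent with the rumored figure; the leak does not say which speed grade or bus width is actually used.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, bus_width_bits: int) -> float:
    """Peak DRAM bandwidth in GB/s (10^9 bytes/s).
    MT/s x bits per transfer / 8 gives MB/s; dividing by 1000 gives GB/s."""
    return transfer_rate_mts * bus_width_bits / 8 / 1000


ddr4_3200_256bit = peak_bandwidth_gbs(3200, 256)  # 102.4 GB/s
ddr4_2400_256bit = peak_bandwidth_gbs(2400, 256)  # 76.8 GB/s
```

These are theoretical peaks; sustained bandwidth in practice is lower.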

It's not worthless.

I'm not worried about pretty graphics for the most part. It's gonna be the guts (AI, etc.) that are important to me.

But yes, a mid-gen refresh is very likely with this gen as well.

Textures and shadows are much more scalable than AI.

Just my worthless opinion.

I'm happy with 13tf

Regarding the HBCC rumor, these are the key points we should be looking at:

It looks like an advanced way of doing things and sounds impressive on paper. The last part answers the question of why not GDDR6 instead.

- memory automatically managed by HBCC and appears as 20 GB to the developers

- HBCC manages streaming of game data from storage as well

- developers can use the API to take control if they choose and manage the memory and storage streaming themselves

- memory solution alleviates problems found in PS4

- namely that CPU bandwidth reduces GPU bandwidth disproportionately

- 2 stacks of HBM have 512 banks (more banks = fewer conflicts and higher utilization)

- GDDR6 is better than GDDR5 and GDDR5X in that regard, but still has fewer banks than HBM
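The "more banks = fewer conflicts" point can be illustrated with a birthday-problem estimate: if several independent requests each hit a uniformly random bank, the chance that no two of them collide drops much faster when there are fewer banks. Uniform random access is a simplifying assumption (real access patterns and address-to-bank mappings are not uniform), and the 128-bank case below is a hypothetical smaller memory system, not a GDDR6 spec.

```python
from math import prod


def p_no_bank_conflict(banks: int, requests: int) -> float:
    """Probability that `requests` independent accesses, each to a uniformly
    random bank, all land in different banks."""
    if requests > banks:
        return 0.0  # pigeonhole: a conflict is guaranteed
    return prod((banks - i) / banks for i in range(requests))


for banks in (128, 512):
    print(banks, "banks:", round(p_no_bank_conflict(banks, requests=16), 3))
```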

HBM2 is better than GDDR6 in both access latency and speed, given the right bus.

The only issue is PRICE.

GDDR6 is cheaper to buy and implement... HBM2 is expensive to buy and implement.

But for the future, isn't HBM3, which will be cheaper than GDDR6, coming out in 2020 with the same better latency and speeds as HBM2, while GDDR7 won't come before 2025?

Damn, I had no idea about that... it looks really good!

AMD Vega 56 HBCC Gaming Benchmarks: On vs. Off

AMD’s High-Bandwidth Cache Controller protocol is one of the keystones to the Vega architecture, marked by RTG lead Raja Koduri as a personal favorite feature of Vega, and highlighted in previous marketing materials as offering a potential 50% uplift in average FPS when in VRAM-constrained... (www.gamersnexus.net)

Is it possible Sony can use HBM3 on future PS5s to replace the HBM2 for cost savings?

That is the basis of the whole rumor... HBM2 and HBM3 are 100% compatible, so Sony got a big deal on HBM2 right now, and in one or two years they will shift to HBM3 to cut costs.

Man, that's amazing and crazy good foresight by Sony, if true. I'm still saddened by the low 400+ GB/s bandwidth of HBM2, though.
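For reference, HBM bandwidth is stacks × interface width × per-pin data rate ÷ 8, with a 1024-bit interface per stack. Two stacks at 1.6 Gbps per pin give 409.6 GB/s, which matches the "400+ GB/s" figure; the other pin rates below are assumptions chosen to show what it would take to pass 600 GB/s, not leaked specs.

```python
def hbm_bandwidth_gbs(stacks: int, gbps_per_pin: float, bits_per_stack: int = 1024) -> float:
    """Peak HBM bandwidth in GB/s: stacks x interface bits x Gbit/s per pin / 8."""
    return stacks * bits_per_stack * gbps_per_pin / 8


two_stacks_1_6 = hbm_bandwidth_gbs(2, 1.6)  # ~409.6 GB/s
two_stacks_2_0 = hbm_bandwidth_gbs(2, 2.0)  # 512 GB/s
two_stacks_2_4 = hbm_bandwidth_gbs(2, 2.4)  # ~614.4 GB/s
```

So, staying at two stacks, getting past 600 GB/s takes per-pin rates of roughly 2.4 Gbps or more; a third stack at 1.6 Gbps would also get there.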

Personally, I'm interested to know how our old buddy Leocarian, who was banned here and fled to Era, is now banned over there.

Who is he?

Self-proclaimed "insider" who was verified over at Era.

Oh, why did he get banned?

The bandwidth is to be expected because HBM is fairly new tech; with HBM3 and onwards, I’m sure the bandwidth is going to get better and better.

Yup. >600GB/s bandwidth or bust.

But Sony will not be able to take advantage of PS5s with HBM3, since it will not be in every PS5 (the launch version will have HBM2).

You can’t have everything! The PS5 and the next Xbox will be good systems. Enjoy whichever you pick, and the games will look great.

Well, a couple of things...

1. GPU processing will be used on a whole lot more things than just resolution and basic graphics. The difference between a 4 TF console and a 14 TF console is HUGE! Nobody is using 10 TF worth of power just to add a couple of effects and boost the resolution to 4K.

2. And how much time and resources will these "extra effects" get from developers when 40% of the Xbox Next fanbase doesn't even have the Anaconda version, because they have Lockhart? Whole game-engine features will be created because of the amount of power these next-gen systems will have. For example, look at Dreams. What they do in Dreams is impossible with current traditional rasterization on GPUs; they needed to build a compute-shader-based rendering engine.

That lets you create a game engine that can make a game look like all of the below. These 4 screenshots have no polygons at all: unlike 99.9% of all other games, the engine doesn't use polygons.
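Dreams' renderer is only described here as compute-based and polygon-free. As an illustration of what "no polygons" can mean, here is a minimal Python sketch of sphere tracing a signed distance field (SDF), one well-known polygon-free technique: the scene is a function returning the distance to the nearest surface, and a ray advances by that distance until it hits something. This is not a description of how Dreams actually renders; the one-sphere scene and all parameter values are assumptions.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius


def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-4, max_dist=100.0):
    """March along a normalized ray, stepping by the SDF's distance bound each time.
    Returns the hit distance t, or None if nothing is hit."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist_bound = sdf(p)
        if dist_bound < eps:
            return t  # close enough to the surface: count it as a hit
        t += dist_bound  # safe step: no surface is nearer than dist_bound
        if t > max_dist:
            break
    return None
```

A ray fired from the origin straight down +z hits the sphere's near surface at distance 4; a GPU version would run the same loop once per pixel in a compute shader.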

You could probably get it down a little bit on a closed system like a console, hence why the two Xboxes are rumoured to be 4 TF and 12 TF.

If this is true, they will once again cripple the crap out of next gen. You won't get devs developing new rendering pipelines and games for the 12 TF machine. They will develop for 4 TF and just boost dynamic resolutions and fps, because it is the easiest thing to do. Next gen would just be the 4 TF machine, but with the option of higher res and fps if you pay through the nose.

That's what I'm saying. I want Crysis up in this bitch on 12 TF that won't run on 4; I don't want your low-to-high Fortnite scaling (hyperbole, obviously).

Why does this have to keep being repeated ad nauseam? The rumors talk of a 4 TF 1080p machine and a 12 TF 4K machine; they use the same base architecture, so they will both take advantage of new rendering pipelines. In fact, the 4 TF machine at 1080p should have a little more headroom than the 12 TF machine at 4K! It’s like the difference between a 1060 and a 1080. Now, if we are talking about the 12 TF machine rendering at 1080p, that’s a horse of a different color.
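The headroom claim can be checked with rough per-pixel arithmetic, assuming both rumored machines (Lockhart at 4 TF, Anaconda at 12 TF) target the same frame rate and that GPU cost scales linearly with pixel count, which is a big simplification. 4K has exactly 4× the pixels of 1080p, so 12 TF at 4K works out to 3 TF per 1080p-worth of pixels, versus 4 TF for the smaller machine.

```python
def flops_per_pixel(tflops: float, width: int, height: int) -> float:
    """Peak FLOP/s available per rendered pixel (same frame rate assumed on both machines)."""
    return tflops * 1e12 / (width * height)


lockhart_1080p = flops_per_pixel(4, 1920, 1080)
anaconda_4k = flops_per_pixel(12, 3840, 2160)
headroom = lockhart_1080p / anaconda_4k  # about 1.33, i.e. roughly a third more per pixel
```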

400 GB/s of bandwidth seems way too low considering the size of the GPU and the amount of RAM in the console. Seems like it's actually NOT balanced.

RTX 2080 is stronger than anything you will see on next-gen consoles...