TheRedRiders

Member

Deep Learning Super Soap

That's RTX Blur, which realistically blurs textures depending on the weather and lighting /s

Deep Learning Super Soap

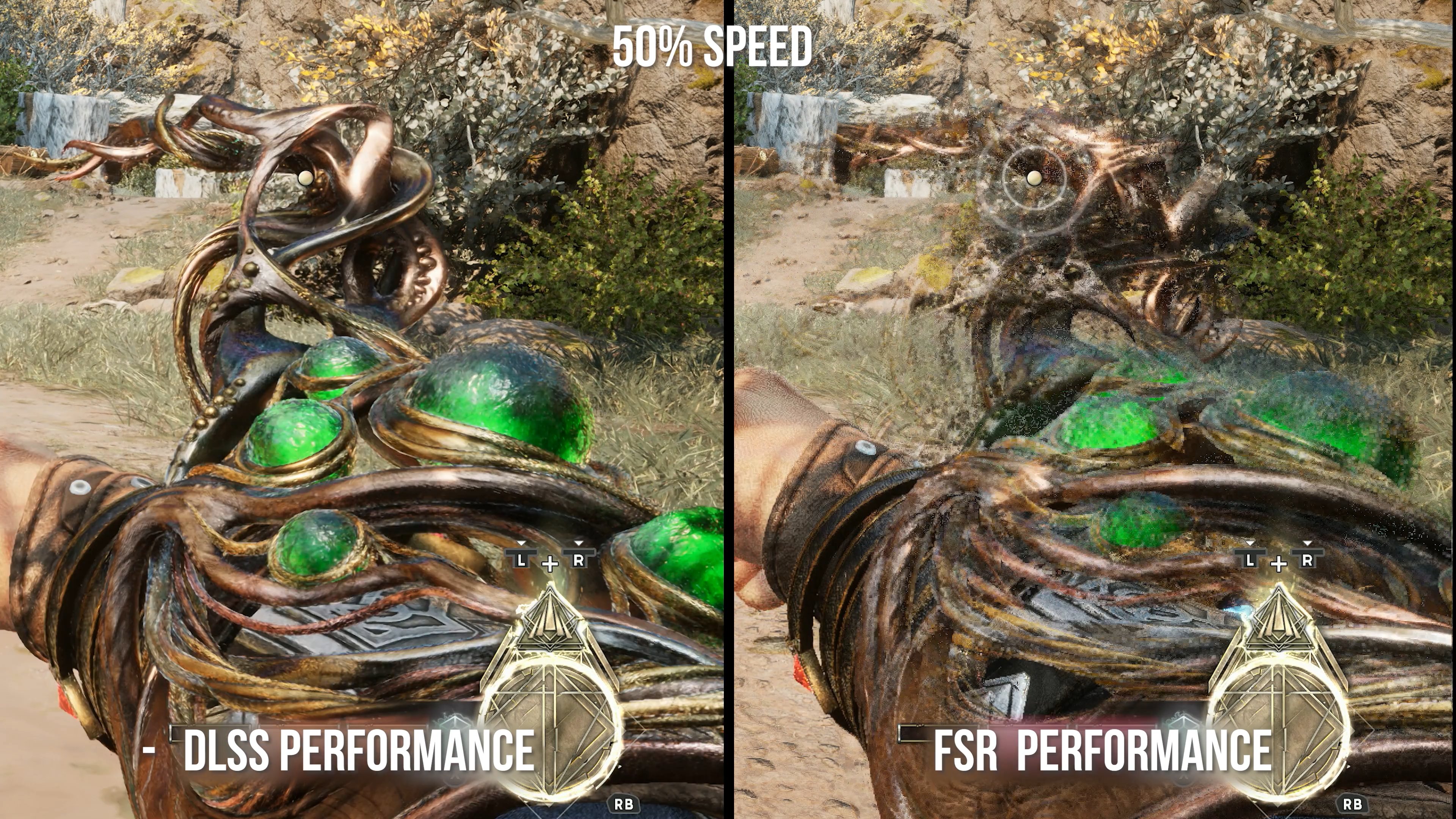

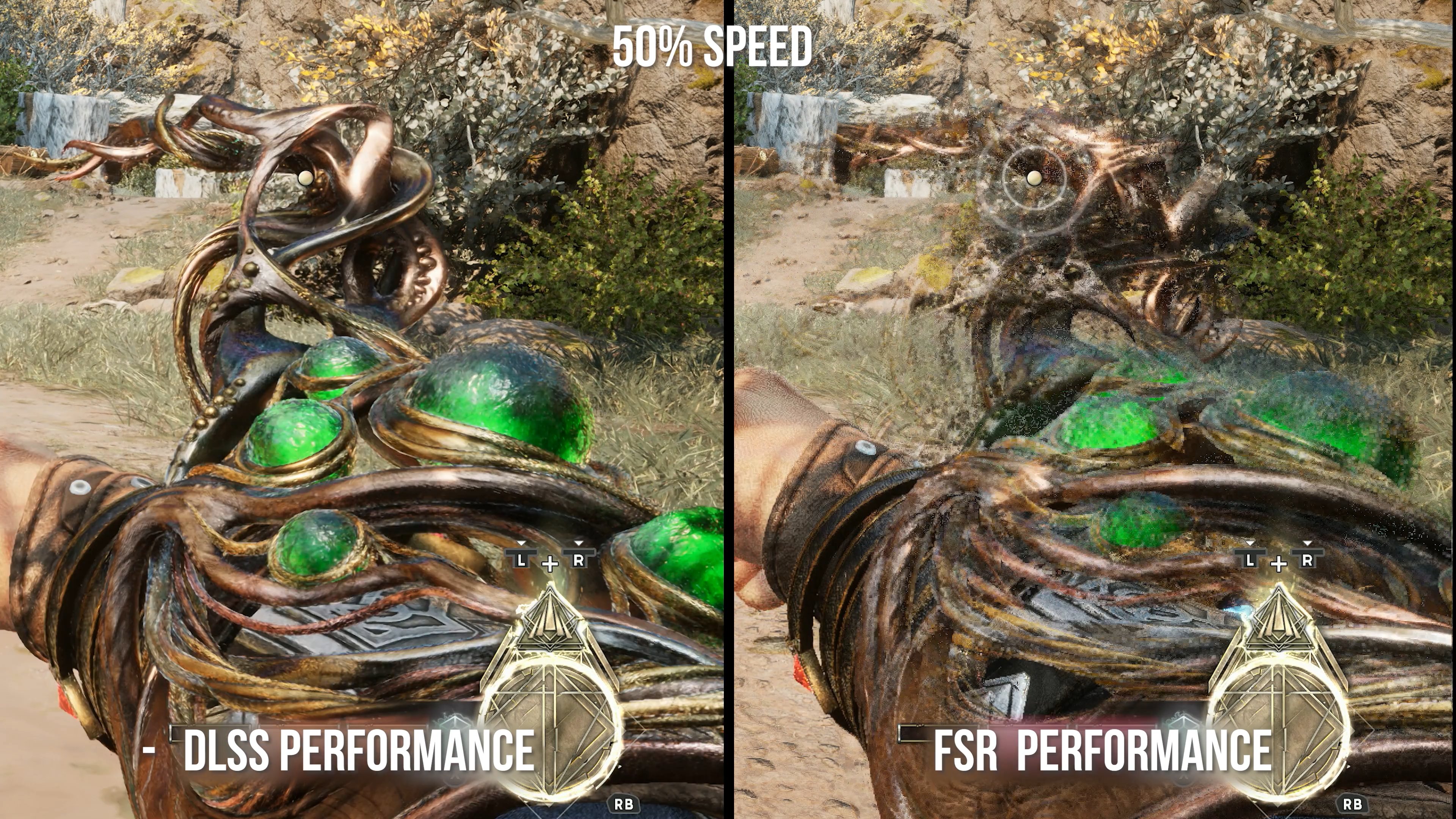

Immortals of Aveum: DLSS Performance vs. FSR

FSR 2.2 Quality mode is fantastic and super close to DLSS outside of a few fringe scenarios that almost no one would notice in normal play anyway.

In a real scenario, you won't notice. You only notice whether the picture is sharp or soapy.

In Ghostwire, FSR2 was the superior implementation, better than native in my own testing. Other than that, picture quality is worse with FSR.

cool story bro

Show me any game where DLSS Quality (at 1440p and above) looks worse than native with TAA? Especially in motion.

FSR Performance mode is not good, but FSR 2.2 Quality mode is fantastic and super close to DLSS outside of a few fringe scenarios that almost no one would notice in normal play anyway.

FSR 2.2 Quality at 1440p is not fantastic and still not close to DLSS Quality at 1440p.

Deep Learning Super Soap

Immortals of Aveum: DLSS Performance vs. FSR

Yeah, because FSR has a sharpening filter applied and DLSS does not. When it comes to literally everything else, DLSS is superior.

Show me any game where DLSS Quality (at 1440p and above) looks worse than native with TAA? Especially in motion.

FSR 2.2 Quality at 1440p is not fantastic and still not close to DLSS Quality at 1440p.

lol

FSR 2.2 Quality at 1440p renders at an even lower resolution than 4K Performance.

DLSS2 still holds up well at 1440p Quality.
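For anyone who wants to check the resolution claim, here is a quick sketch. It assumes the commonly published per-axis scale factors (1.5x for Quality, 2.0x for Performance), and the helper name `render_resolution` is made up for illustration.

```python
# Per-axis upscaling factors. Assumption: the publicly documented FSR 2 presets,
# which match DLSS for the Quality and Performance modes.
SCALE_FACTORS = {"quality": 1.5, "performance": 2.0}

def render_resolution(out_width, out_height, mode):
    """Return the internal (width, height) that is rendered before upscaling."""
    factor = SCALE_FACTORS[mode]
    return round(out_width / factor), round(out_height / factor)

quality_1440p = render_resolution(2560, 1440, "quality")
performance_4k = render_resolution(3840, 2160, "performance")

print(quality_1440p)   # (1707, 960)
print(performance_4k)  # (1920, 1080)
```

Under those assumptions, 1440p Quality starts from roughly 960p while 4K Performance starts from 1080p, so the upscaler has fewer source pixels to work with in the 1440p case.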

I'd broadly agree on XeSS being better than FSR 90% of the time. There are some games that do better with FSR but those are a minority.

If they can improve XeSS further, it'll be very close to DLSS. I think AMD chose to focus more on FSR 3 the entire year though.

AMD was so focused on trying to catch up to NVIDIA with frame generation, they forgot they still had to catch up to NVIDIA in temporal upscaling.

They can't really catch up on the upscaling because they don't use AI/ML. No mathematical algorithm will ever be able to match the use of AI in the upscaler.

But they can use AI for an upscaler. Even if it's just DP4A like with XeSS.

For some unknown, stupid reason, they refuse to use it.

Does XeSS use AI? Because on a 4080, XeSS >>>>>>> FSR.

Yes. It uses ML on Intel Arc hardware. XeSS uses the XMX units to upscale using INT8. On older Intel integrated GPUs, it uses DP4a. On non-Intel GPUs, it uses an SM 6.4 path. Last we checked though, XeSS performed quite a bit better on Intel Arc GPUs than on NVIDIA or AMD thanks to using dedicated hardware to reduce rendering time.

For some unknown, stupid reason, they refuse to use it.

It might be to stay as close to DLSS as possible performance-wise at the same presets. They might think people probably don't notice the image quality differences, but if their AI/ML upscaler ran 10 fps slower than DLSS at the same base resolution and preset, that would be much easier to show and notice in online benchmarks.

But they can use AI for an upscaler. Even if it's just DP4a like with XeSS.

For some unknown, stupid reason, they refuse to use it.

The DP4a path seems to run noticeably worse than FSR or DLSS at the same quality in a lot of games though, especially on AMD hardware. It's not so bad on NVIDIA hardware.

That's because DP4a is just an instruction that runs the dot products behind matrix operations on the regular shaders. So when doing AI, some shaders can't be used for normal compute operations.

While on Arc and NVIDIA, there are dedicated tensor units to deal with matrix operations.

And you just answered your own question, did you not?

Edit: Also, wait, no. It uses the shader model 6.4 path on non-Intel GPUs. DP4a is only used on Intel integrated GPUs. Has this changed with 1.2?
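For reference, DP4a boils down to a dot product of four packed signed 8-bit integers added to a 32-bit accumulator. Below is a minimal Python sketch of that arithmetic. The helper names are made up for illustration, and it ignores the 32-bit wraparound real hardware would apply.

```python
def pack_int8x4(values):
    """Pack four signed 8-bit integers (-128..127) into one 32-bit word, lowest byte first."""
    word = 0
    for i, v in enumerate(values):
        word |= (v & 0xFF) << (8 * i)
    return word

def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    lanes = []
    for i in range(4):
        byte = (word >> (8 * i)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes

def dp4a(a, b, acc):
    """Four-way int8 dot product of the packed words a and b, added to acc."""
    return acc + sum(x * y for x, y in zip(unpack_int8x4(a), unpack_int8x4(b)))

a = pack_int8x4([1, -2, 3, -4])
b = pack_int8x4([5, 6, -7, 8])
print(dp4a(a, b, 10))  # 10 + (5 - 12 - 21 - 32) = -50
```

A matrix multiply for inference is a long chain of these. On a GPU without dedicated matrix units, that work is issued to the same shader ALUs the game is using, which is the contention described above.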

I didn't have any question. I was just elaborating on your statement.

XeSS will run on XMX cores for newer Intel GPUs that have these cores.

It will run on DP4a on GPUs that support it, like RDNA2 and later, and Pascal and later.

For other GPUs, it will use SM 6.4.

Strange, because it was my understanding that DP4a was strictly for Intel integrated GPUs (which I found really bizarre).

I know DF said there were three kernels that worked as you said, but there was a separation between DP4a and SM 6.4 support. Maybe it was a misunderstanding on their part, or it got updated? Regardless, I never thought it made a lot of sense to reserve the DP4a path strictly for integrated Intel GPUs when more recent GPUs also support it.
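The three-way fallback described in the quoted post can be sketched as below. This is only an illustration of that description, not Intel's actual selection logic or any real XeSS API. `GpuCaps` and `pick_xess_path` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class GpuCaps:
    """Hypothetical capability flags for a GPU (not a real XeSS structure)."""
    has_xmx: bool        # dedicated matrix units, as on Intel Arc
    has_dp4a: bool       # hardware-accelerated packed int8 dot product
    supports_sm64: bool  # Shader Model 6.4 support

def pick_xess_path(caps):
    """Pick the fastest path the hardware supports, per the post's description."""
    if caps.has_xmx:
        return "XMX"
    if caps.has_dp4a:
        return "DP4a"
    if caps.supports_sm64:
        return "SM 6.4"
    return "unsupported"

print(pick_xess_path(GpuCaps(has_xmx=True, has_dp4a=True, supports_sm64=True)))    # XMX
print(pick_xess_path(GpuCaps(has_xmx=False, has_dp4a=True, supports_sm64=True)))   # DP4a
print(pick_xess_path(GpuCaps(has_xmx=False, has_dp4a=False, supports_sm64=True)))  # SM 6.4
```

Whether DP4a and SM 6.4 are really separate kernels or one cross-vendor path with optional DP4a acceleration is exactly what is being debated here, so treat the middle branch as the open question.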

The DP4a path was always intended as a secondary path for all GPUs without XMX units.

Okay, so I looked at the documentation and you should be correct.

An HLSL-based cross-vendor implementation that runs on any GPU supporting SM 6.4. Hardware acceleration for DP4a or equivalent is recommended.

It says "any" GPU supporting SM 6.4 which dates from times immemorial and that hardware acceleration for DP4a is recommended and as you noted, this has been available since Pascal and RDNA2 for NVIDIA and AMD respectively. Wouldn't make a single bit of sense to exclude the DP4a path from GPUs that support it and strictly leave it for Intel integrated GPUs. DF probably misunderstood Intel or Intel wasn't clear. Or maybe this got updated but I doubt it. Would have been stupid.

Truth be told, DF is far from being the most accurate source for tech news and info.

True, but they had a direct channel to Intel for that one. Intel sponsored the XeSS video so DF was actually in contact with them. They're one of the few outlets that can consistently get answers straight from the horse's mouth, which is incredibly valuable.

And despite having direct contact with Intel, they still screw up.

That's why I said that DF is far from being the most accurate source for tech info.

Yep, but what can you do? It's that or nothing. At least we can cross-reference their reports with what's publicly available.

But yeah, some of the shit they get wrong makes me scratch my head sometimes lol.

Plenty of tech sites and channels that do a much better job than DF.

I can't count that many, to be honest. GN and HU, for instance, are quite a bit better for analysis. So are der8auer and a handful of YouTubers, but their pieces are often not as in-depth and more generalized. They also tend to focus much more on hardware than software and seldom get backing or interviews from the devs. Most are also PC-centric, so there isn't that much to go by for console coverage.

DF got to sit down with Bluepoint, Nixxes, Intel, CDPR, Turn 10, and a bunch of others and ask them questions that interest us.

If you do have more that I might not be aware of, send them my way and I'll give them a gander.

GN and HU are hardware sites first, not so much centered on games. But they do a lot better coverage of benchmarks and tech than DF.

TechPowerUp also has game-specific performance analysis, including upscaler comparisons.

Then there is Chips and Cheese, probably the most in-depth coverage of hardware and how the software runs on it.

For comparing some games, DF still does a decent job. But to talk about tech, they are lacking.

Yeah, I have been following those regularly for a few years, Chips and Cheese more recently. They're excellent for in-depth coverage, but they have a very "developer"-like approach to their conclusions. As in, it seems that they can never see developers screwing up.

For instance, they said that there was nothing wrong with Starfield's performance on NVIDIA hardware when typically, AMD equivalent parts crushed them by like 35%. Lo and behold, with that big beta patch, NVIDIA is now much closer to AMD. AMD still runs better but by like 10-15%, not the outrageous performance differential there was before. Now I think the 4090 is substantially faster than the 7900 XTX whereas before, they were almost equal.

I hate how some console games announce their game is using FSR, like that's some sort of win. Upscaling and checkerboard rendering were much better on PS4 Pro and XB1X, we have gone backwards.

I don't think this is true, at least not entirely. FSR2 as a technology is definitely better than most solutions used back then, especially the PS4's checkerboard upscaling.

I hate how some console games announce their game is using FSR, like that's some sort of win. Upscaling and checkerboard rendering were much better on PS4 Pro and XB1X, we have gone backwards.

I wonder if this is due to resolution and not the implementation? Checkerboard on PS4 would use a 1440p or higher source. Games are now rendering as low as 720p, so it's going to look much worse even with a superior upscaler.

I hate how some console games announce their game is using FSR, like that's some sort of win. Upscaling and checkerboard rendering were much better on PS4 Pro and XB1X, we have gone backwards.

No, they weren't. Checkerboard was just typically operating at a much higher internal resolution because the target fps was often 30 anyway. Typically, checkerboard rendering used 1920x2160 to upscale to 4K. That's much, much higher than games using FSR2 to upscale to 4K from a 1600x900 resolution.
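To put numbers on that gap, here is a small sketch comparing the pixel counts of the two internal resolutions mentioned above against a 3840x2160 target. The `pixels` helper is just for illustration.

```python
def pixels(width, height):
    """Total pixel count for a given resolution."""
    return width * height

target_4k = pixels(3840, 2160)
checkerboard = pixels(1920, 2160)  # typical checkerboard source cited above
fsr2_900p = pixels(1600, 900)      # FSR2 source resolution cited above

print(checkerboard / target_4k)            # 0.5
print(round(fsr2_900p / target_4k, 3))     # 0.174
print(round(checkerboard / fsr2_900p, 2))  # 2.88
```

So the checkerboard case starts with half of the output pixels, while the 1600x900 case starts with about 17%, nearly three times less source data for the upscaler.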

Okay, so I looked at the documentation and you should be correct.

An HLSL-based cross-vendor implementation that runs on any GPU supporting SM 6.4. Hardware acceleration for DP4a or equivalent is recommended.

It says "any" GPU supporting SM 6.4 which dates from times immemorial and that hardware acceleration for DP4a is recommended and as you noted, this has been available since Pascal and RDNA2 for NVIDIA and AMD respectively. Wouldn't make a single bit of sense to exclude the DP4a path from GPUs that support it and strictly leave it for Intel integrated GPUs. DF probably misunderstood Intel or Intel wasn't clear. Or maybe this got updated but I doubt it. Would have been stupid.

It was always supported through SM 6.4.