Wow, there are lots of posts here, so I'll only respond to the last one. The interest in this subject is higher then we thought. The primary evolution of the benchmark is for our own internal testing, so it's pretty important that it be representative of the gameplay. To keep things clean, I'm not going to make very many comments on the concept of bias and fairness, as it can completely go down a rat hole.

Certainly I could see how one might see that we are working closer with one hardware vendor then the other, but the numbers don't really bare that out. Since we've started, I think we've had about 3 site visits from NVidia, 3 from AMD, and 2 from Intel ( and 0 from Microsoft, but they never come visit anyone ;(). Nvidia was actually a far more active collaborator over the summer then AMD was, If you judged from email traffic and code-checkins, you'd draw the conclusion we were working closer with Nvidia rather than AMD wink.gif As you've pointed out, there does exist a marketing agreement between Stardock (our publisher) for Ashes with AMD. But this is typical of almost every major PC game I've ever worked on (Civ 5 had a marketing agreement with NVidia, for example). Without getting into the specifics, I believe the primary goal of AMD is to promote D3D12 titles as they have also lined up a few other D3D12 games.

If you use this metric, however, given Nvidia's promotions with Unreal (and integration with Gameworks) you'd have to say that every Unreal game is biased, not to mention virtually every game that's commonly used as a benchmark since most of them have a promotion agreement with someone. Certainly, one might argue that Unreal being an engine with many titles should give it particular weight, and I wouldn't disagree. However, Ashes is not the only game being developed with Nitrous. It is also being used in several additional titles right now, the only announced one being the Star Control reboot. (Which I am super excited about! But that's a completely other topic wink.gif).

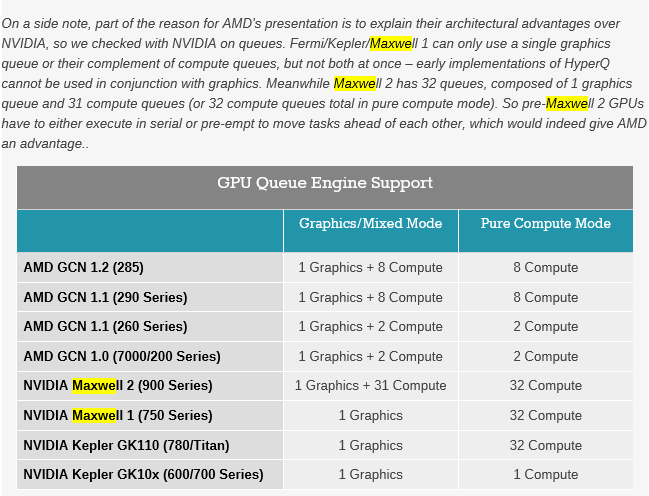

Personally, I think one could just as easily make the claim that we were biased toward Nvidia as the only 'vendor' specific code is for Nvidia where we had to shutdown async compute. By vendor specific, I mean a case where we look at the Vendor ID and make changes to our rendering path. Curiously, their driver reported this feature was functional but attempting to use it was an unmitigated disaster in terms of performance and conformance so we shut it down on their hardware. As far as I know, Maxwell doesn't really have Async Compute so I don't know why their driver was trying to expose that. The only other thing that is different between them is that Nvidia does fall into Tier 2 class binding hardware instead of Tier 3 like AMD which requires a little bit more CPU overhead in D3D12, but I don't think it ended up being very significant. This isn't a vendor specific path, as it's responding to capabilities the driver reports.

From our perspective, one of the surprising things about the results is just how good Nvidia's DX11 perf is. But that's a very recent development, with huge CPU perf improvements over the last month. Still, DX12 CPU overhead is still far far better on Nvidia, and we haven't even tuned it as much as DX11. The other surprise is that of the min frame times having the 290X beat out the 980 Ti (as reported on Ars Techinica). Unlike DX11, minimum frame times are mostly an application controlled feature so I was expecting it to be close to identical. This would appear to be GPU side variance, rather then software variance. We'll have to dig into this one.

I suspect that one thing that is helping AMD on GPU performance is D3D12 exposes Async Compute, which D3D11 did not. Ashes uses a modest amount of it, which gave us a noticeable perf improvement. It was mostly opportunistic where we just took a few compute tasks we were already doing and made them asynchronous, Ashes really isn't a poster-child for advanced GCN features.

Our use of Async Compute, however, pales with comparisons to some of the things which the console guys are starting to do. Most of those haven't made their way to the PC yet, but I've heard of developers getting 30% GPU performance by using Async Compute. Too early to tell, of course, but it could end being pretty disruptive in a year or so as these GCN built and optimized engines start coming to the PC. I don't think Unreal titles will show this very much though, so likely we'll have to wait to see. Has anyone profiled Ark yet?

In the end, I think everyone has to give AMD alot of credit for not objecting to our collaborative effort with Nvidia even though the game had a marketing deal with them. They never once complained about it, and it certainly would have been within their right to do so. (Complain, anyway, we would have still done it, wink.gif)

--

P.S. There is no war of words between us and Nvidia. Nvidia made some incorrect statements, and at this point they will not dispute our position if you ask their PR. That is, they are not disputing anything in our blog. I believe the initial confusion was because Nvidia PR was putting pressure on us to disable certain settings in the benchmark, when we refused, I think they took it a little too personally.

AFAIK, Maxwell doesn't support Async Compute, at least not natively. We disabled it at the request of Nvidia, as it was much slower to try to use it then to not.

Weather or not Async Compute is better or not is subjective, but it definitely does buy some performance on AMD's hardware. Whether it is the right architectural decision for Maxwell, or is even relevant to it's scheduler is hard to say.

http://www.overclock.net/t/1569897/...ingularity-dx12-benchmarks/1200#post_24356995

Parallel me if old.

My G-Sync monitor upgrade is starting to bite me in the ass (I still have an AMD GPU, was waiting for Pascal)