


Oxide: Nvidia GPUs do not support DX12 Asynchronous Compute/Shaders.

- Thread starter Arkanius


In a very simple way, which of course I'll get corrected down the thread:

AMD has been betting on parallel computing and asynchronous shading since the 7XXX series with GCN. This architecture is also the one used in the current consoles (Xbox One and PS4). Most console games benefit from low-overhead programming and are coded close to the spec of their hardware.

Enter Mantle, the low-overhead API that AMD wanted to bring to the PC side. This put a lot of pressure on Microsoft, which decided to make DX12 close to what Mantle and AMD were offering. AMD also gave Mantle away for free, and it is now Vulkan (the future of OpenGL).

So now we have two future APIs (DX12 and Vulkan) which benefit a lot from the way AMD designs its architectures.

If games are programmed to use the full DX12 API, for example, they will use async shaders, which are AMD-only as of right now. That's why in the Oxide benchmarks you have a 290X trading blows with a 980 Ti.

TL;DR

Nvidia has wonderful DX11 performance due to their serial architecture.

AMD has wonderful DX12 performance due to their parallel architecture.

Why did you decide to buy a G-Sync monitor 9+ months before you can actually use the main feature?

I bought the XB270HU; it's the best IPS monitor right now. Right now I'm using the 144Hz and the IPS panel (I came from an old TN monitor).

The G-Sync upgrade later on would just be a bonus. I couldn't splurge on a high-end GPU and a monitor at the same time, so I decided to do a phased roll-out.

Thanks for this, it makes a lot more sense. So basically Nvidia has banked on a different architecture, one that is counterproductive to the way DX12 works.

shiftplusone

Member

Either they are a couple of driver updates away from async compute support, or they expect this feature to only become relevant once Pascal is out.

Regardless, this has not hurt them (or current Nvidia users) at all.

Is this "correctable" in a driver update or does it require completely new hardware?

Portugeezer

Member

Some people here on GAF have mocked others, multiple times, for bringing up asynchronous compute in arguments about PS4 performance. If this is true, it deserves a pretty big wall of shame. Would be hilarious.

But wait. I don't want to be involved in any of this. Leave me alone.

The Tomorrow Children uses async compute, and that gave them a ~20% performance boost.

Dictator93

Member

I still think the OP and title are inaccurate. Heck, even the dev doesn't know the situation with Maxwell 2, and there is conflicting information.

BriareosGAF

Member

If you had been exposed to AMD's advertising efforts, you would know about their support for async shaders since February.

There is no such thing as an async shader; there is an async pipe/ring (generally exposed as an 'async device') upon which compute shaders run.
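To make the "pipe/ring" terminology concrete, here is a minimal sketch of a ring buffer, the kind of structure a GPU queue is commonly built on: the CPU writes command packets at a write pointer and the GPU front-end consumes them from a read pointer. This is a simplified model, not any vendor's actual hardware interface; the `CommandRing` class and its packet strings are hypothetical.

```python
from typing import List, Optional


class CommandRing:
    """Simplified fixed-size command ring (hypothetical model).

    The producer (CPU) writes packets at `write`; the consumer (GPU front-end)
    reads them from `read`. One slot is always left empty so that
    read == write unambiguously means "empty"."""

    def __init__(self, size: int) -> None:
        self.slots: List[Optional[str]] = [None] * size
        self.read = 0
        self.write = 0

    def push(self, packet: str) -> bool:
        nxt = (self.write + 1) % len(self.slots)
        if nxt == self.read:  # ring is full; the producer has to wait
            return False
        self.slots[self.write] = packet
        self.write = nxt
        return True

    def pop(self) -> Optional[str]:
        if self.read == self.write:  # ring is empty; nothing to execute
            return None
        packet = self.slots[self.read]
        self.slots[self.read] = None
        self.read = (self.read + 1) % len(self.slots)
        return packet


ring = CommandRing(size=4)  # holds at most 3 packets
for packet in ["draw", "dispatch", "copy"]:
    ring.push(packet)
print(ring.push("extra"))  # False: ring is full
print(ring.pop())          # draw
```

In this picture, "async compute" means the hardware services more than one such ring at the same time, with compute shaders fed from a separate ring alongside the graphics one.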

These threads, so painful.

From the quotes in the OP:

Why is Nvidia even reporting this feature as functional and then asking the developer to manually disable it again? What a mess.

the only 'vendor' specific code is for Nvidia where we had to shutdown async compute. By vendor specific, I mean a case where we look at the Vendor ID and make changes to our rendering path. Curiously, their driver reported this feature was functional but attempting to use it was an unmitigated disaster in terms of performance and conformance so we shut it down on their hardware.

We disabled it at the request of Nvidia, as it was much slower to try to use it than to not.
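The "look at the Vendor ID and make changes to our rendering path" step Oxide describes can be sketched roughly as follows. The PCI vendor IDs (0x10DE for NVIDIA, 0x1002 for AMD, 0x8086 for Intel) are real, and on Windows they are what `DXGI_ADAPTER_DESC::VendorId` reports. Everything else here is a hypothetical illustration, not Oxide's code: `choose_render_path`, the `driver_reports_async` flag and the deny-list.

```python
from dataclasses import dataclass

# Real PCI vendor IDs (as reported in DXGI_ADAPTER_DESC::VendorId on Windows).
VENDOR_NVIDIA = 0x10DE
VENDOR_AMD = 0x1002
VENDOR_INTEL = 0x8086

# Hypothetical per-vendor override list: vendors whose drivers report async
# compute as working, but where using it measured slower than not using it.
ASYNC_COMPUTE_DENYLIST = {VENDOR_NVIDIA}


@dataclass(frozen=True)
class RenderPath:
    use_async_compute: bool
    reason: str


def choose_render_path(vendor_id: int, driver_reports_async: bool = True) -> RenderPath:
    """Trust the (hypothetical) capability report unless the vendor is on the
    override list, in which case fall back to the non-async path."""
    if not driver_reports_async:
        return RenderPath(False, "driver does not report async compute")
    if vendor_id in ASYNC_COMPUTE_DENYLIST:
        return RenderPath(False, "vendor-specific override")
    return RenderPath(True, "async compute enabled")


print(choose_render_path(VENDOR_NVIDIA))
print(choose_render_path(VENDOR_AMD))
```

The point of the sketch is the ordering: the driver's report is checked first, and the vendor check exists only because that report could not be trusted.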


*shrug* This isn't particularly rare. Intel drivers have been guilty of this. I wouldn't be surprised if this has happened with AMD drivers too.

Is this the reason AMD is much better at Bitcoin mining?

This may contribute, but more importantly AMD GPUs had shift and integer operations that Nvidia cards did not have until Maxwell. Note that even pre-GCN AMD cards were faster than Nvidia cards at this. These operations made them much more suitable for hashing algorithms; AMD cards were used for brute-force password cracking for quite a while.
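To illustrate why native bit operations matter here: SHA-256, the hash that Bitcoin mining grinds through, is built largely from 32-bit right-rotations, XORs and additions. Without a native rotate (or funnel-shift) instruction, each rotation has to be emulated with two shifts and an OR. The Python sketch below shows that emulation and SHA-256's Σ0 and Σ1 functions. The operation counts in the comments are simple arithmetic, not measured GPU instruction counts.

```python
MASK32 = 0xFFFFFFFF


def rotr32(x: int, n: int) -> int:
    """Rotate a 32-bit word right by n bits.

    Emulated with two shifts and an OR; hardware with a native rotate
    (or funnel-shift) instruction can do this in a single operation."""
    n %= 32
    return ((x >> n) | (x << (32 - n))) & MASK32


def big_sigma0(a: int) -> int:
    # SHA-256 Σ0: three rotations and two XORs.
    # Emulated: 3 * (2 shifts + 1 OR) + 2 XORs = 11 ops; with native rotate: 3 + 2 = 5 ops.
    return rotr32(a, 2) ^ rotr32(a, 13) ^ rotr32(a, 22)


def big_sigma1(e: int) -> int:
    # SHA-256 Σ1: same shape, different rotation amounts.
    return rotr32(e, 6) ^ rotr32(e, 11) ^ rotr32(e, 25)


print(hex(rotr32(0x12345678, 8)))  # 0x78123456
```

SHA-256 evaluates Σ0 and Σ1 in every one of its 64 rounds, so the cost of a missing rotate instruction adds up quickly across millions of hashes.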


Nvidia also reported the 970 as a 4GB GPU.

Lemonte

Member

Do I have to start regretting buying a GTX 970 a few months back?

I'm starting to and my 970 isn't even 2 weeks old.

derExperte

Member

Well, it wouldn't exactly be a surprise that Nvidia got fat on their success and missed the boat on something important.

It has happened a few times over the years, but AMD could never profit from it in a meaningful way, so I'm not holding my breath. Still, everything that helps them is good for us imo.

Hmm, now that I think about it, it could be drivers, as Nvidia cards have their own queue engine.

(someone more knowledgeable correct me)

edit: Mahigan from Hardforum theorized that Maxwell 2 AWS does not function independently out of order with error checking.

Doesn't async timewarp on the Oculus Rift use this?

Don't confuse async timewarp [an engine rendering technique for sampling the user's latest head position as late as possible] with async compute [taking advantage of multiple hardware pipelines feeding the Compute Units, and filling the "empty spots" in the rendering pipeline with more tasks]. AMD intentionally increased the number of ACE pipelines in GCN 1.2 so devs can extract full performance from their cards [essentially, all the DX9/11 cards we have used for years have wasted a lot of their performance].

The first implementation of async compute in The Tomorrow Children on PS4 allowed GPU tasks to be bunched up into a tighter schedule that was previously full of holes:

An 18% performance increase just from going from the traditional rendering pipeline to taking advantage of Radeon ACEs [and that's just the first implementation of the tech].
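The "filling the holes" idea can be shown with a toy timeline model. All of the following are hypothetical assumptions. Time is split into equal slots. Each graphics slot either keeps the compute units busy (`G`) or leaves them idle (`.`). Each compute job is independent and takes exactly one slot. Serial submission runs the compute jobs after the graphics work, while async compute drops them into idle slots first. The frame pattern is made up and has nothing to do with the 18% figure above.

```python
def serial_schedule(frame: str, compute_jobs: int) -> str:
    """Graphics work (idle gaps included) finishes first, then the compute jobs run."""
    return frame + "C" * compute_jobs


def async_schedule(frame: str, compute_jobs: int) -> str:
    """Compute jobs are slotted into idle gaps ('.') in the graphics timeline;
    any jobs left over run after the graphics work ends."""
    timeline = []
    remaining = compute_jobs
    for slot in frame:
        if slot == "." and remaining > 0:
            timeline.append("C")  # an idle gap now does compute work
            remaining -= 1
        else:
            timeline.append(slot)
    return "".join(timeline) + "C" * remaining


frame = "GG.G..GG.G"  # 10 slots, 4 of them idle
print(serial_schedule(frame, 5), len(serial_schedule(frame, 5)))  # GG.G..GG.GCCCCC 15
print(async_schedule(frame, 5), len(async_schedule(frame, 5)))    # GGCGCCGGCGC 11
```

In this toy case the frame shrinks from 15 to 11 slots. The real gain depends on how many holes the graphics workload actually leaves and on the compute jobs not fighting the graphics work for the same resources.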

The version of GCN that the Xbone uses has 4x fewer ACEs, and I think GeForce cards have a similarly sparse setup.

edit - video explanation of the asynchronous compute

https://www.youtube.com/watch?v=v3dUhep0rBs

Portugeezer

Member

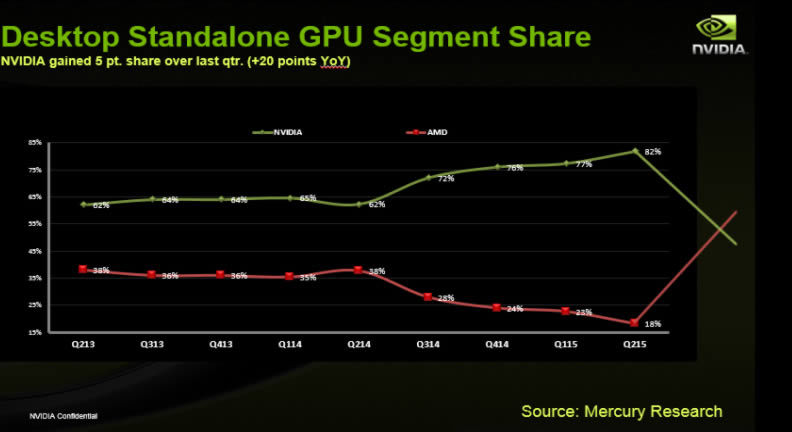

Nvidia at 82% share or something atm.

It's going to be an evil GPU monopoly.

Doubtful. Nvidia has the marketing monies and AMD does not. AMD is going to have to have multiple games that trounce Nvidia's Pascal performance for a market shift like that. They may get a slight up-tick if some people lose their shit and decide to bank on what could be rather than what is.

dr. apocalipsis

Banned

The biggest DX12 improvements are still the draw-call and memory-management enhancements, so every GPU, including Fermi or GCN 1.0, will enjoy the DX12 performance boost.

That aside, Nvidia GPUs being bad at switching between compute and graphics tasks is old news. We have all suffered FPS tanking when activating PhysX, even on high-end GPUs.


justsomeguy

Member

Tough call then... DX11 is still the key performance requirement today. How long until async compute is too important to be ignored and DX11 support has faded?

Foutrologue

Banned

Async compute is part of DX12, so every GPU "supports" it even if it's not implemented in hardware, which is the case with NVIDIA's current GPUs. To quote RedLynx's Sebbi:

https://forum.beyond3d.com/posts/1868942/


It is practically the same as what happens on CPUs. You can create any number of threads (queues) even if your CPU (GPU) has just a single core (runs a single command stream). If more threads (queues) are active at the same time than the hardware supports, they will be periodically context switched.

So AMD GPU: HW Support for Async Compute

NVIDIA GPU: Async Compute code will run single thread
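Sebbi's CPU analogy can be sketched as a small scheduling model. All of the following are hypothetical assumptions. Each queue holds some units of independent work. `run_time_sliced` models a single command stream that runs one queue for up to `slice_len` units and pays `switch_cost` every time it context-switches to a different queue. `run_concurrent` is an idealized case where every queue executes at once. Real GPUs share the same execution units between queues, so `run_concurrent` is an optimistic bound, not a prediction.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


def run_time_sliced(queues: List[int], slice_len: int, switch_cost: int) -> int:
    """One hardware command stream servicing several software queues, round-robin.

    Runs a queue for up to `slice_len` units of work, then moves on; every
    change to a different queue costs `switch_cost` units. Returns elapsed time."""
    if slice_len <= 0:
        raise ValueError("slice_len must be positive")
    pending: Deque[Tuple[int, int]] = deque((i, w) for i, w in enumerate(queues) if w > 0)
    elapsed = 0
    current: Optional[int] = None
    while pending:
        qid, work = pending.popleft()
        if current is not None and current != qid:
            elapsed += switch_cost  # context switch to another queue
        current = qid
        ran = min(slice_len, work)
        elapsed += ran
        if work > ran:
            pending.append((qid, work - ran))  # back of the line for its next slice
    return elapsed


def run_concurrent(queues: List[int]) -> int:
    """Idealized hardware that runs every queue at once: time = longest queue."""
    return max(queues, default=0)


work = [6, 4]  # units of work in a graphics queue and a compute queue
print(sum(work))                                          # 10 units of actual work
print(run_time_sliced(work, slice_len=2, switch_cost=1))  # 14: 10 work + 4 switches
print(run_concurrent(work))                               # 6
```

In the time-sliced case the async code still runs correctly; it just takes longer than the total work because of the switches, which is the "penalty" in question.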

"Our belief is that by the middle of the PlayStation 4 console lifetime, asynchronous compute is a very large and important part of games technology." - Mark Cerny

More reading material from a few months back:

http://www.anandtech.com/show/9124/amd-dives-deep-on-asynchronous-shading

http://wccftech.com/amd-improves-dx12-performance-45-gpu-asynchronous-compute-engines/

We are just about to enter the "middle of the PlayStation 4 console lifetime", and with big help from AMD, low-level APIs will arrive on PC at the same time.


dragonelite

Member


So AMD GPU: HW Support for Async Compute

NVIDIA GPU: Async Compute code will run single thread

So you can just program async, with a penalty on Nvidia hardware?

Maybe the performance gains can nullify the performance penalty.

Foutrologue

Banned

More up to date is Ubisoft RedLynx's SIGGRAPH presentation.

http://advances.realtimerendering.c...iggraph2015_combined_final_footer_220dpi.pptx


The most obvious gain of DX12 is moving CPU-side processing away from DX11's old single-threaded focus to a fully multithreaded approach. After that, devs get full control over the GPU and the memory they access, including features such as async compute.

DX11 is here to stay because it is easy, but DX12 will be there for studios [or engines] that are focused on performance [pls Ubi, switch the AC games (even the old ones) to DX12 ASAP].
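The "full multithreaded approach" mostly means that several CPU threads can record command lists in parallel, and then one thread submits them in a fixed order. In D3D12, that order is an array of command lists handed to `ID3D12CommandQueue::ExecuteCommandLists`. The Python sketch below mirrors only that structure. `record_command_list` and the string "commands" are hypothetical stand-ins. Because of Python's GIL, the sketch shows the fork/join shape, not a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def record_command_list(worker_id: int, draws: range) -> List[str]:
    """Hypothetical stand-in for recording one command list on a worker thread;
    each 'command' is just a string naming the draw it would issue."""
    return [f"w{worker_id}:draw{d}" for d in draws]


def build_frame(num_draws: int, num_workers: int) -> List[str]:
    """Split the frame's draws across workers, record in parallel, submit in order."""
    chunk = (num_draws + num_workers - 1) // num_workers  # ceiling division
    ranges = [range(i * chunk, min((i + 1) * chunk, num_draws)) for i in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # map() yields results in submission order, whichever thread finishes first.
        command_lists = list(pool.map(record_command_list, range(num_workers), ranges))
    # Single submission point, like handing an ordered array of lists to one queue.
    submitted: List[str] = []
    for cl in command_lists:
        submitted.extend(cl)
    return submitted


print(build_frame(num_draws=6, num_workers=3))
# ['w0:draw0', 'w0:draw1', 'w1:draw2', 'w1:draw3', 'w2:draw4', 'w2:draw5']
```

Recording is spread across workers, but the submission order stays deterministic, which is what lets an engine parallelize CPU work without changing what the GPU draws.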

icecold1983

Member

The future doesn't look good for Nvidia. Even without this, AMD has been catching up quickly in performance as more and more games are designed around GCN because of the consoles.

please no.


For people wondering why Nvidia is doing a bit better in DX11 than in DX12: that's because Nvidia optimized the DX11 path in their drivers for Ashes of the Singularity. With DX12 there are no tangible driver optimizations to make, because the game engine speaks almost directly to the graphics hardware, so none were made. Nvidia is at the mercy of the programmers' talents, as well as their own Maxwell architecture's thread-parallelism performance under DX12. The developers programmed for thread parallelism in Ashes of the Singularity in order to better draw all those objects on the screen. Therefore, what we're seeing in the Nvidia numbers is the Nvidia draw-call bottleneck showing up under DX12.

Nvidia works around this with its own optimizations in DX11 by prioritizing workloads and replacing shaders. Yes, the Nvidia driver contains a compiler which recompiles and replaces shaders that are not fine-tuned to their architecture, on a per-game basis. Nvidia's driver is also multi-threaded, making use of idle CPU cores to recompile/replace shaders. The work Nvidia does in software under DX11 is the work AMD does in hardware under DX12, with their Asynchronous Compute Engines.
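The per-game shader replacement described in the quote could look roughly like the lookup below. The driver hashes each incoming shader and, if the (game, hash) pair is in a table its engineers shipped, compiles a hand-tuned version instead. This is a hypothetical sketch: the table layout, the `ashes.exe` executable name, the shader strings and the `compile_shader` function are illustrative assumptions, not how any actual Nvidia driver is organized.

```python
import hashlib
from typing import Dict, Tuple

# Hypothetical driver-side table: (game executable, shader hash) -> tuned shader.
ReplacementTable = Dict[Tuple[str, str], str]


def shader_hash(source: str) -> str:
    """Fingerprint a shader by hashing its source text."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()[:16]


def compile_shader(game_exe: str, source: str, table: ReplacementTable) -> str:
    """Return a label for what gets compiled: the tuned replacement if this
    game's shader is in the table, otherwise the application's own shader."""
    tuned = table.get((game_exe, shader_hash(source)))
    if tuned is not None:
        return f"tuned:{tuned}"
    return f"generic:{source}"


table: ReplacementTable = {
    ("ashes.exe", shader_hash("float4 slow_lighting()")): "float4 fast_lighting()",
}
print(compile_shader("ashes.exe", "float4 slow_lighting()", table))      # tuned:float4 fast_lighting()
print(compile_shader("othergame.exe", "float4 slow_lighting()", table))  # generic:float4 slow_lighting()
```

Keying on both the game and the shader hash is what makes the replacement per-game: the same shader text from another executable falls through to the generic path.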

I like this quote: where Nvidia's drivers gave them big boosts before, they're losing in DX12 because drivers aren't nearly as important.

Pretty much what AMD has been on about for the past 3 years. While Nvidia focused on the market as it is, AMD got in early on DX12, and it has cost them dearly in their already low market share. Now that DX12 is the current focus, I'm sure Pascal won't have these problems, and it honestly can't if they want to compete. When Pascal comes around, we'll probably start seeing the first DX12 games hit the market, and if their top-tier card is competing or even winning, that's all anyone is going to be talking about, not Maxwell performance.


Not sure; even UE4 doesn't seem to support it (except on Xbox One).

icecold1983

Member


Pascal doesn't seem to be a new architecture from the info available. It's a Maxwell 2 derivative with HBM and FP16 support, according to all current information.


The problem with Pascal is this:

A GPU architecture takes around 3-4 years from planning to fruition.

I honestly want to believe that Nvidia had the vision, 4 years ago, to see that they needed to go parallel.

DX12 started being talked about last year, and this year it was released. Vulkan comes next year, and so does Pascal.

I'm not that convinced that Nvidia is ready.

dr. apocalipsis

Banned

People painting a bleak future for Nvidia is funny, because even that biased Ashes of the Singularity benchmark, geared towards AMD hardware even though the engine it's based on doesn't show that strange behaviour, still shows better performance on the Nvidia side.

Usual AMD forum FUD.


Coulomb_Barrier

Member

Based Cerny.

IIRC it was a 290X versus a 980 Ti, which is two grades above and twice the price.

Then again, Fury didn't shine, so more data is indeed needed.

NumberThree

Member

There is no such thing as an async shader, there is an async pipe/ring (generally exposed as an 'async device') upon which compute shaders run.

These threads, so painful.

AMD doesn't agree

dr. apocalipsis

Banned

That's the Oxide dev. Is the company aligned with AMD?

This pic should be in the OP, imo.

Any reason for Nvidia not to switch to a parallel architecture from Pascal onwards? By the time a meaningful number of DX12 games are out (2-3 years maybe?), I guess most people will probably be in for a GPU upgrade anyway. And maybe I'm missing something, but I can't see any massive differences in the benchmark graphs; I only skimmed through the ComputerBase article tho.

Nostremitus

Member

Why would Nvidia do this? Why are they being dumb?

Nvidia has the market share and the money to focus on what works best "now", then release something different when it's needed. AMD doesn't have that luxury. They have to aim at future-proofing because they don't have the R&D budget to constantly adapt like Nvidia. So when they release a new microarchitecture, it's aimed a few years ahead and may not play well right off the bat with what is currently popular. This also means that when AMD has a misstep, they are stuck with that mistake for years (as with their CPUs).

Should I throw my 970 in the garbage and put my 7950 back in?

yes

Dictator93

Member


I am pretty sure it's not as simple as NV's research budget being about "the now" and AMD's being about "the future". The world is more diverse than the picture you paint. If you look at any data point other than async compute (and it still has not even been proven that Maxwell 2 doesn't support it), you would see that NV also has hardware features that are "future oriented".

dragonelite

Member

Should I throw my 970 in the garbage and put my 7950 back in?

No, you should send the 970 my way

Coulomb_Barrier

Member

This pic should be on OP, imo.

What does this prove, exactly?

thequickandthedead

Member

Why did you decide to buy a G-Sync monitor 9+ months before you can actually use the main feature?

Pardon my ignorance but what main feature are you talking about? I thought G-Sync monitors just eliminated screen-tearing by syncing your GPU to the monitor's refresh rate?

Poetic.Injustice

Member

What does this prove, exactly?

TEH BIAS.

icecold1983

Member

I am pretty sure NVs research budget is not about "the now" and AMDs "is about the future". The world is more diverse than the picture you paint. Because if you look at any other data point than async compute (which still has not even been proven that Maxwell 2 does not support it) you would see NV also has hardware features which are "future oriented".

They don't seem to be nearly as beneficial as async compute. Also, consoles not supporting them pretty much makes them useless. You'll see the occasional two titles a year use them to do almost nothing to enhance the visuals. They will probably become the new tessellation.
