Dictator93

Member

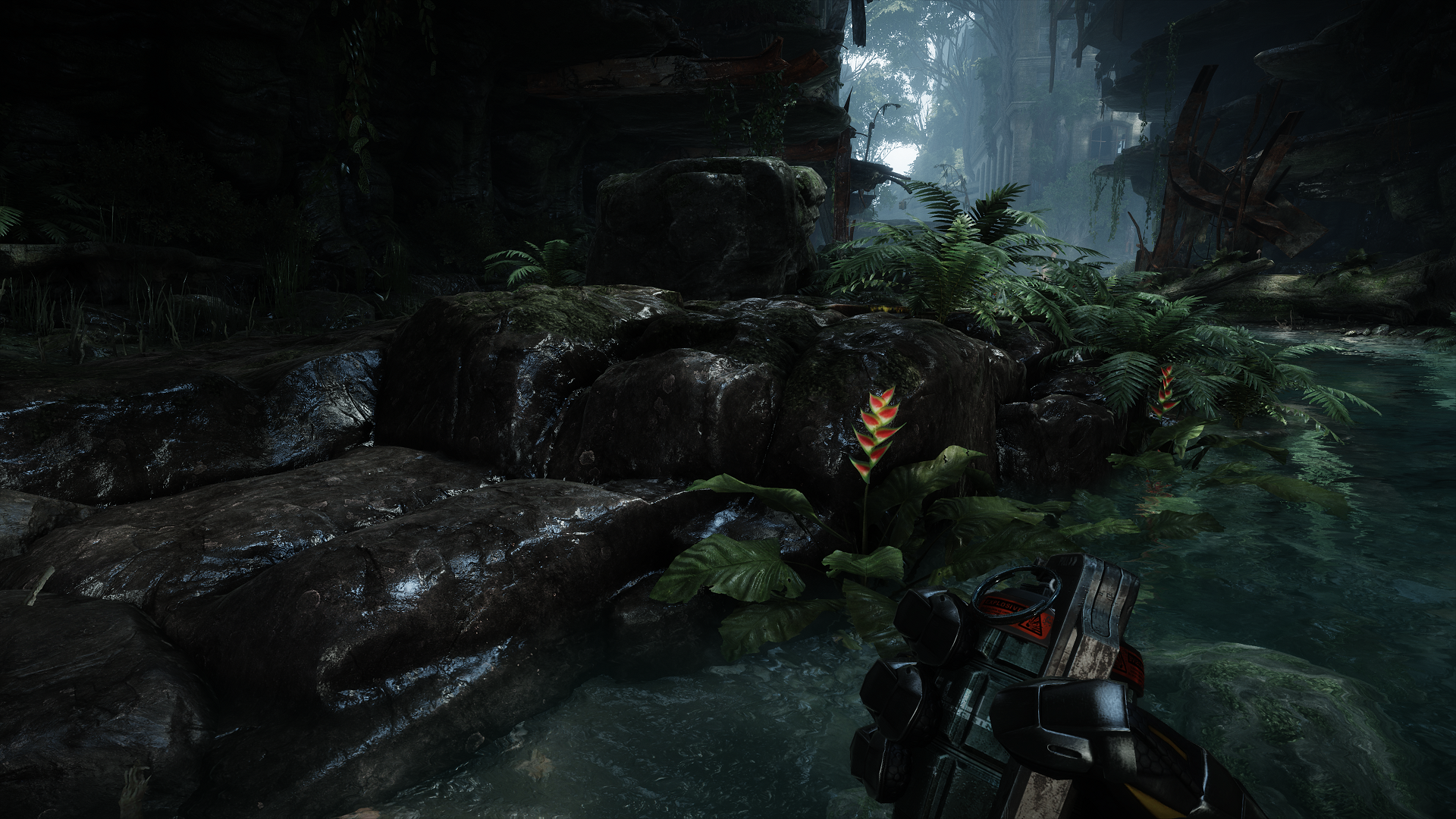

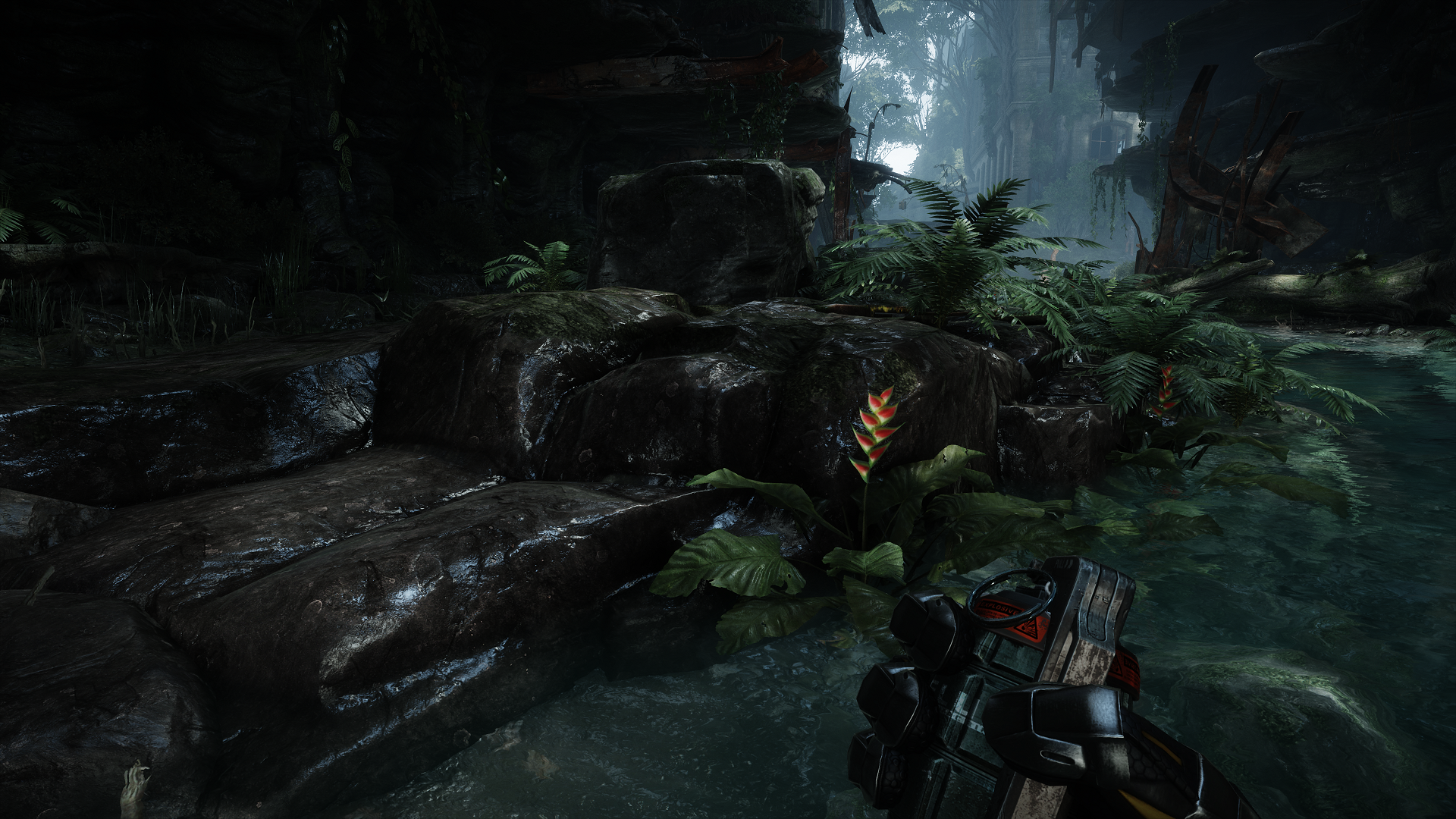

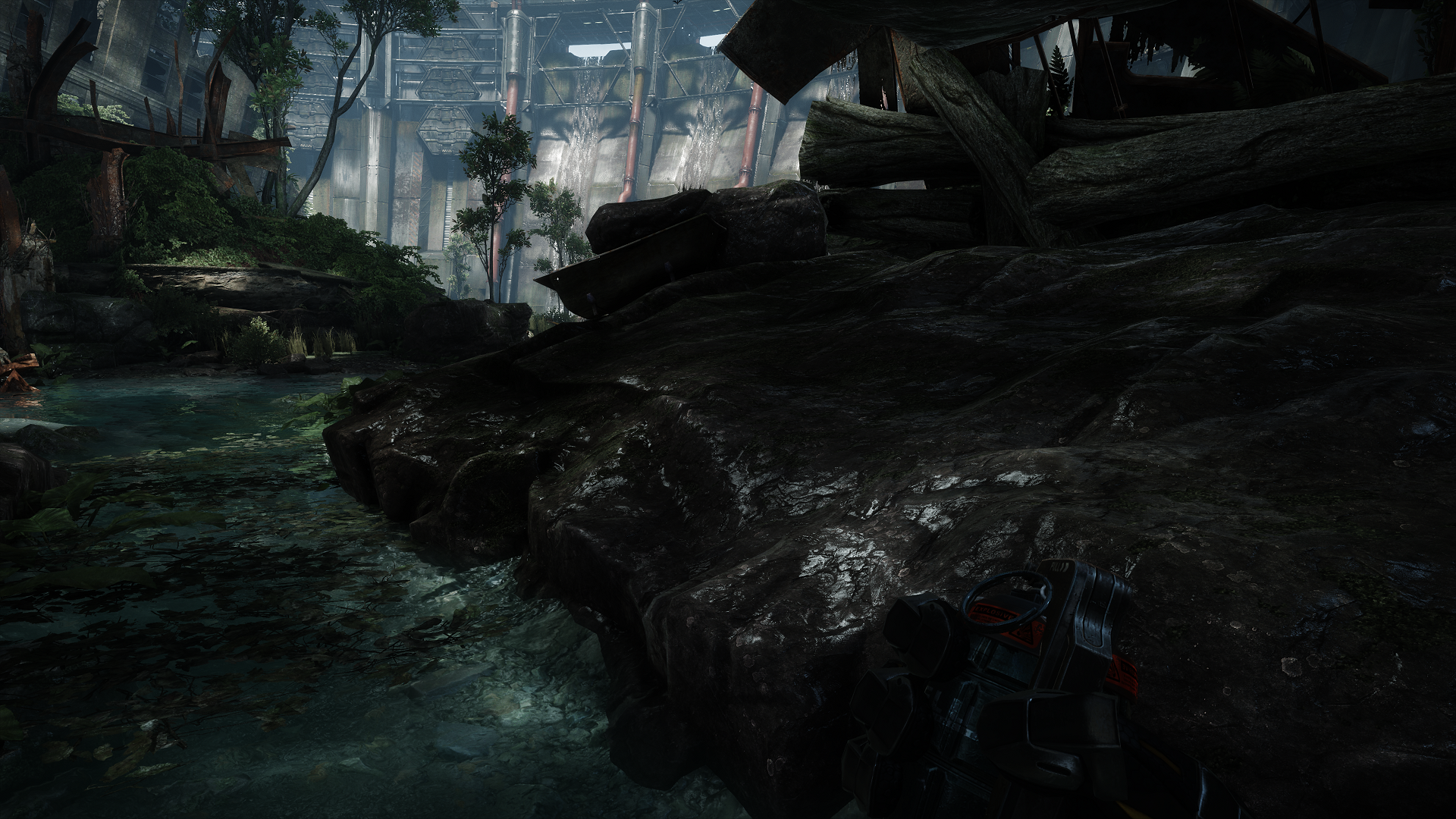

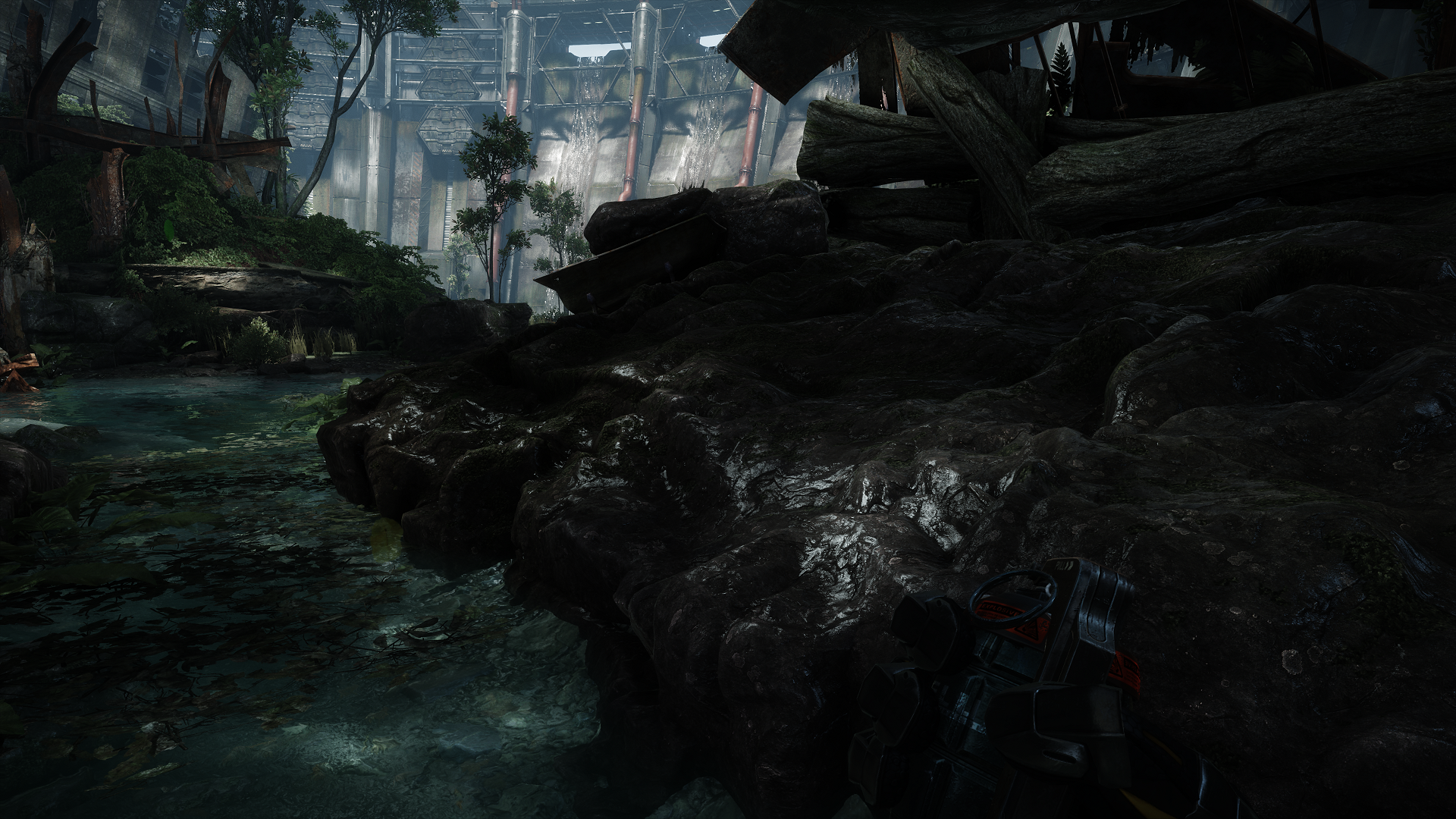

Not seeing the difference in these shots, to be quite honest; the curves don't seem massively impacted by tessellation. Are there specific points in any of those images that look substantially worse? I mean, the usual candidates (perfect circles) like the weapon sights or the curves on that big rock seem identical. Crysis 3 is a bit of a pain in the arse for showing this anyway, as the screenspace effects like CA are completely washing out details.

Surely you opened them in tabs and switched between them?

The first and last comparisons show the biggest difference, but those pictures do more to hurt his argument than mine imo

Would you say the non-rounded and non-displaced geometry looks better?

One single graphical effect is rarely huge enough to single-handedly change every pixel of the entire image, and expecting that of tessellation is unrealistic given what it does (round or displace geometry). What makes these differences not significant, and/or makes them work against my argument?

By that logic, the differences between console and PC versions are always insignificant, because a dev rarely builds an entirely different renderer, asset catalogue, and so on for PC hardware.