justsomeguy

Member

Basically for any issue like this, go read what sebbbi says and ignore everyone else. He understands the tech and doesn't have any technology bias that I've noticed.

It will be interesting to see how some people here will try to discredit Sebastian now.

Yep. I'm not going to pretend im super educated about this stuff, but I know enough that there's not enough info available right now, particularly with actual games being built with DX12 in mind to be worried about it at this point.

My current most anticipated games for PC are Just Cause 3 and Deus Ex Mankind Divided. They're both Square Enix games so they have an AMD marketing deal. Deus Ex will even use DirectX 12.

Should I expect these to perform much better on an AMD card, or at least the DX12-enabled Deus Ex?

First of all, there is no need for personal attacks.

A CPU is a processor that consists of a small number of big processing cores. A GPU is a processor that consists of a very huge number of small processing cores. Therefore most home PCs have multiple processors. "APU" is a marketing term for a single processor that consists of different kinds of processing cores. In the case of the PS4, the APU has two Jaguar modules with four x86 cores each and 18 GCN compute units with 64 shader cores each. You distinguish processors as the following: Single core (like Intel Pentium), multi core (Intel Core i7 or any GPU), hetero core (APUs like the one in PS4) and cloud core (Microsoft Azure for example).

If you take a look back, the evolution of computer technology was always about maximum integration. The reason for that you is want to minimize latency as much as possible. A couple of years ago, GPUs only had fixed-function hardware. That means that every core of the GPU was specialized for a certain task. That changed with the so called unified shader model. Today, the shader cores of a modern GPU are freely programmable. Just think of them as extremely stupid CPU cores. The advantage of a freely programmable GPU, however, is that you have thousands of those cores. The PS4 has 1156 shader cores. That makes a GPU perfectly suited for tasks that benefit from mass parallelization like graphics rendering. You can also utilize them for general purpose computations (GPGPU) which, in theory, opens up a whole new world of possibilities since the brute force of a GPU is much higher than the computational power of a traditional CPU. In practice, however, the possibilities of GPGPU are limited by latency.

If you want to do GPGPU on a traditional gaming PC, you have to copy your data from your RAM pool over the PCIe to your VRAM pool. The process of copying costs latency. A roundtrip from CPU -> GPU -> CPU usually takes so long that the performance gain from utilizing the thousands of shader cores gets immediately eaten up by the additional latency: Even if the GPU is much faster at solving the task than the CPU, the process of copying the data back and forth will make the GPGPU approach slower than letting the CPU do it on its own. That's the reason why GPGPU today is only used for things that don't need to be send back to the CPU. The possibilities on a traditional PC are very limited.

The next step in integration is the so-called hetero core processor. You integrate the CPU cores as well as the GPU shader cores on a single processor die and give them one unified RAM pool to work with. That will allow you to get rid of that nasty copy overhead. Till this day, the PS4 has the most powerful hetero core processor (2 TFLOPS @ 176GB/s) available. Not only that, since the APU in PS4 was built for async compute (see Cerny interviews), it can do GPGPU without negatively affecting graphics rendering performance. It's a pretty awesome system architecture, if you want my opinion.

The only problem is, that PC gamers don't have a unified system architecture. The developers of multiplatform engines have to consider that fact. 1st-party console devs can fully utilize the architecture, though.

You got me with the coding for GDDR5 sentence.

Somebody should put it as your status.

I do wonder though, do you work for Sony's PR team?First of all, there is no need for personal attacks.

A CPU is a processor that consists of a small number of big processing cores. A GPU is a processor that consists of a very huge number of small processing cores. Therefore most home PCs have multiple processors. "APU" is a marketing term for a single processor that consists of different kinds of processing cores. In the case of the PS4, the APU has two Jaguar modules with four x86 cores each and 18 GCN compute units with 64 shader cores each. You distinguish processors as the following: Single core (like Intel Pentium), multi core (Intel Core i7 or any GPU), hetero core (APUs like the one in PS4) and cloud core (Microsoft Azure for example).

If you take a look back, the evolution of computer technology was always about maximum integration. The reason for that you is want to minimize latency as much as possible. A couple of years ago, GPUs only had fixed-function hardware. That means that every core of the GPU was specialized for a certain task. That changed with the so called unified shader model. Today, the shader cores of a modern GPU are freely programmable. Just think of them as extremely stupid CPU cores. The advantage of a freely programmable GPU, however, is that you have thousands of those cores. The PS4 has 1156 shader cores. That makes a GPU perfectly suited for tasks that benefit from mass parallelization like graphics rendering. You can also utilize them for general purpose computations (GPGPU) which, in theory, opens up a whole new world of possibilities since the brute force of a GPU is much higher than the computational power of a traditional CPU. In practice, however, the possibilities of GPGPU are limited by latency.

If you want to do GPGPU on a traditional gaming PC, you have to copy your data from your RAM pool over the PCIe to your VRAM pool. The process of copying costs latency. A roundtrip from CPU -> GPU -> CPU usually takes so long that the performance gain from utilizing the thousands of shader cores gets immediately eaten up by the additional latency: Even if the GPU is much faster at solving the task than the CPU, the process of copying the data back and forth will make the GPGPU approach slower than letting the CPU do it on its own. That's the reason why GPGPU today is only used for things that don't need to be send back to the CPU. The possibilities on a traditional PC are very limited.

The next step in integration is the so-called hetero core processor. You integrate the CPU cores as well as the GPU shader cores on a single processor die and give them one unified RAM pool to work with. That will allow you to get rid of that nasty copy overhead. Till this day, the PS4 has the most powerful hetero core processor (2 TFLOPS @ 176GB/s) available. Not only that, since the APU in PS4 was built for async compute (see Cerny interviews), it can do GPGPU without negatively affecting graphics rendering performance. It's a pretty awesome system architecture, if you want my opinion.

The only problem is, that PC gamers don't have a unified system architecture. The developers of multiplatform engines have to consider that fact. 1st-party console devs can fully utilize the architecture, though.

Except the little difference that Tessellation wasn't available in DX10 at all, in contrast to Async Compute in DX12.This is like saying Tessellation was a key feature of DX10 because many cards of the era were able to support it, but it wasn't a requisite of DX set until DX11.

Who knows, maybe Horse Armour is right and it becomes a requisite for DX13.

I would claim it is.Interesting - so maybe the XBox's 2 ACE units are fine after all, if I've understood correctly.

I think for most cases they did.It's too early to judge the value of Sony's additional resources. They didn't pack 8 ACEs and 64 queues for no reason.

He's not necessarily wrong. The access granularity of memory becomes bigger with HBM, which is affecting which code is ideal.Sweet lord...

This is a very wrong speculation.Beyond3D have gotten more results, and it Async Computing in Maxwell 2 is "supported" through the Driver offloading the Computing calculations to the CPU and back, hence the huge delay added, and why it was faster for Oxide t

I remember that avatar, I think he was banned like a year ago, at that time he was following Pope Cerny and the hUMA church.It seems he made a new account.

I do wonder though, do you work for Sony's PR team?

Except the little difference that Tessellation wasn't available in DX10 at all, in contrast to Async Compute in DX12.

Actually it is a requisite in DX12, but there is no requisite how to implement it.

Kollock said:I don't believe there is any specific requirement that Async Compute be required for D3D12, but perhaps I misread the spec.

He's not necessarily wrong. The access granularity of memory becomes bigger with HBM, which is affecting which code is ideal.

Okay, let's get this out of the way once and for all:

Okay, let's get this out of the way once and for all:

I never received a single ban on NeoGAF.

More than a year ago, there was a small group of NeoGAF users, all of them PC gamers, that were extremely angry about the things I wrote.

No.No, stop this already. That claim IS WRONG.

Andrew Lauritzen said:When someone says that an architecture does or doesn't support "async compute/shaders" it is already an ambiguous statement (particularly for the latter). All DX12 implementations must support the API (i.e. there is no caps bit for "async compute", because such a thing doesn't really even make sense), although how they implement it under the hood may differ. This is the same as with many other features in the API.

Andrew said:From an architecture point of view, a more well-formed question is "can a given implementation ever be running 3D and compute workloads simultaneously, and at what granularity in hardware?" Gen9 cannot run 3D and compute simultaneously, as we've referenced in our slides. However what that means in practice is entirely workload dependent, and anyone asking the first question should also be asking questions about "how much execution unit idle time is there in workload X/Y/Z", "what is the granularity and overhead of preemption", etc. All of these things - most of all the workload - are relevant when determining how efficiently a given situation maps to a given architecture.

Without that context you're effectively in making claims like 8 cores are always better than 4 cores (regardless of architecture) because they can run 8 things simultaneously. Hopefully folks on this site understand why that's not particularly useful.

... and if anyone starts talking about numbers of hardware queues and ACEs and whatever else you can pretty safely ignore that as marketing/fanboy nonsense that is just adding more confusion rather than useful information.

I can't follow you here. The context was optimized code for GDDR5 and HBM on Fury. (although I believe HBM1 from Hynix has the same granularity like GDDR5, only later on they will double on this).Nothing to do. It is related to GCN being geometry limited, thing that Nvidia exploited. Some time ago, I remember explaining in this forum how GPUs can't perfectly scale just by adding shader processors because they would hit some other architectural bottlenecks. This is one of those cases. Of course, many enthusiastic users reacted against me because it was against their agenda.

Andrews comment is pretty valuable thanks for sharing.Another valuable thing Andrew is stating:

I can't follow you here. The context was optimized code for GDDR5 and HBM on Fury. (although I believe HBM1 from Hynix has the same granularity like GDDR5, only later on they will double on this).

Yes, the scheduling is non-trivial and not really something an application can do well either, but GCN tends to leave a lot of units idle from what I can tell, and thus it needs this sort of mechanism the most. I fully expect applications to tweak themselves for GCN/consoles and then basically have that all undone by the next architectures from each IHV that have different characteristics. If GCN wasn't in the consoles I wouldn't really expect ISVs to care about this very much. Suffice it to say I'm not convinced that it's a magical panacea of portable performance that has just been hiding and waiting for DX12 to expose it.

I remember that avatar, I think he was banned like a year ago, at that time he was following Pope Cerny and the hUMA church.It seems he made a new account.

Allow me to be nitpicky.dr. apocalipsis is still arguing that multi-engine ergo ACE are not a DX12 specification and people still have not put him on ignore. What is the world coming to..

No, I agree, it shouldn't be in that case.ALso, I am pretty sure the differences between Gen 1 HBM and GDDR5 should not be the reasons why FUry X is performing only a bit better than A 290X in the benchmark. I do not think there is such a large distinction between the two... as was made above. NAmely you have to code differently for higher bandwidth...

No.

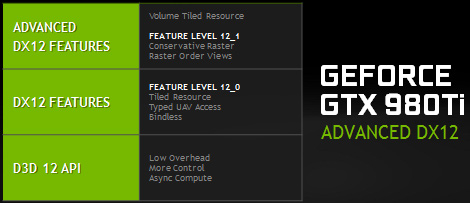

Look how Nvidia themself see it:

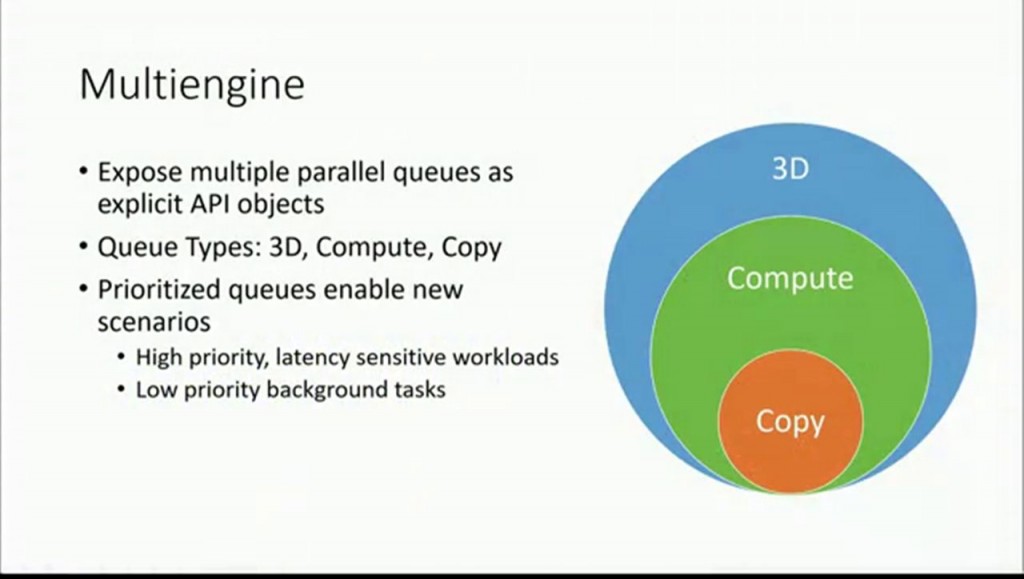

Multi-Engine is an API, part of DX12, which every vendor must support.

That's a statement also Andrew Lauritzen did from Intel:

... and if anyone starts talking about numbers of hardware queues and ACEs and whatever else you can pretty safely ignore that as marketing/fanboy nonsense that is just adding more confusion rather than useful information.

I can't follow you here. The context was optimized code for GDDR5 and HBM on Fury. (although I believe HBM1 from Hynix has the same granularity like GDDR5, only later on they will double on this).

dr. apocalipsis is still arguing that multi-engine ergo ACE are not a DX12 specification and people still have not put him on ignore. What is the world coming to..

I'm so confused. Are nVidia GPU's gimped when it comes to Async Computer or not??

I see. CAN they make a software workaround or are we just assuming they could?All this time NVIDIA has been bypassing DX10/11 limits through their propietary GPGPU solution CUDA. Now DX12 is standarizing all this compute stuff and we still have to know if Nvidia will be able to accomodate their current architectures to this new standard efficiently.

We know Nvidia has been pushing GPGPU since G80 era. We know Maxwell 2 has strong compute abilities where little Kepler (GTX700 consumer GPUs) doesn't.

All we need to know is if Nvidia will be able to make Maxwell cards work as DX12 wants or if they will need to make another software workaround. Basically, we are on the dark for now.

Also, what about cards such as my 660?

I'm just wondering if it would ever be capable, period. Bear in mind I use it for 1080p gaming with med/high settings.I'm no expert but I don't foresee your 660 doing very well, and it's decidedly not great at compute at all.

I really need help with your viewpoint.That slide is talking about DX12 features AND their benefits, the ability for Async Compute being a benefit, NOT a requisite. I was about to post that slide yesterday, but I thought devs word would suffice.

What. That's when the video ends

Yikes

http://www.twitch.tv/thetechreport/v/14316298

go to 01:21:05

This seems to explain why Oculus is using AMD gpus on all their demos.

Well, they explicit said that their demos ran on the 980ti (Valve said that too)

I really need help with your viewpoint.

Multi-Engine (Async compute/Async Copy) is like ExecuteIndirect an integral part of DX12.

It's not optional to support it or not, every vendor must support Multi-Engine.

This is a gross misrepresentation of what happened. You pretty much went off the rails at the end and ruined your already flimsy reputation with posts like this. That infamous Crysis thread was a mess too thanks in large parts to you. Trying to put the blame solely on others is just pathetic and I urge everyone to be very careful about believing anything W!CKED says. The 'don't attack me, attack my arguments' shtick is just part of the act, many people tried to reason with him back in the day but he'll wiggle around, evade, delete parts of comments he can't counter and post more bullcrap until someone gets fed up. Too bad some are starting to fall for it again.

If you need a prove, try searching for any of old my old posts (username W!CKED). They're all deleted.

Well, this sounds nicer to me.Multi-engine isn't Async compute/Async Copy.

It is the queue and priority structure that allows parallelization. Every DX11 card have it, every DX11 card will benefit from it on DX12. This is what allows Async Compute, but it is not limited to Async compute. This can, for example, allow a background texture streaming with a low level priority.

http://www.overclock.net/t/1569897/various-ashes-of-the-singularity-dx12-benchmarks/2130#post_24379702Kollock said:We actually just chatted with Nvidia about Async Compute, indeed the driver hasn't fully implemented it yet, but it appeared like it was. We are working closely with them as they fully implement Async Compute. We'll keep everyone posted as we learn more.

Also, we are pleased that D3D12 support on Ashes should be functional on Intel hardware relatively soon, (actually, it's functional now it's just a matter of getting the right driver out to the public).

http://www.overclock.net/t/1569897/various-ashes-of-the-singularity-dx12-benchmarks/2130#post_24379702

Curious to see what "fully implement Async Compute" actually means for Nvidia.

And I'm also very interested in the Intel results.

Well isn't that something.

Well isn't that something.

Yikes

http://www.twitch.tv/thetechreport/v/14316298

go to 01:21:05 (roughly, hang around for a bit)

This seems to explain why Oculus is using AMD gpus on all their demos.

Sounds like damage control to me

Did anyone actually get rid of their green gpu on the back of this?!

This thread is the fucking example why we can't have any real discussion about consoles capabilities here on GAF. Any positive for consoles is nucked to death by a very vocal minority who distributes personal attacks to anyone talking positively about console architecture.

I mean, seeing DerExperte spreading FUD about users talking here, despite those claims can be returned to him the same way, what the fuck am I reading really. It's gameFAQs level of insanity and it's really burning me down.

I walked by NeoGAF in 2007 coming from B3D. I was mostly interested in console architecture back in the day and games in general. I was frankly a PC gamer at this time and my PS2 was collecting dust. I remember the big flamewars on this board about 360 and PS3, that was very cringeworthy to read. I made an account but failed to see a reason about participating.

This thread proves me I was so right about this. That was a good time to talk about async compute because at this time we don't really know the fuck why PS4 was so designed around that feature. And the OP talks about having performance advantages while using it on consoles. I never implied that async compute would save the consoles or anything like that, or even making PS4 an highend PC or something. Never i was saying that, and i never will. I just can't have a serious discussion about this facet of the OP, some guys are totally allergic to that.

And yet, like that pink panther avatar guy, in every discussion I come I always find me flagged as a console warrior that shouldn't be trusted or something. I mean, seriously? Maybe i'm naive or very enthusiast about my PS4, i don't care. But why attacking me? Why do I represent something to be defended from. Seriously? There is no tech discussion to have here about consoles. I won't regret this shit bath.

I'll play some CIV games instead. (*wink* at the mod banning me last year while saying "go back playing your fantastic PS4 instead" while I was answering to a bunch of angry guys why I like to play on PS4 because reasons in a thread whining about why people like consoles).

Did anyone actually get rid of their green gpu on the back of this?!

Conclusion is that we dont have finalized drivers, so current async is not working as intended on Nvidia GPUs for developers.I just bought a second GTX970. I've read some of the posts in this thread previously. Tbh I don't think I understand the whole situtation, but it doesn't sound that severe to me.

Can anyone link me to a post / article without too much technical gibberish?

Why do you interpret a statement like that as damage control? It wasn't towards a comunity or towards a journalist, but towards a developer and he was just relaying it to us.

If someone did, I would laugh heartidly. Nothing out of this entire situation has pointed toward NV GPU's being difficient (Maxwell 2) at all.

Sorry you feel that way.