Oh my, tell me about my hidden agenda then. It's all part of my plan to ruin CDPR's reputation on GAF. And I would, if it wasn't for those meddling kids! /s

Why do the screenshots from here, named "The Witcher 3 new gorgeous screens", look like shit while this video looks better? It's an honest question.

People here are quick to call bullshots and bulltrailers(?) but this game gets the blind eye treatment for some reason. Don't just dismiss my point of view because it does not fit your worldview.

It was explained to you before, but I will do it again: the previous screenshots used the old renderer - the one used in The Witcher 2. This is how game development works - you start with something that doesn't look that fantastic and then improve it until you are limited by hardware.

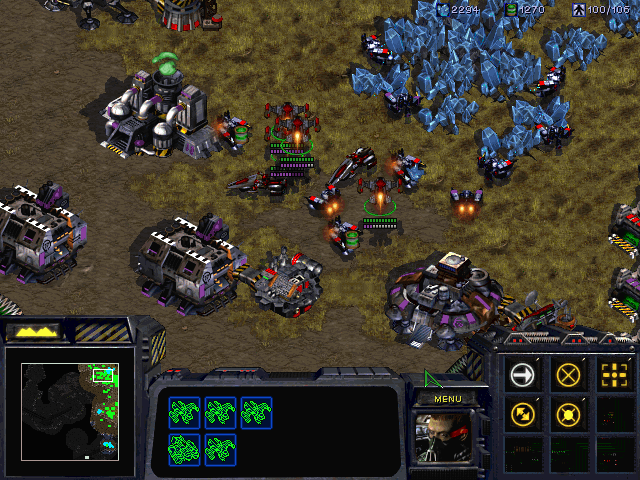

It's like asking why this screenshot from Starcraft looked so shit:

When the final version looked like that: