Wii/PS3/Kinect homebrewer rumor: Durango CPU clocked at 1.6ghz.

- Thread starter KojiKnight

But after the Core 2 Duos came out, AMD just couldn't really compete anymore other than in the value segment.

I'm not really sure what Intel really did with the Core 2 Duo line... the performance jump was gigantic. Maybe human sacrifices and demon contracts were involved, thus why AMD failed to compete.

dragonelite

Member

So really the whole thread is a bit pointless then?

Yep

Not sure if this will work.

As you can see, if you are doing shitty stuff with your cache, like not laying out data right, the CPU will sleep and do nothing while waiting for data to arrive.

Edit: Forgot to add source

https://docs.google.com/present/view?id=0AYqySQy4JUK1ZGNzNnZmNWpfMzJkaG5yM3pjZA&hl=en&pli=1
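
To put a rough number on the "laying out data right" point, here's a toy sketch. It assumes a direct-mapped 32KB cache with 64-byte lines and a 256x256 grid of 4-byte values; all of those parameters are made up for illustration and don't describe any real console CPU. It only counts misses, it doesn't measure time.

```python
def count_misses(addresses, line_size=64, num_lines=512):
    """Count misses in a toy direct-mapped cache for a sequence of byte addresses."""
    tags = [None] * num_lines          # which memory block each cache slot holds
    misses = 0
    for addr in addresses:
        block = addr // line_size      # memory block containing this byte
        slot = block % num_lines       # direct-mapped: one possible slot per block
        if tags[slot] != block:        # slot is empty or holds another block: miss
            tags[slot] = block
            misses += 1
    return misses


ROWS, COLS, ELEM = 256, 256, 4         # hypothetical grid of 4-byte values

# Walk the data in the order it is stored: 16 values share each 64-byte line.
row_major = (ELEM * (r * COLS + c) for r in range(ROWS) for c in range(COLS))
# Walk the same storage column by column: every access jumps 1024 bytes.
col_major = (ELEM * (r * COLS + c) for c in range(COLS) for r in range(ROWS))

row_misses = count_misses(row_major)
col_misses = count_misses(col_major)
print(row_misses, col_misses)
```

Same data, same amount of work, but in this toy model the badly ordered walk misses on every single access while the well ordered one misses once per 16. Each of those misses is a point where a real CPU would sit waiting on memory.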

But after the Core 2 Duos came out, AMD just couldn't really compete anymore other than in the value segment.

And Apple chose to switch to Intel at that time

Guess AMD and IBM did well because Intel made horrid CPUs.

Apple actually explained pretty well why GHz doesn't matter that much. It's just one part of a CPU's performance.

Please note, with a 1GHz and a 2GHz CPU that are otherwise identical, the 2GHz CPU would run up to twice as fast (less when the code is stuck waiting on memory). AFAIK Intel believed that CPUs would keep getting higher and higher clock rates when they made the Pentium 4. That didn't happen, and the later Pentium 4s ran really hot and didn't perform better than cheaper, lower-clocked CPUs from AMD.
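
A quick sketch of when doubling the clock really doubles the speed. This is a toy model, assuming run time is just compute cycles divided by clock plus a fixed amount of time waiting on memory; the cycle count and stall time are hypothetical numbers, not measurements of any chip.

```python
def run_time_s(compute_cycles, clock_hz, memory_stall_s):
    """Toy model: compute time scales with the clock, memory wait time does not."""
    return compute_cycles / clock_hz + memory_stall_s


CYCLES = 2_000_000_000   # hypothetical workload: 2 billion core cycles
STALL_S = 1.0            # hypothetical: 1 second in total spent waiting on memory

for label, stall in (("compute-bound", 0.0), ("memory-bound", STALL_S)):
    t_1ghz = run_time_s(CYCLES, 1e9, stall)
    t_2ghz = run_time_s(CYCLES, 2e9, stall)
    print(f"{label}: 1GHz -> 2GHz speedup = {t_1ghz / t_2ghz:.2f}x")
```

With no memory stalls the otherwise identical 2GHz chip is exactly twice as fast. Once part of the run time is spent waiting on memory, the same clock bump buys less.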

Thanks for that. I do know a moderate amount about PC tech but it was interesting to see them explain the MHz stuff.

Yep

Not sure if this will work.

As you can see, if you are doing shitty stuff with your cache, like not laying out data right, the CPU will sleep and do nothing while waiting for data to arrive.

Also interesting to see. It will be interesting to see which route MS and Sony take for their next machines.

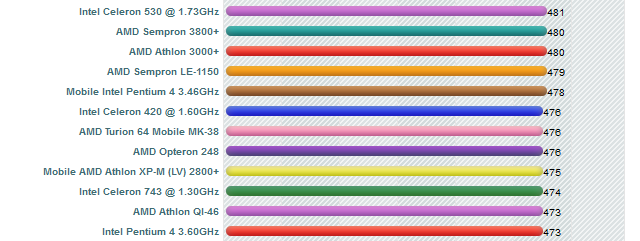

ITT, people think clock speeds have always been increasing. Here's a fun image:

A small collection of low-end (by today's standard) CPU benchmarks.

Look how the 1.30GHz Intel Celeron 743 performs comparably to the 3.60GHz Pentium 4. Both CPUs were released exactly one year apart.

Another fun fact: the Intel Core was derived from the Pentium 3 line, ditching the Pentium 4 architecture. Deriving from an older architecture isn't necessarily a bad thing.

The NetBurst architecture was a complete dud. In theory it was to be weaker at any given clock rate, but it should compensate with very high clock speeds. Unfortunately, these CPUs never reached the clock speeds necessary to compensate for the lower performance.
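
The trade-off can be sketched with the usual rule of thumb that throughput is roughly instructions per cycle (IPC) times clock. The IPC values below are hypothetical and picked only to make the arithmetic obvious; they are not measured figures for NetBurst or anything else.

```python
def throughput(ipc, clock_ghz):
    """Billions of instructions per second: instructions per cycle x GHz."""
    return ipc * clock_ghz


def break_even_clock_ghz(ipc_low, ipc_high, clock_high_ghz):
    """Clock a low-IPC design needs to match a high-IPC design's throughput."""
    return ipc_high * clock_high_ghz / ipc_low


# Hypothetical IPC values for illustration only.
LOW_IPC, HIGH_IPC = 0.5, 1.0
needed_ghz = break_even_clock_ghz(LOW_IPC, HIGH_IPC, 2.0)
print(needed_ghz)
```

In this made-up case a design with half the IPC has to clock twice as high just to draw level, which is the kind of gap a low-IPC, high-clock design has to close.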

I believe the PPC in the X360 was somewhat similar.

This was, however, the exception to the rule: most newer CPU designs are faster than the ones before them, and next gen console CPUs are unlikely to differ.

Latest is this as far as I'm aware: http://www.vg247.com/2012/11/17/next-gen-xbox-details-contained-in-last-issue-of-xbox-world-rumor/

4 cores with 4 logical cores each.

This would point back to IBM: the Power7 architecture does 4-way SMT, and even at 1.6GHz it would completely demolish both Wii U and last gen consoles.

However, if they went AMD, they'd have 1 thread per core, guaranteed, as AMD has no SMT technology.

Asherdude

Member

Yeah, plus the PPC 750 is an old design that has its roots in 1998.

I don't know if they added any new instruction sets, though.

The current Core chips have their roots in the Pentium III which goes back to 1999. So, what's your point again?

I'm not really sure what Intel really did with the Core 2 Duo line... the performance jump was gigantic. Maybe human sacrifices and demon contracts were involved, thus why AMD failed to compete.

They recognized what a terrible idea the Netburst architecture family was, shitcanned it, and switched base to their (decent) mobile architectures.

This would point back to IBM: the Power7 architecture does 4-way SMT, and even at 1.6GHz it would completely demolish both Wii U and last gen consoles.

However, if they went AMD, they'd have 1 thread per core, guaranteed, as AMD has no SMT technology.

POWER7 is two-way SMT (and generally overkill - and far from efficient - for a console). A2 is four threads per core.

So, I guess now it's time for Microsoft fanboys (we can still use that term... right?!?) to defend their console. This then sheds light on whether the WiiU CPU really is that bad... actually scratch that, devs do say it sucks. Maybe they aren't used to its architecture? If I am correct, the WiiU still uses that old PPC tech from the early '00s, while this new CPU from Microsoft should be based on newer, much more efficient tech. Clock speed hasn't mattered shit for the past few years...

My old Core 2 Duo at 1.86GHz ran faster than a P4HT at 3.2GHz, even in core by core comparisons. Though it's weird console manufacturers are going with x86 now.... should be a fun gen.

Haunted

Member

This new carnival of stupid runs on more threads than the next gen systems could dream of.

*claps*

Projectjustice

Banned

higher clock rate is always better for performance... new architecture or not.

and it's not like this thing is gonna hold a candle against current high-end PC CPUs running in stock mode anyway.

Incorrect.

POWER7 is two-way SMT (and generally overkill - and far from efficient - for a console). A2 is four threads per core.

Thanks for pointing to A2, but Power7 has 4 threads per core: 8 cores with 32 threads and 32MB eDRAM. Maybe I'm just using that four-way SMT term wrong.

http://en.wikipedia.org/wiki/POWER7

DonMigs85

Member

The current Core chips have their roots in the Pentium III which goes back to 1999. So, what's your point again?

That may be so, but they still streamlined the pipeline, improved IPC and branch prediction and added new instruction sets like SSE3 and later. Other than being multicore and possibly having more cache we don't know how extensively the Wii U CPU was improved over the basic Gekko/Broadway chip.

That may be so, but they still streamlined the pipeline, improved IPC and branch prediction and added new instruction sets like SSE3 and later. Other than being multicore and possibly having more cache we don't know how extensively the Wii U CPU was improved over the basic Gekko/Broadway chip.

Definitely more cache, just fyi.

Thanks for pointing to A2, but Power7 has 4 threads per core: 8 cores with 32 threads and 32MB eDRAM. Maybe I'm just using that four-way SMT term wrong.

http://en.wikipedia.org/wiki/POWER7

You're quite right, Power7 cores are four-way multi-threaded.

Four A2 cores is much more likely for Durango (if the four cores with four threads each claim is true). Incidentally, the A2 cores in BlueGene/Q operate at exactly 1.6GHz, although there wouldn't be anything holding MS to that, as the architecture can be pushed up to around 3GHz.

nhlducks35

Member

I can't tell if some posters are joking, clueless, or stupid.

LiquidMetal14

hide your water-based mammals

People are going to realize that certain things like this will not affect the games looking amazing on PS4/Durango.

I guess it's hard to say something when so many things have to be kept under wraps when these platforms aren't even officially announced yet.

I guess it's hard to say something when so many things have to be kept under wraps when these platforms aren't even officially announced yet.

Just to go into a little more detail, I feel from various (and conflicting) rumours there are three possibilities:

IBM PowerPC A2 based

This fits the four-cores, four-threads-per-core rumour, and the 1.6GHz rumour. The cores are about 6.58mm² on a 45nm process, so on a 32nm process you could fit four cores and 8MB eDRAM cache within 30mm² or so, which is pretty small for a console CPU (about the same die size as Wii U's 45nm "Espresso" CPU). Power draw would be around 10W at 1.6GHz.

AMD Jaguar based

The Jaguar architecture is designed to go up to 2GHz, so 1.6GHz would be a reasonable clock for it in a console environment. It's a single-threaded architecture. At 28nm each core (including 512KB cache) is about 3.2mm². Designed for 2-4 cores, but an eight-core chip would come to about 30mm² or so as well. I can't find data on power draw, but ~10W would probably be a good guess here also.

AMD Bulldozer based

I'm including Piledriver, Steamroller, etc. here. The "eight-core"* Bulldozer is 315mm² at 32nm, which is fucking huge for a console CPU (you'll notice that it's literally ten times the size of eight Jaguar cores). It pulls 125W at its stock speed of 3.6GHz, and if they were using it in Durango they'd have to clock it down massively to prevent it melting the console (possibly even to 1.6GHz). In theory they could use a "four core" variant at about half the size, which would put it at roughly the same size as Xenon was at 90nm, but still be somewhat of a power-hog.

*I put eight-core in quotation marks because they aren't really eight-core chips. They have four modules on-board, and each module is something half-way between a dual-threaded core and two independent cores. A "four-core" variant would then be a dual-module variant, in reality.
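
For anyone wanting to check the die-size arithmetic above, here's a rough sketch. It assumes ideal scaling (area goes with the square of the process node), which real shrinks rarely hit, and it uses only the per-core and per-die figures quoted in this post. The function name is just made up for the example.

```python
def ideal_shrink(area_mm2, from_nm, to_nm):
    """Ideal-case area scaling: area goes with the square of the feature size."""
    return area_mm2 * (to_nm / from_nm) ** 2


a2_core_32nm = ideal_shrink(6.58, 45, 32)   # one A2 core moved from 45nm to 32nm
a2_four_cores = 4 * a2_core_32nm            # cores only, eDRAM not included
jaguar_eight = 8 * 3.2                      # eight Jaguar cores at 28nm, cache included
bulldozer_ratio = 315 / jaguar_eight        # whole Bulldozer die vs eight Jaguar cores

print(f"A2 core at 32nm:      {a2_core_32nm:.2f} mm2")
print(f"Four A2 cores:        {a2_four_cores:.1f} mm2")
print(f"Eight Jaguar cores:   {jaguar_eight:.1f} mm2")
print(f"Bulldozer vs Jaguar:  {bulldozer_ratio:.1f}x")
```

Under those assumptions four A2 cores come to about 13mm² before any eDRAM, and eight Jaguar cores to about 26mm² before any extra glue logic, so both sit comfortably around the 30mm² ballpark. The Bulldozer die lands at roughly 12 times the bare Jaguar cores, or about ten times the rounded 30mm² figure.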

There are also the rumours of an Intel chip, but I don't put much faith in it, as the logic seemed to be "It has AVX support, therefore it must be Intel" (not true, both Jaguar and Bulldozer support AVX), and it claimed it was an 8-core chip. Intel's only 8-core chips are extremely expensive Xeon server processors, and the only architecture they could use to cram 8 cores in a console-friendly die is Cedarview (Atom 32nm), which doesn't support AVX.

The other thing you'll notice is how the two most likely scenarios involve very small dies running on very few watts. This comes down to something I talked a bit about in the Wii U technical thread; transistor efficiency:

Of course, it's always better to have a more powerful GPU, and it's always better to have a more powerful CPU, but for a company like Nintendo, it's all about transistor efficiency. If they're deciding which component should be running physics code, they're choosing between adding X number of transistors to the CPU (let's say adding in some beefy SIMD units) and adding Y number of transistors to the GPU (let's say more SPUs) to get the same amount of performance. What they'll find is that X > Y, and that it costs more (probably a lot more) transistors to get the same performance out of the CPU as out of the GPU. Hence why they've combined a tiny CPU die with a far, far bigger GPU die, because the GPU's simply giving them more bang for their transistor buck. The same is true with heat/energy usage. They've got a finite (and pretty small) number of watts the system has to be able to run off, and they have to choose which gives them the better performance per watt, which is once again the GPU. Given the clock rates and die sizes, the GPU is obviously using up the vast majority of the system's power, and they've set it up like that because giving those precious watts to the GPU allows it to do more than the CPU would with them.

Replace Nintendo with MS or Sony and the point is still valid. Although their transistor and heat budgets will be much higher, they're still trying to optimise within finite budgets, and with modern GPU technology it makes sense to push the significant majority of transistors and watts to the GPU. Perhaps even more so for MS and Sony, as they're apparently using the more compute-friendly GCN GPU architecture.
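
The budget argument can be sketched as a tiny allocation problem. Everything numeric here is hypothetical: the 100W budget, the performance-per-watt figures and the 10W CPU floor are picked only for illustration, and the linear model only makes sense for work that parallelises well enough to run on either chip (physics being the example above).

```python
def total_perf(cpu_watts, gpu_watts, cpu_perf_per_watt, gpu_perf_per_watt):
    """Toy linear model: each chip delivers performance in proportion to its watts."""
    return cpu_watts * cpu_perf_per_watt + gpu_watts * gpu_perf_per_watt


BUDGET_W = 100                  # hypothetical total power budget
CPU_PPW, GPU_PPW = 1.0, 4.0     # hypothetical performance per watt for parallel work
MIN_CPU_W = 10                  # hypothetical floor so game logic still has a CPU

# Try every whole-watt split and keep the one with the highest total.
best_cpu_w = max(
    range(MIN_CPU_W, BUDGET_W + 1),
    key=lambda cpu_w: total_perf(cpu_w, BUDGET_W - cpu_w, CPU_PPW, GPU_PPW),
)
best_total = total_perf(best_cpu_w, BUDGET_W - best_cpu_w, CPU_PPW, GPU_PPW)
print(best_cpu_w, BUDGET_W - best_cpu_w, best_total)
```

As long as the GPU gets more done per watt, the best split in this model is always "give the CPU the minimum it needs and hand everything else to the GPU", which is the small-CPU, big-GPU shape described above.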

Dark_AnNiaLatOr

Member

That makes a lot of sense, Thraktor, thanks. That GCN comment just makes me so hyped to know the specs. I just can't believe I'll be seeing Durango ads this time next year, and maybe even Orbis. In one year's time, we'll have gained soooo much knowledge about what we want to know so badly.

User Name Here

Member

I wonder if people will finally start believing Shin'en (assuming this rumor is true of course)?

"As said before, today’s hardware has bottlenecks with memory throughput when you don’t care about your coding style and data layout. This is true for any hardware and can’t be only cured by throwing more megahertz and cores on it."

So increase memory bandwidth ...

Aren't they doing that with DDR4 or something?

Or is there then some issue with high latency or something?
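
On the bandwidth vs latency question, a rough sketch of why more bandwidth alone doesn't fix everything. This is a toy model where one memory request costs a fixed latency plus transfer time; the 100ns latency and the 25 and 50 GB/s bandwidth figures are hypothetical, and real memory systems overlap many requests at once, which this ignores.

```python
def fetch_time_ns(num_bytes, latency_ns, bandwidth_gb_s):
    """Time for one memory request: fixed latency plus transfer time."""
    return latency_ns + num_bytes / bandwidth_gb_s   # 1 GB/s is 1 byte per ns


LATENCY_NS = 100.0                 # hypothetical DRAM latency
SLOW_BW, FAST_BW = 25.0, 50.0      # hypothetical bandwidths in GB/s

for label, size in (("one 64-byte cache line", 64), ("1MB streamed block", 1_000_000)):
    slow = fetch_time_ns(size, LATENCY_NS, SLOW_BW)
    fast = fetch_time_ns(size, LATENCY_NS, FAST_BW)
    print(f"{label}: {slow:.2f}ns -> {fast:.2f}ns ({slow / fast:.2f}x faster)")
```

In this model doubling the bandwidth nearly halves the time for a big streamed block but barely moves the time for a single scattered cache line, because that one is almost all latency. That's the case where data layout matters more than raw bandwidth.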